A Week of AI Tools and UI/UX Product Research

Hello, we are Team AI Wonderland. Our team consists of five ETC students from Carnegie Mellon University, and our client is the Alice team.

“Alice” is a free and open-source educational programming environment and platform developed by Carnegie Mellon University. Users can create 3D animations, stories, and games by dragging and dropping code blocks rather than writing traditional text-based code.

Our goal is to explore how generative AI can be used to enhance the development process and enable fun and rapid creative experimentation.

AI tool research:

Since we didn’t have a concrete development plan for the first week, we started by researching the AI tools currently on the market that match the client’s requirements.

3D MODEL GENERATION

Shap-E: a conditional generative model for 3D assets that, unlike other recent models, directly generates the parameters of implicit functions. It is trained in two stages: first an encoder learns to map 3D assets to implicit function parameters, then a conditional diffusion model is trained on the encoder’s outputs. Compared with Point-E, a model that generates point clouds, Shap-E converges faster and reaches comparable or better sample quality, even though it works in a higher-dimensional output space. Trained on a large dataset of paired 3D and text data, it can generate diverse 3D assets quickly.

Google DreamFusion: a text-to-3D method that relies on a pretrained 2D text-to-image diffusion model instead of 3D training data. Large-scale labeled 3D datasets and efficient architectures for denoising 3D data are not available, so the method introduces a loss based on probability density distillation that lets the 2D diffusion model act as a prior for optimizing a parametric image generator. In a DeepDream-like procedure, a randomly initialized 3D model (a Neural Radiance Field, or NeRF) is optimized by gradient descent so that its 2D renderings from random angles achieve a low loss. The resulting 3D model can be viewed from any angle, relit, or composited into any 3D environment, which shows how effective pretrained image diffusion models are as priors.
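For readers who want to see what that loss looks like, here is the gradient as we understand it from the DreamFusion paper, where it is called Score Distillation Sampling (SDS). The symbols are not defined elsewhere in this post, so treat them as our notation: θ are the NeRF parameters, x = g(θ) is a rendered image, y is the text prompt, ε is the Gaussian noise added at timestep t, z_t is the noised image, ε̂_φ is the frozen diffusion model's noise prediction, and w(t) is a timestep-dependent weight.

$$
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}\bigl(\phi,\; x = g(\theta)\bigr)
= \mathbb{E}_{t,\epsilon}\!\left[\, w(t)\,\bigl(\hat{\epsilon}_\phi(z_t;\, y,\, t) - \epsilon\bigr)\,\frac{\partial x}{\partial \theta} \right],
\qquad z_t = \alpha_t\, x + \sigma_t\, \epsilon
$$

In words: render the scene from a random camera, add noise, ask the diffusion model what noise it sees given the prompt, and nudge the NeRF parameters along the difference between the predicted and the actual noise. The diffusion model itself is never updated.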

GET3D by NVIDIA: a generative model, motivated by the growing demand for scalable content creation tools for massive 3D virtual worlds, that synthesizes explicit textured 3D meshes with complex topology, detailed geometry, and high-fidelity textures. Unlike earlier 3D generative models, it produces content that can be used directly in 3D rendering engines without sacrificing geometric detail or texture quality. GET3D combines differentiable surface modeling, differentiable rendering, and 2D Generative Adversarial Networks, and its authors report results across a diverse range of objects and scenes that surpass previous methods.

Stable-dreamfusion: a PyTorch implementation of the text-to-3D model DreamFusion, powered by the Stable Diffusion text-to-image model.

IMAGE GENERATION:

Stable Diffusion: Stable Diffusion XL is a latent text-to-image diffusion model that can generate photorealistic images from any text prompt, letting anyone create detailed artwork within seconds.

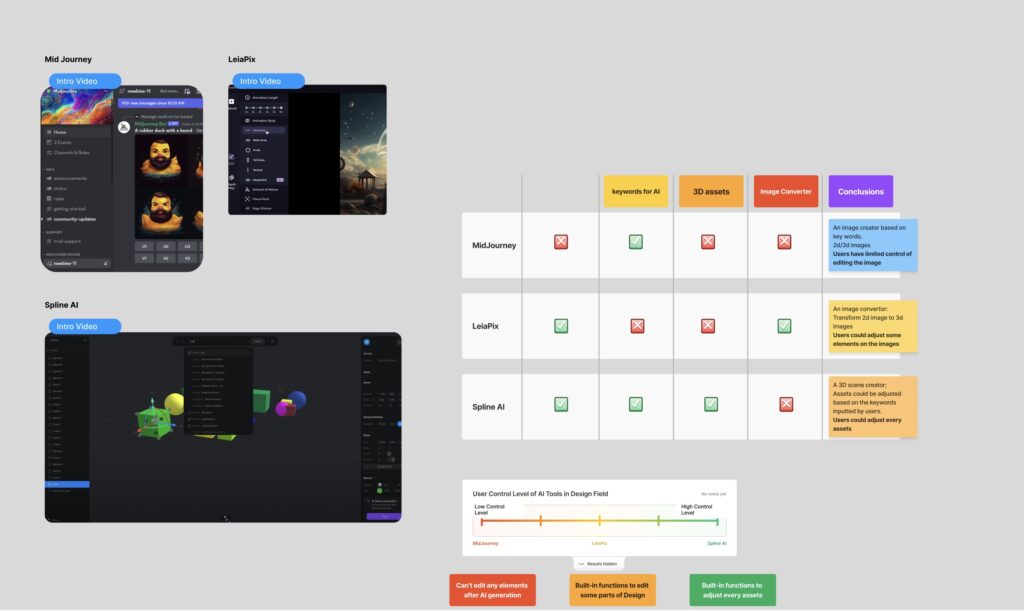

Midjourney: a generative AI service that converts natural language prompts into images, and one of many machine-learning-based image generators that have emerged recently. With Midjourney, you can create high-quality images from simple text prompts. You don’t need any specialized hardware or software either, because it works entirely through the Discord chat app.

TEXT GENERATION:

Meshy AI: a 3D generative AI toolbox that simplifies the creation of 3D assets from text or images. It can produce high-quality textures and 3D models within minutes. Meshy is aimed at designers, artists, and developers: whether you’re a 3D artist, game developer, or creative coder, it speeds up the process of generating 3D assets.

UI/UX research:

In this week’s UI/UX research, we examined the interfaces of common AI tools, analyzing how they differ and how they meet user needs. We compared them not only from an aesthetic perspective but also in terms of usability and user experience. We also looked at how interface design adapts to different domains, such as 2D versus 3D AI tools, to gain insight into the needs of different users.

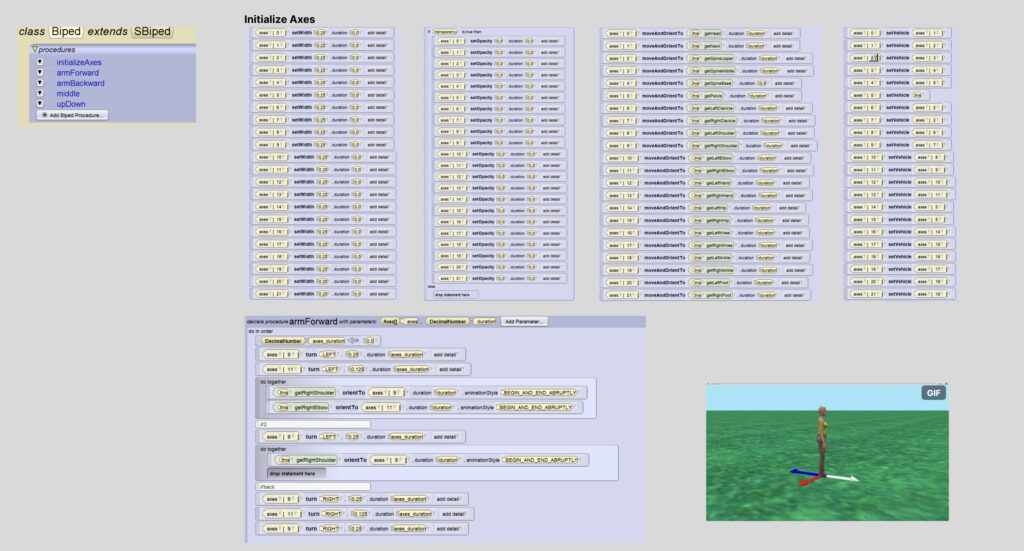

Alice Animation Research:

Since we had an idea for an AI enhancement based on Alice’s animation system, we decided to try out Alice’s animation capabilities this week. After hands-on experience with the tool, we have some feedback on its usability. First, the tool is time-consuming to operate and not very user-friendly. Second, a particular pain point surfaced while creating an animation program: we had to manually add all of the axes before we could start programming the animation. This manual step added complexity and lengthened the time and effort required for the initial setup.