Work Preparation:

This week, we compiled the feedback from playtesters at Playtest Day, and the whole team, including Pan-Pan, reviewed it together. Fortunately, with no prior knowledge of the story and after watching the film only once, playtesters were able to understand about 80% of it. Even those unfamiliar with the god Pangu perceived him as a kind of giant. Once the detailed character model is in, we are confident the story will come across even better.

For the visuals, we agreed that the AI-rendered film is somewhat too desaturated, so there is little contrast between colors. The gray-and-white palette makes the film hard to read and visually tiring for the audience. Also, most playtesters enjoyed the opening scene of the AI-rendered film, the colorful universe scene. We are therefore planning to add more color to the later scenes of the AI-rendered film, which we hope will make it more visually appealing. We do not yet know how this will affect noise control, so we will experiment with it over the next few weeks.
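To check whether adding color to the later scenes actually raises saturation, we could measure frames numerically instead of only judging by eye. Below is a minimal sketch using Python's standard `colorsys` module; `mean_saturation` is a hypothetical helper, and the pixel lists are made-up illustrative values, not data from our film.

```python
import colorsys
from statistics import mean


def mean_saturation(pixels):
    """Average HSV saturation (0.0 to 1.0) of a list of 8-bit (r, g, b) pixels."""
    # colorsys expects channels in the 0.0-1.0 range; index 1 of (h, s, v) is saturation.
    return mean(colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[1] for r, g, b in pixels)


# Invented sample pixels: a gray-and-white palette versus a colorful one.
gray_white = [(200, 200, 200), (240, 240, 240), (120, 120, 125)]
colorful = [(30, 60, 200), (220, 120, 40), (90, 200, 110)]

print(round(mean_saturation(gray_white), 3))
print(round(mean_saturation(colorful), 3))
```

To run it on an exported frame, the pixel list could come from an image library such as Pillow, e.g. `Image.open(path).convert("RGB").getdata()`.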

For the AI-textured film, playtesters loved the detail, both in the texturing and in the VFX. However, they also pointed out that the clouds and mountains blend together in the chaos scene, so we need to adjust the mountain texture slightly to make the two easier to tell apart.

Playtesters also mentioned that background music needs to be added as soon as possible, and that some of the current SFX could be changed to feel more powerful.

Progress Report:

- Updated the project website and weekly blogs.

- Added new research results into the documentation.

- Met with Pan-Pan and updated her on the sound feedback we received from Playtest Day.

- Updated the character animations.

- Adjusted some camera angles based on the character animations.

- Updated the Yin and Yang scene as well as the hands scene.

- Continued researching AI rendering.

- Updated the AI character texturing.

- Created a trailing VFX and implemented it in the film.

Research Results:

- AI rendering:

Last week we dropped the Canny unit, so it is time to bring in something new. Controlling lighting is an important topic that cannot be ignored when making animation, and the same is true for AI-generated animation.

First, we still begin with the same two units we have been using, normal and depth. This time, however, we need to change our way of thinking: instead of using our reference image as the image to be repainted, we feed the reference image directly into the ControlNet units.

The units do the same job as before, converting the reference images into normal and depth information. What changes is the image fed into the repainting step: this time it is a lighting reference.

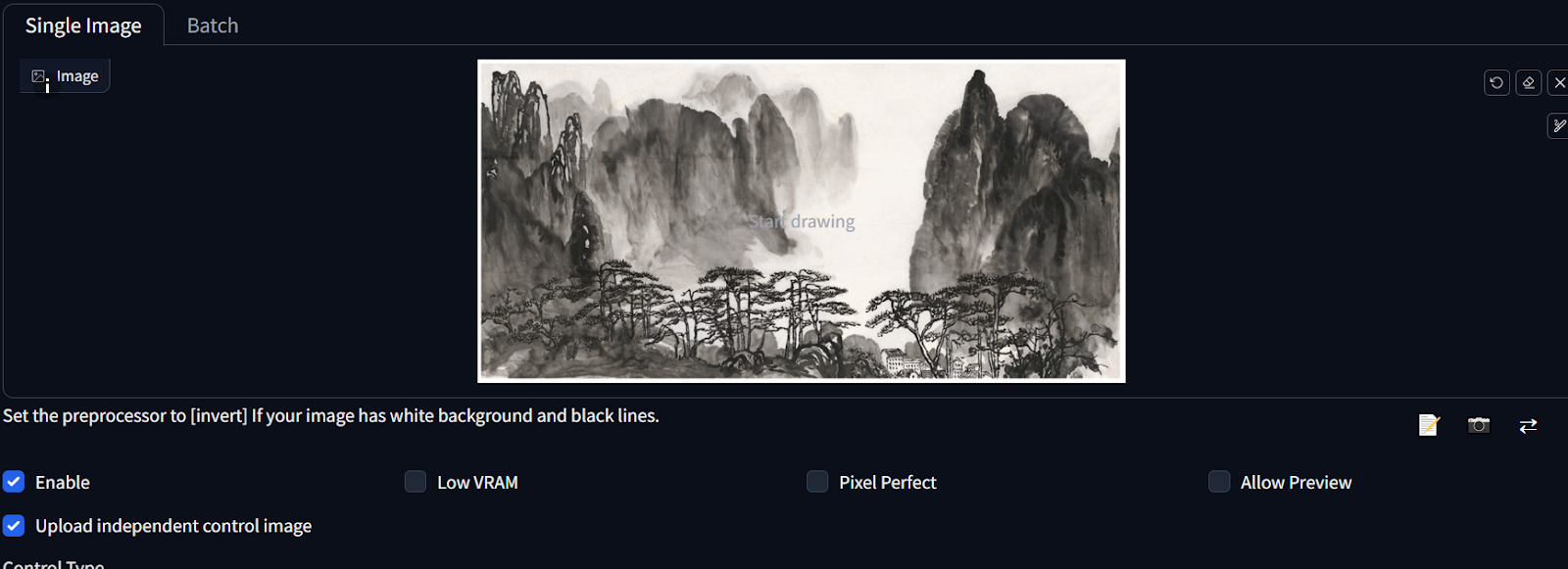

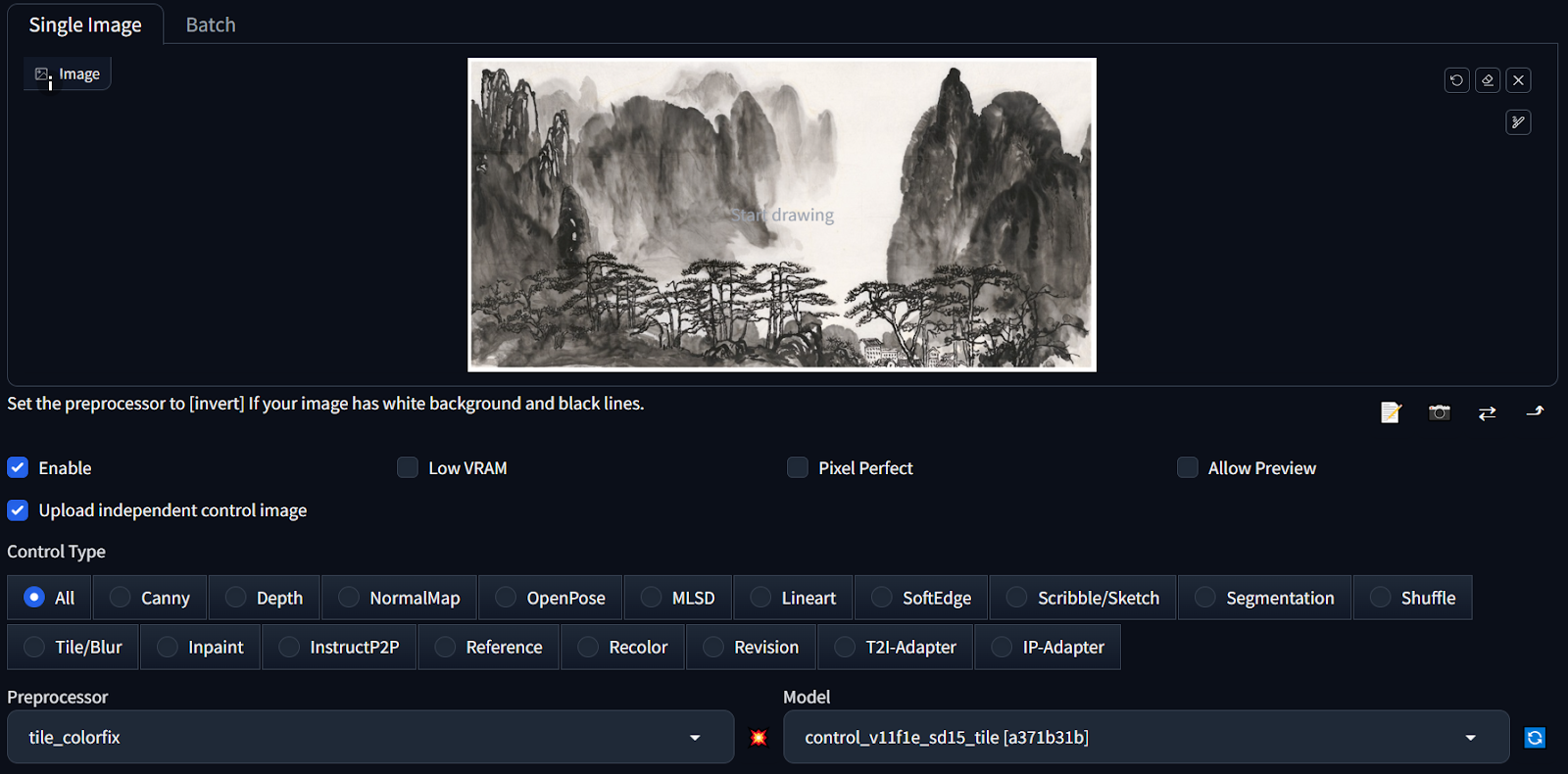

We use this photograph of mountaintops as a lighting reference to tell the AI which parts should be lit and which should not.
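The change in setup is essentially a swap of which image goes where. The sketch below is hypothetical: `ControlUnit`, `RenderJob`, `previous_job`, `reversed_job` and the file names are illustrative and are not the API of any Stable Diffusion front end. We assume the "repainted" image corresponds to the initial image of an image-to-image pass.

```python
from dataclasses import dataclass, field


@dataclass
class ControlUnit:
    kind: str           # ControlNet model type, e.g. "depth" or "normal"
    image: str          # image the unit extracts its control map from
    weight: float = 1.0


@dataclass
class RenderJob:
    init_image: str                                        # image that gets repainted
    units: list[ControlUnit] = field(default_factory=list)


def previous_job(graybox_frame: str) -> RenderJob:
    # Old setup: the gray-box frame is repainted AND drives the depth/normal units.
    return RenderJob(graybox_frame, [ControlUnit("depth", graybox_frame),
                                     ControlUnit("normal", graybox_frame)])


def reversed_job(graybox_frame: str, lighting_ref: str, weight: float = 1.0) -> RenderJob:
    # New setup: the lighting reference is repainted, while the gray-box frame
    # only feeds the depth/normal units that hold the composition in place.
    return RenderJob(lighting_ref, [ControlUnit("depth", graybox_frame, weight),
                                    ControlUnit("normal", graybox_frame, weight)])


job = reversed_job("graybox_0505.png", "mountain_tops.jpg")
print(job.init_image, [u.image for u in job.units])
```

The `weight` argument stands in for the control weight that the next step raises to keep the composition under control.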

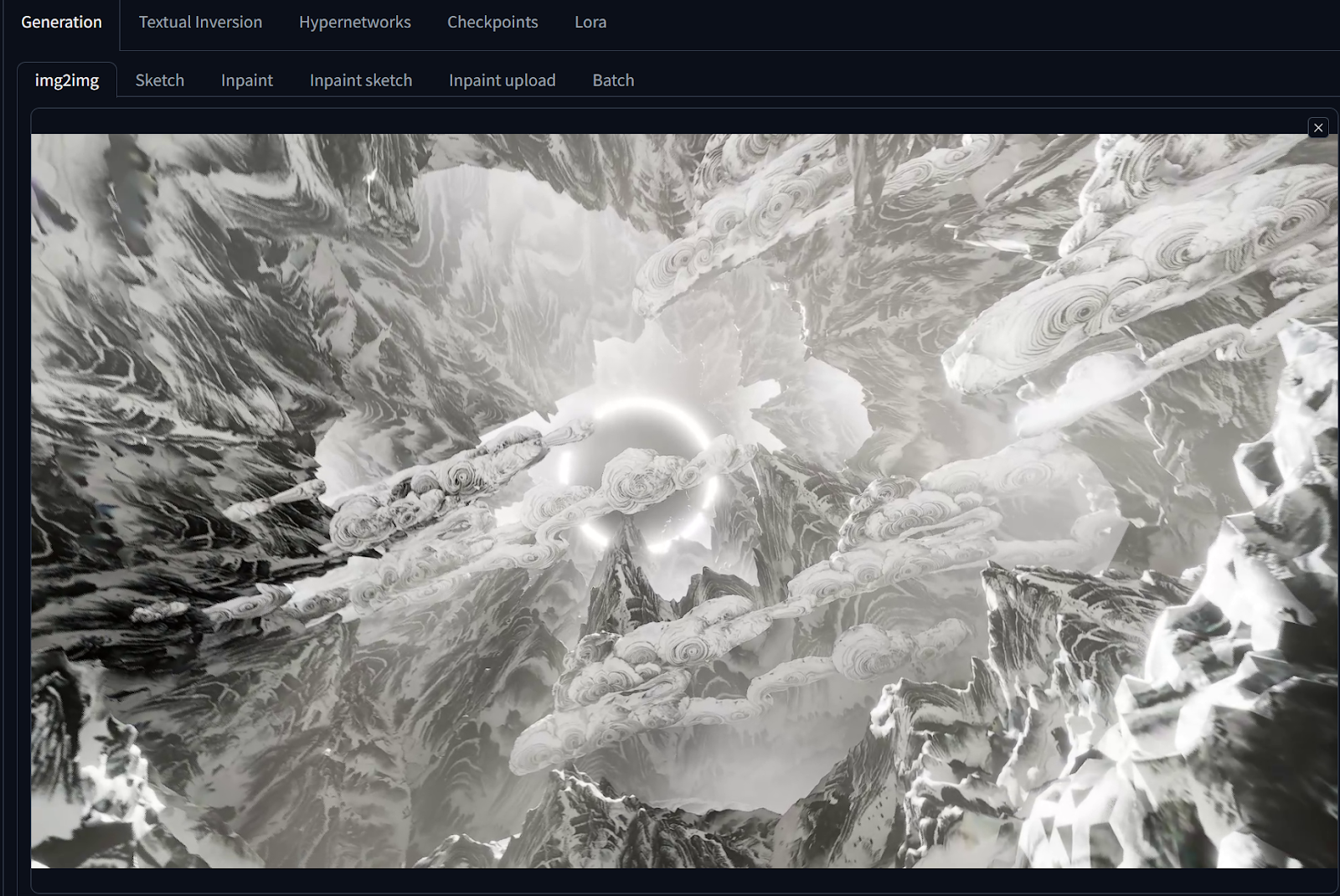

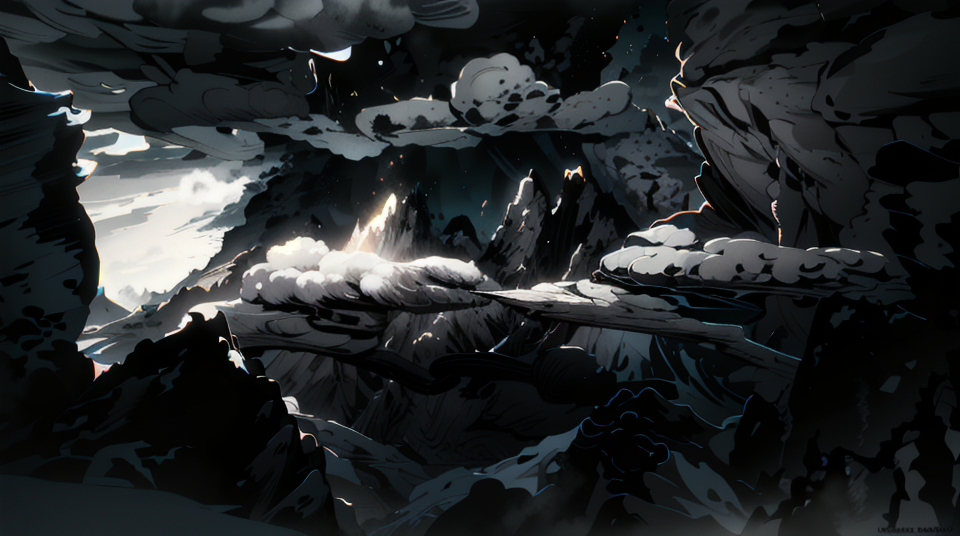

AI-generated image based on lighting reference

The generated image has more detail, and the lighting condition is clearly reflected in it. However, the composition is heavily influenced by the lighting reference image, so we need to increase the control weight of our ControlNet units to keep more control over the composition.

Weighted AI-generated image
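Why raising the weight helps: in a simplified view (this is how the diffusers library documents its `controlnet_conditioning_scale` parameter), the ControlNet's outputs are multiplied by the weight before being added to the UNet's intermediate features. A higher weight therefore lets the depth/normal structure override more of what the lighting reference would otherwise dictate. The toy sketch below uses plain lists of made-up numbers in place of real feature tensors; `apply_control` is a hypothetical helper.

```python
def apply_control(unet_features, control_residuals, weight):
    """Toy model: add ControlNet residuals, scaled by the control weight, to UNet features."""
    if len(unet_features) != len(control_residuals):
        raise ValueError("feature and residual lengths differ")
    return [f + weight * r for f, r in zip(unet_features, control_residuals)]


features = [0.2, -0.1, 0.5]    # made-up stand-in for one UNet feature map
residuals = [1.0, 0.5, -0.5]   # made-up stand-in for the depth/normal ControlNet output

# The larger the weight, the further the features are pushed toward the control signal.
for w in (0.5, 1.0, 1.5):
    print(w, apply_control(features, residuals, w))
```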

With fairly good settings for the depth and normal units, it is time to add something new to the ControlNet setup. This time we use the Tile unit, feeding it a black-and-white shanshui painting as its reference.

The Tile unit has two main functions: resampling and color fixing. Although color fixing is what we need here, we first tested how resampling works.

Resampled by Tile unit

Resampling effectively reduces the noise in the image while adding detail at the same time. It could serve as a denoising and upscaling method during post-processing.
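Our rough understanding of why resampling denoises: the Tile unit's resample preprocessor hands the model a downsampled version of the input, so pixel-level noise is averaged away and the diffusion model regenerates fine detail on top of it. The sketch only illustrates the averaging part with a box filter on a tiny grayscale grid. It is not the preprocessor's actual code, and `box_downsample` and `nearest_upsample` are hypothetical helpers.

```python
def box_downsample(img, factor):
    """Average each factor x factor block of a 2D grayscale image (list of rows)."""
    h, w = len(img), len(img[0])
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by factor")
    return [
        [sum(img[y + dy][x + dx] for dy in range(factor) for dx in range(factor)) / factor ** 2
         for x in range(0, w, factor)]
        for y in range(0, h, factor)
    ]


def nearest_upsample(img, factor):
    """Repeat every pixel factor times in both directions."""
    return [[v for v in row for _ in range(factor)] for row in img for _ in range(factor)]


# A flat 120-gray patch with +/-20 checkerboard "noise" (invented values).
noisy = [[100, 140, 100, 140],
         [140, 100, 140, 100],
         [100, 140, 100, 140],
         [140, 100, 140, 100]]

smooth = nearest_upsample(box_downsample(noisy, 2), 2)
print(smooth)
```

In the real pipeline the model then adds new detail on top of this smoothed input, which is why the result looks both cleaner and sharper.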

Our main focus this time, however, is color fixing with the Tile unit.

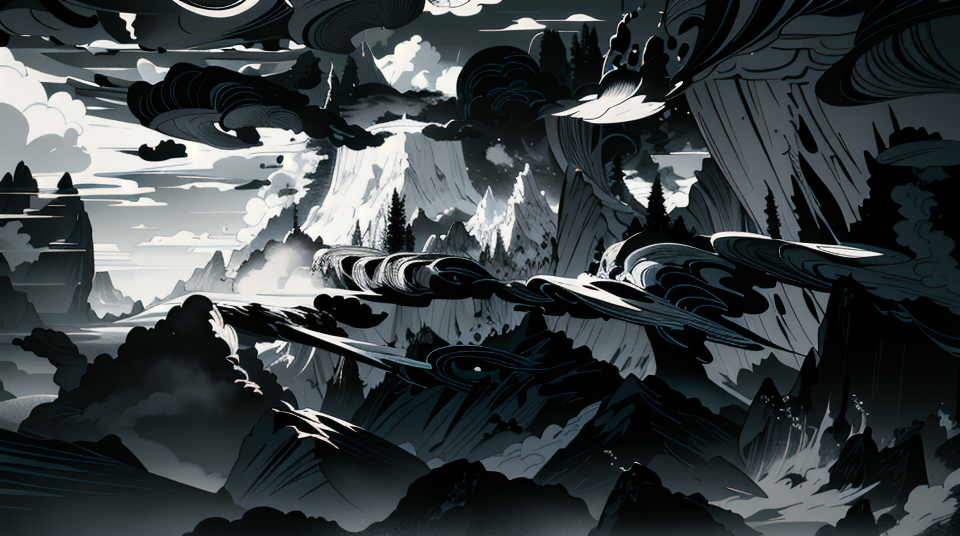

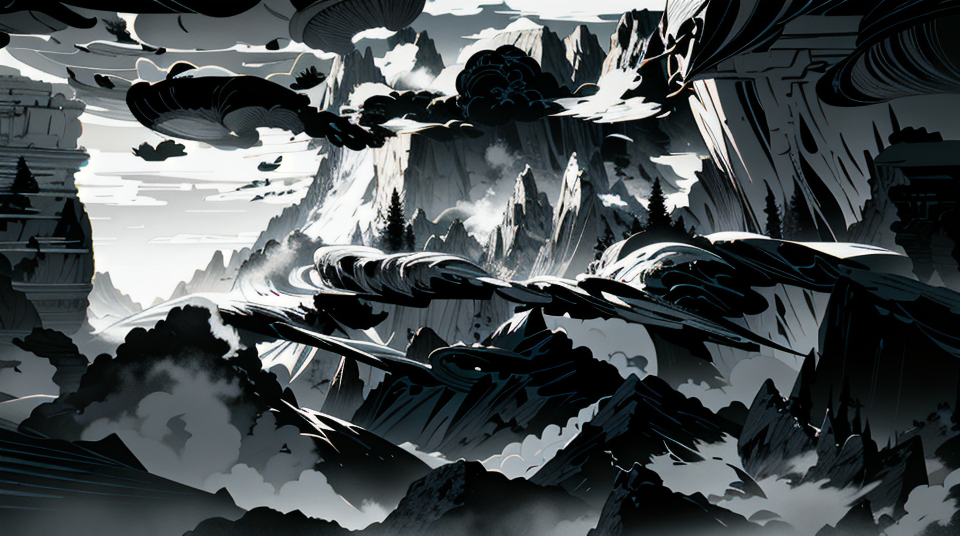

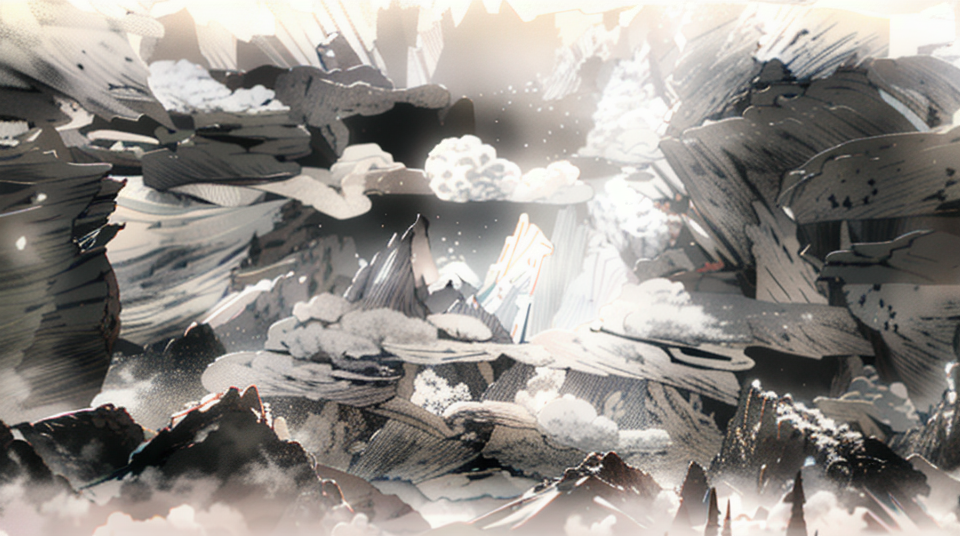

Color-fixed by Tile unit

The result is surprising: the rim light and the high-contrast background reflect the lighting condition very well. However, the overall color palette is not close enough to the reference image we fed to the Tile unit, so we adjusted the control weight to get better results.

Control Weight 1.2

Control Weight 1

Control Weight 0.4

Control Weight 0.6

Control Weight 0.7

After several experiments, a weight of around 0.7 gave the best results. Now that we have the right parameter for color fixing, we also need to use the right lighting reference.
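We picked 0.7 by eye. To make such sweeps more repeatable, one option would be to score each output by how far its average color is from the Tile reference. This is a hypothetical sketch: `palette_distance` and `best_weight` are illustrative helpers, the pixel values are invented, and mean-color distance is a crude proxy that ignores lighting and composition.

```python
import math


def mean_color(pixels):
    """Average (r, g, b) of a list of pixels."""
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))


def palette_distance(pixels, reference):
    """Euclidean distance between the mean colors of two pixel lists."""
    return math.dist(mean_color(pixels), mean_color(reference))


def best_weight(outputs, reference):
    """outputs maps control weight -> list of (r, g, b) pixels rendered at that weight."""
    return min(outputs, key=lambda w: palette_distance(outputs[w], reference))


# Invented data: a black-and-white reference and three renders at different weights.
reference = [(30, 30, 30), (220, 220, 220)]
outputs = {
    0.4: [(200, 150, 90)],
    0.7: [(130, 122, 118)],
    1.2: [(60, 60, 60)],
}
print(best_weight(outputs, reference))
```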

Taking frame 505 as a sample, we replaced the lighting reference with one we lit inside UE5.

UE5 lighting reference

Color-fixed and lighting-corrected AI-generated image

The result is quite promising: the model even generated fog and clouds based on the lighting reference.

Next, we took part of the sequence to test overall performance, pairing each gray-box image with its corresponding lit reference to get a better-lit result.
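Because every gray-box frame needs its own lit reference, a small script can match the two sets by frame number before batch rendering. This is a hypothetical sketch: the naming pattern (a frame number right before the file extension, e.g. `graybox_0505.png`) is an assumption, not our actual file naming.

```python
import re

# Digits immediately before the extension, e.g. "0505" in "graybox_0505.png".
FRAME_RE = re.compile(r"(\d+)(?=\.\w+$)")


def frame_number(name):
    m = FRAME_RE.search(name)
    if m is None:
        raise ValueError(f"no frame number in {name!r}")
    return int(m.group(1))


def pair_frames(graybox_files, lighting_files):
    """Return (gray-box, lighting reference) pairs sorted by frame number."""
    lit = {frame_number(f): f for f in lighting_files}
    pairs = []
    for g in sorted(graybox_files, key=frame_number):
        n = frame_number(g)
        if n not in lit:
            raise KeyError(f"missing lighting reference for frame {n}")
        pairs.append((g, lit[n]))
    return pairs


print(pair_frames(["graybox_0506.png", "graybox_0505.png"],
                  ["lit_0505.png", "lit_0506.png"]))
```

Failing loudly on a missing reference is deliberate: a silently skipped frame would show up as a jump in the final animation.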

Color-fixed and lighting-corrected AI-generated animation

The animation clearly follows the lighting atmosphere of the lighting reference. However, since we are playing it back at 30 fps, the noise is too strong to ignore, so we need denoising.

Denoised and color-corrected in DaVinci Resolve

Using the denoising method we mentioned previously, we get a considerably better animation than the original. The next step is to apply this lighting technique to the whole animation.

Animation with noises

Animation denoised using DaVinci Resolve
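We denoised in DaVinci Resolve, not in code. To illustrate why temporal denoising helps with frame-to-frame AI noise, here is a deliberately naive stand-in: a per-pixel moving average across neighbouring frames. This is not Resolve's algorithm (its temporal noise reduction, as far as we know, uses motion estimation), and a plain average like this would also blur real motion. `temporal_average` and `flicker` are hypothetical helpers, and the frame values are invented.

```python
def temporal_average(frames, radius=1):
    """Average each pixel over a window of neighbouring frames (clamped at the ends)."""
    out = []
    for t in range(len(frames)):
        lo, hi = max(0, t - radius), min(len(frames), t + radius + 1)
        window = frames[lo:hi]
        # zip(*window) groups the same pixel across all frames in the window.
        out.append([sum(px) / len(window) for px in zip(*window)])
    return out


def flicker(frames):
    """Mean absolute per-pixel change between consecutive frames."""
    if len(frames) < 2:
        return 0.0
    diffs = [abs(a - b) for f0, f1 in zip(frames, frames[1:]) for a, b in zip(f0, f1)]
    return sum(diffs) / len(diffs)


# Five 2-pixel "frames" of a static shot whose values jitter by 20 every frame.
noisy = [[100, 50], [120, 70], [100, 50], [120, 70], [100, 50]]
print(flicker(noisy), flicker(temporal_average(noisy)))
```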

Building on our AI rendering pipeline, we tried to add more detail to polish the animation as much as possible. One of the most important factors is lighting, which has always been hard to keep consistent with AI. By reversing the way we have been generating images, we can use a lighting reference to tell the AI how the scene should be lit, and by using the Tile unit inside ControlNet, we can fix the colors to make them more consistent. The next step is to further polish the current result.

- AI texturing:

We used Meshy to AI-texture the hand and full-body models we got.

Prompt: a hand –style ancient, 4k, hdr, high quality, hand texture

Prompt: a hand –style chinese ink painting, black and white, 4k, hdr, high quality, hand texture

Prompt: a hand –style ancient, 4k, hdr, high quality, hand texture

Prompt: a hand –style ancient, 4k, hdr, high quality, hand texture

Prompt: BLACK AND WHITE HAIR ANCIENT, WATER PAINTING STYLE

Plan for next week:

- Meet with Pan-Pan for sound support.

- Continue working on the website and weekly blogs.

- Finalize Pangu’s movement animations.

- Work on Pangu’s facial expressions as well as hair and beard movements.

- Research more on AI rendering and iterate on rendering the film.

- Improve the camera angle and the background of the last scene.

- Improve the Yin & Yang scene and use different layers to improve the crack movements.

- Add the trailing VFX into the film.

- Provide updated versions of both films to Pan-Pan on time.

Challenge:

- Hard to estimate how long each approach will take and what potential problems we will encounter throughout the semester.

- Hard to estimate the cost of AI tools and how effective they will be.

- Need to think of better ways to document our research process.

- Need to make sure we have some powerful shots in the film.

- Need to prepare for the ETC Soft Opening.

- Need to keep Pan-Pan on the same page for sound production.

- AI modeling has proven unsuccessful, so we need to shift our focus to AI rigging for approach 2.

- Many changes to the character animations, which will also affect the camera sequence.