Work Preparation:

On Wednesday, we met with the instructors to discuss our progress. First, we agreed to use ShotGrid for video review, since it makes annotation easier. John will follow up with more tips and instructions on how to use it.

We then showed the instructors our animatic and discussed the storyline further; some details are still missing in the transitions. We also showed the cloud models and the updated camera sequence. The scene where Pangu lifts up the sky still needs more discussion.

We tried out several different tools for AI rendering, and some of the results are rather promising. Next week, we will wrap up the camera sequence and continue to compose all three films.

Progress Report:

- Updated the project website and weekly blogs.

- Updated the storyboard and the animatic.

- Talked with Pan-Pan about sound support.

- Continued experimenting with the clouds and cloud VFX.

- Continued researching AI rendering.

- Finalized the camera angles and the film sequence.

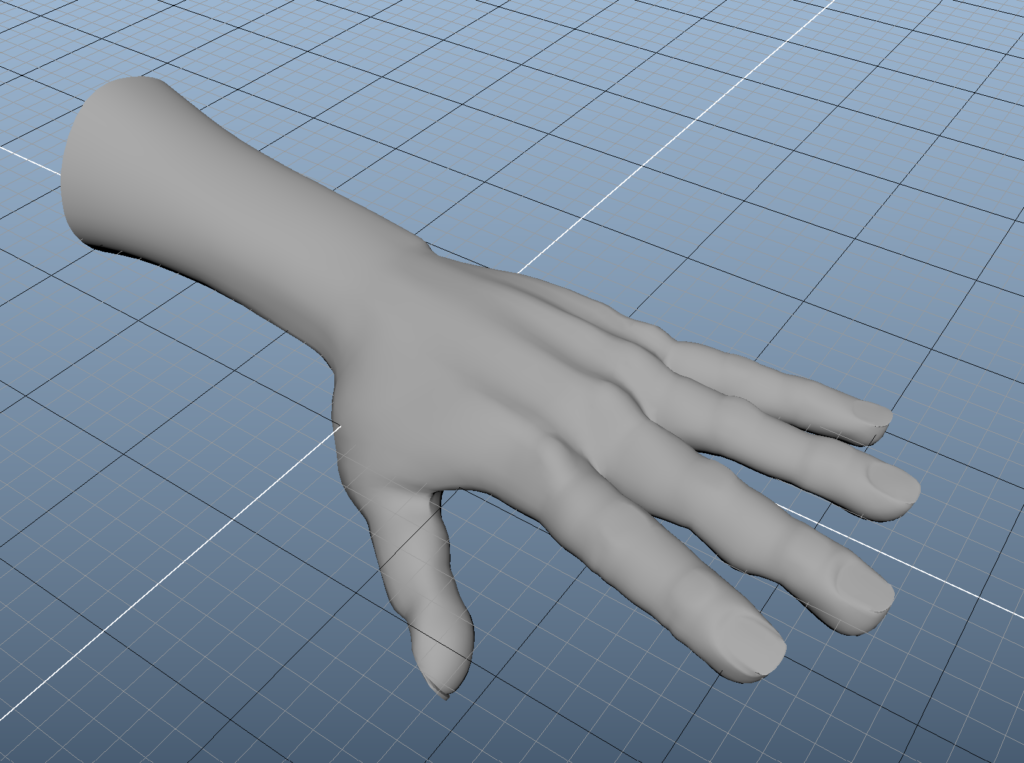

- Updated Pangu’s hand model.

- Started on Pangu’s full-body model.

Research Results:

- AI rendering:

This week, we tried combining Stable Diffusion 1.6, ControlNet v1.1.313, and AnimateDiff for img2img-based and text2img-based animation generation.

AnimateDiff animates characters well in img2img mode when the input images show the character large in the frame with a clearly visible face. Resolution also affects the final result: with our current 16 GB VRAM graphics card, 512 pixels is usually the most workable setting, though it is far from the best quality. An upscaler can raise the resolution but cannot fix the quality (the detail of objects within the images). ControlNet strongly interferes with AnimateDiff and can largely stop it from making the images move, especially the canny and openpose models, which lock the character’s pose and framing.
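To make the resolution limit a bit more concrete, here is a rough back-of-the-envelope sketch, not something we measured. It assumes the standard Stable Diffusion 1.x setup, where the VAE shrinks each side of the image by 8 and the latent has 4 channels, plus a hypothetical 16-frame AnimateDiff clip; the helper names are our own. It shows that going from 512 to 768 pixels more than doubles the latent positions per frame, and with plain (non-memory-efficient) self-attention the cost grows with the square of that count.

```python
from typing import Tuple

# Assumptions (not measured by us): the SD 1.x VAE downsamples each side by 8
# and produces 4 latent channels; 16 frames is a hypothetical clip length.
VAE_DOWNSAMPLE = 8
LATENT_CHANNELS = 4


def latent_shape(width: int, height: int, frames: int = 16) -> Tuple[int, int, int, int]:
    """Return (frames, channels, latent_height, latent_width) for a clip."""
    if width % VAE_DOWNSAMPLE or height % VAE_DOWNSAMPLE:
        raise ValueError("width and height must be multiples of 8")
    return (frames, LATENT_CHANNELS, height // VAE_DOWNSAMPLE, width // VAE_DOWNSAMPLE)


def positions_per_frame(width: int, height: int) -> int:
    """Number of spatial latent positions per frame (latent_height * latent_width)."""
    _, _, h, w = latent_shape(width, height, frames=1)
    return h * w


def relative_attention_cost(width: int, height: int, base: int = 512) -> float:
    """Naive self-attention cost relative to a base x base frame.

    Plain self-attention compares every position with every other one,
    so its cost grows with the square of the position count.
    """
    ratio = positions_per_frame(width, height) / positions_per_frame(base, base)
    return ratio ** 2


if __name__ == "__main__":
    for side in (512, 768, 1024):
        cost = relative_attention_cost(side, side)
        print(f"{side}x{side}: latent {latent_shape(side, side)}, ~{cost:.1f}x attention cost")
```

Running it prints about 1.0x, 5.1x, and 16.0x for 512, 768, and 1024 pixels. These figures ignore memory-efficient attention (such as xformers), the VAE decode, and the motion module, so they only illustrate the trend, not our actual VRAM usage.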

Animation Production:

- Animatic:

This week, we finalized the animatic, including the shots and camera sequences, so that both the team member working on greyboxing and the one working on background music can rely on it.

- Pangu Hand Model:

Our artist iterated on Pangu’s hand model to make it more realistic and more muscular, with a more powerful feel.

- Updated Greybox Camera Sequence:

We updated the greybox camera sequence based on the instructors’ feedback to make some transitions more connected and some scenes more dynamic and powerful.

Plan for next week:

- Continue working with Panpan on sound support and think about the background music style we want.

- Talk with the instructors about compensation for sound support.

- Prepare for the halves presentation.

- Continue working on the website and weekly blogs.

- Start replacing the hand models with AI models for approach 2.

- Continue modeling Pangu’s full body.

- Use the greybox to AI-render the film.

- Finish shooting the UE films for approaches 2 and 3.

Challenge:

- Hard to estimate how long each approach will take and what problems we will run into over the semester.

- Hard to estimate the cost of AI tools and how effective they will be.

- Need to think of better ways to document our research process.

- Need to show all three approaches and their differences at halves, and convince the faculty that all three films can be completed by finals.

- Need to test whether people understand the story.

- Need to make sure we have some powerful shots in the film.

- No sound designer on the team.