Work Preparation:

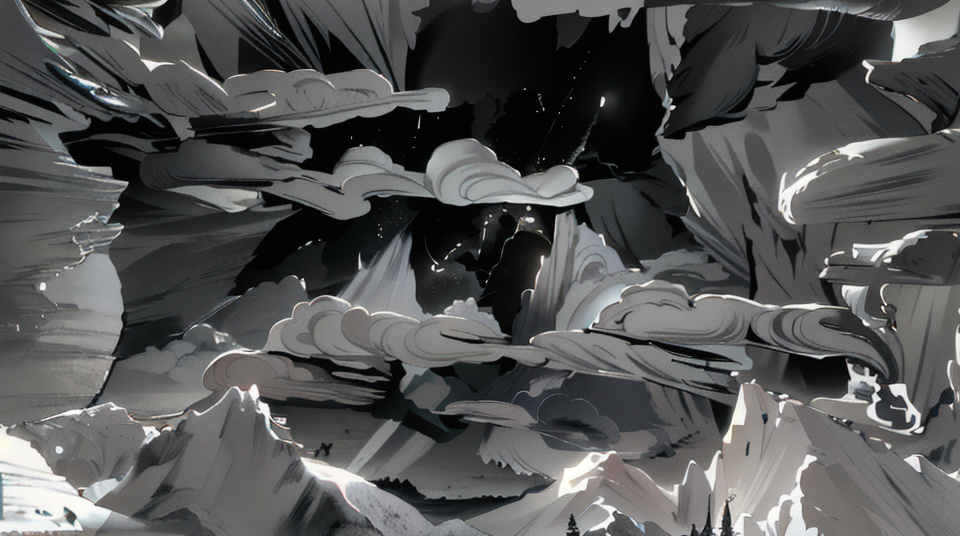

We just came back from fall break this week. Two members were sick at the beginning of the week, so some work needs to be made up later in the week. We made several attempts to update and improve the approach 3 film. We created a new cloud VFX and added it to the film, so that when Pangu lifts up the cloud, it drips from his fingers. We also separated the film background into layers and animated them, so the film looks more dynamic and less flat. The mountains in the distance also have more depth now. We are also researching more AI rendering possibilities, trying to add more detail to the film while reducing noise.

Progress Report:

- Updated the project website and weekly blogs.

- Added new research results to the documentation.

- Met with Panpan for sound support and scheduled a weekly meeting time.

- Implemented the new cloud VFX into the film.

- Added more layers in the background and animated the background.

- Continued modeling the Pangu face and researching the modeling application.

- Continued researching AI rendering.

Research Results:

- AI rendering:

Stable Diffusion 1.6 + ControlNet v1.1.313, img2img-based animation style transformation. Building on week 6’s progress, we need to find ways to improve detail.

Since we can use at most 3 ControlNet units at a time, it is important to choose them wisely. Canny is a powerful unit that detects the edges (wireframes) in the image, which really helps us tell objects apart. However, we think it may be time to stop using it, since the other two units we have been using, depth and normal, could do a fairly good job even without it.

We first tested generating an image with all 3 units. The units clearly helped recognize the objects, but the image lacked the detail needed to define them well. Then we kept everything else the same and boosted the canny unit so it would pick up more edges, to see whether that would help.

We could hardly tell whether the AI-generated result had improved, although the canny unit clearly detected more edges than in the previous test. This is a good point to question whether canny meets our needs, since its job seems replaceable by normal and depth, which provide even more information than canny does.

Next, we disabled canny and used only depth and normal to generate the image.

Now the result is clear: normal and depth can recognize the objects while also conveying surface and depth information. There is even more detail with canny disabled, since the generation is no longer constrained to the detected edges and the AI can fill in those spaces.
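The unit choices compared above can be written down as small configurations, which makes it easier to track which combination produced which result. This is only a sketch: `ControlUnit`, `active_units`, and `free_slots` are hypothetical names made up for illustration and are not part of Stable Diffusion or ControlNet. The limit of 3 units and the unit names come from our tests.

```python
from dataclasses import dataclass

# Limit on simultaneous ControlNet units in our setup (from the tests above).
MAX_UNITS = 3


@dataclass(frozen=True)
class ControlUnit:
    """Hypothetical record of one ControlNet unit (illustration only)."""
    name: str              # e.g. "canny", "depth", "normal"
    enabled: bool = True


def active_units(units):
    """Return the enabled units, rejecting setups that exceed the limit."""
    active = [u for u in units if u.enabled]
    if len(active) > MAX_UNITS:
        raise ValueError(f"at most {MAX_UNITS} units can be active at once")
    return active


def free_slots(units):
    """Number of unit slots still available for experiments."""
    return MAX_UNITS - len(active_units(units))


# First test: all three units enabled.
baseline = [ControlUnit("canny"), ControlUnit("depth"), ControlUnit("normal")]

# Later test: canny disabled, depth and normal kept.
no_canny = [
    ControlUnit("canny", enabled=False),
    ControlUnit("depth"),
    ControlUnit("normal"),
]

print([u.name for u in active_units(no_canny)])  # ['depth', 'normal']
print(free_slots(no_canny))                      # 1
```

Keeping each tested combination as data like this gives us a simple record to paste into the research documentation next to the generated images.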

It was time to test this new method for animation generation. In the end, we got a result with more detail but less consistency (it’s a trade-off).
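So far we have judged consistency by eye. One rough way to put a number on it would be to average the per-pixel difference between consecutive frames: the higher the score, the more the image changes from frame to frame. The sketch below is an assumption on our side, not something already in our pipeline. The function names are made up, and frames are represented as plain nested lists of grayscale values to keep the example self-contained.

```python
def frame_difference(a, b):
    """Mean absolute per-pixel difference between two grayscale frames."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("frames must have the same shape")
    count = sum(len(row) for row in a)
    if count == 0:
        raise ValueError("frames must not be empty")
    total = sum(abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return total / count


def flicker_score(frames):
    """Average difference between consecutive frames (0.0 means no change)."""
    if len(frames) < 2:
        return 0.0
    diffs = [frame_difference(f0, f1) for f0, f1 in zip(frames, frames[1:])]
    return sum(diffs) / len(diffs)


# Tiny 2x2 example frames (made-up values, not real render output).
steady = [[[10, 10], [10, 10]]] * 3
flickery = [
    [[10, 10], [10, 10]],
    [[20, 20], [20, 20]],
    [[10, 10], [10, 10]],
]

print(flicker_score(steady))    # 0.0
print(flicker_score(flickery))  # 10.0
```

This score also counts intended motion, so it is only meaningful when comparing two renders of the same shot, for example the render with canny against the render without it.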

We have a basic AI rendering pipeline built, but it needs to be refined. Dropping canny looks like a promising way to improve detail, and the loss of some consistency is acceptable because we can denoise the result. This also leaves one ControlNet unit slot vacant, and we will keep experimenting to find its best candidate.

Plan for next week (after fall break):

- Meet with Panpan for sound support.

- Continue working on the website and weekly blogs.

- Finalize modeling the Pangu face and start rigging.

- Research more on AI rendering and iterate on rendering the film.

- Work on the Yin & Yang debris and have them implemented in the film.

- Improve the background environment more.

- Implement the Yin & Yang model into the film and its cracking effect.

- Prepare for the Saturday ETC Playtest Day.

Challenge:

- Hard to estimate how long each approach will take and what potential problems we will meet throughout the semester.

- Hard to estimate the cost for AI tools, and how effective they will be.

- Need to think of better ways to document our research process.

- Need to make sure we have some powerful shots in the film.

- Need to prepare for the ETC Playtest Day.

- Need to keep Panpan on the same page for sound production.

- AI modeling has proven unworkable, so we need to shift focus to AI rigging for approach 2.

- People got sick after fall break, so we need to figure out how to make up for the lost time.