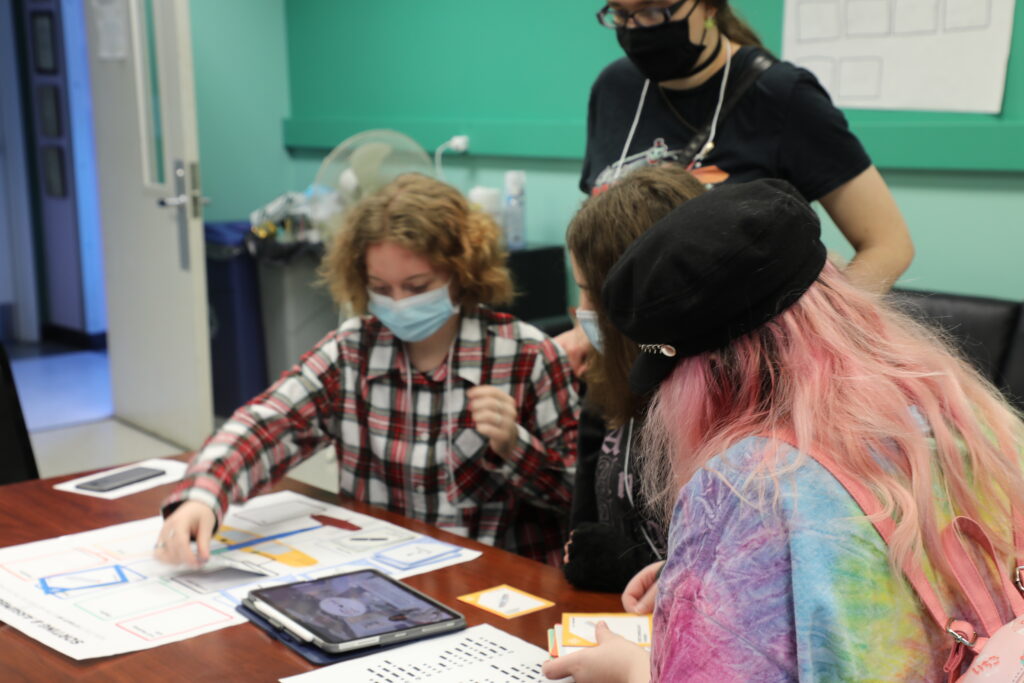

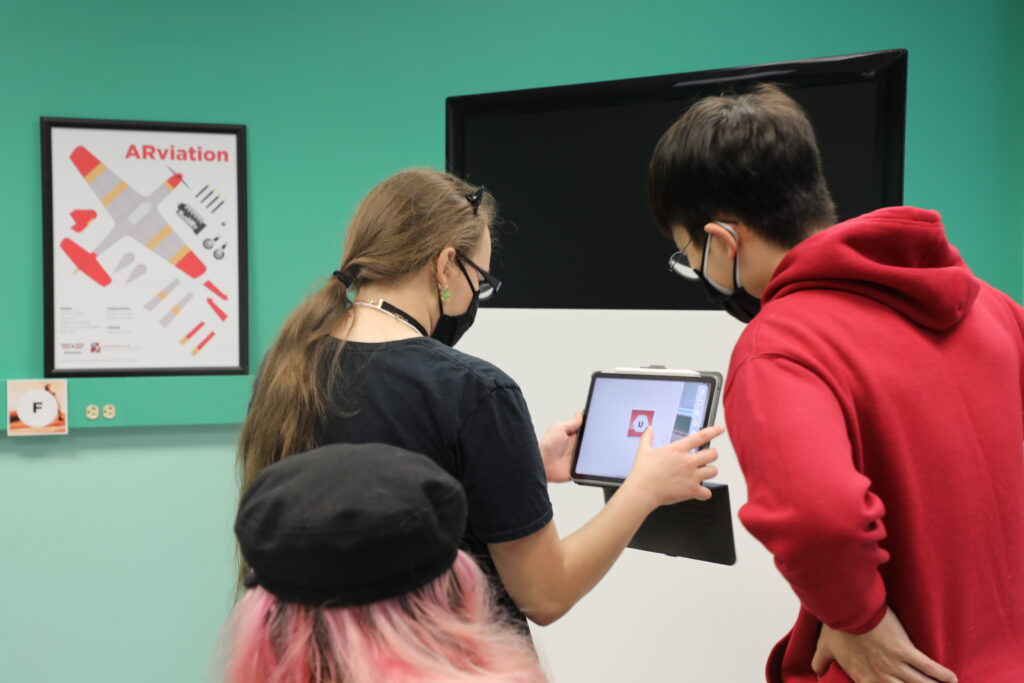

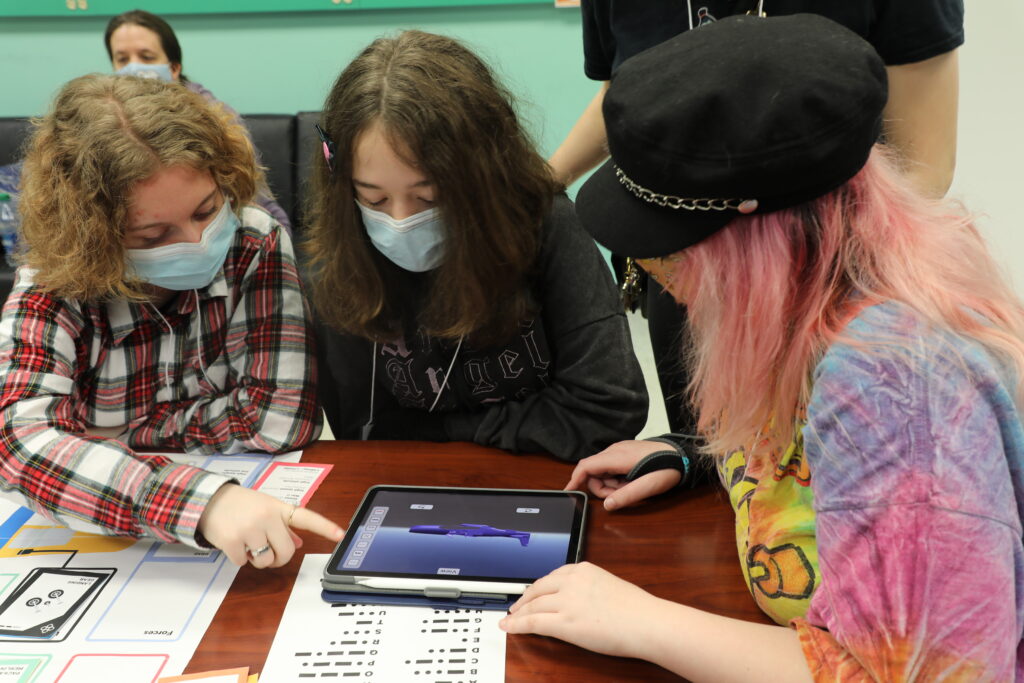

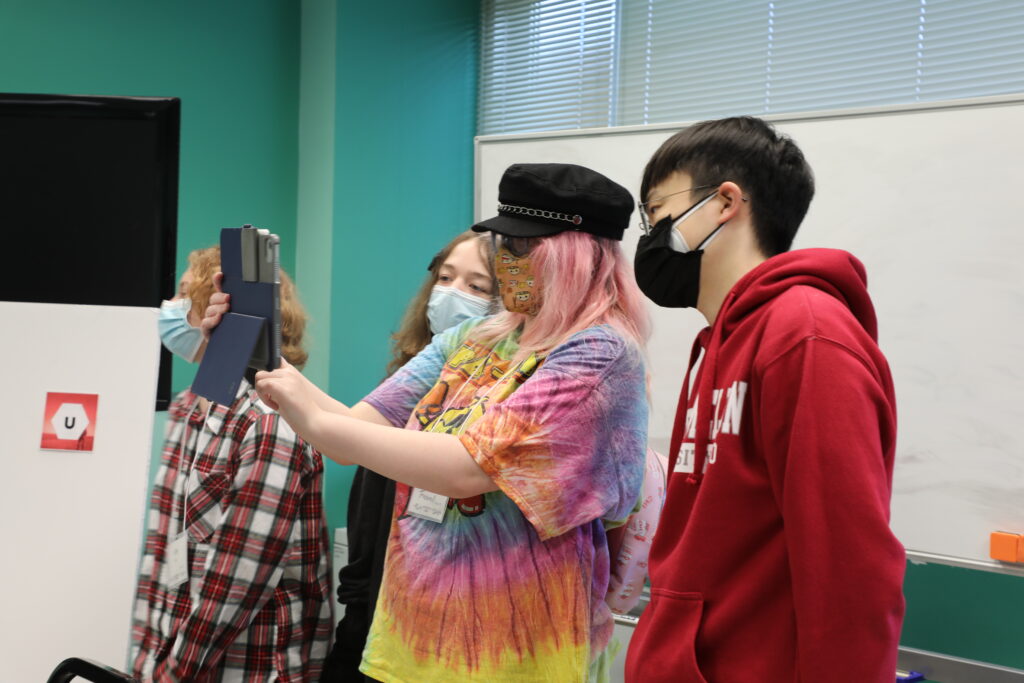

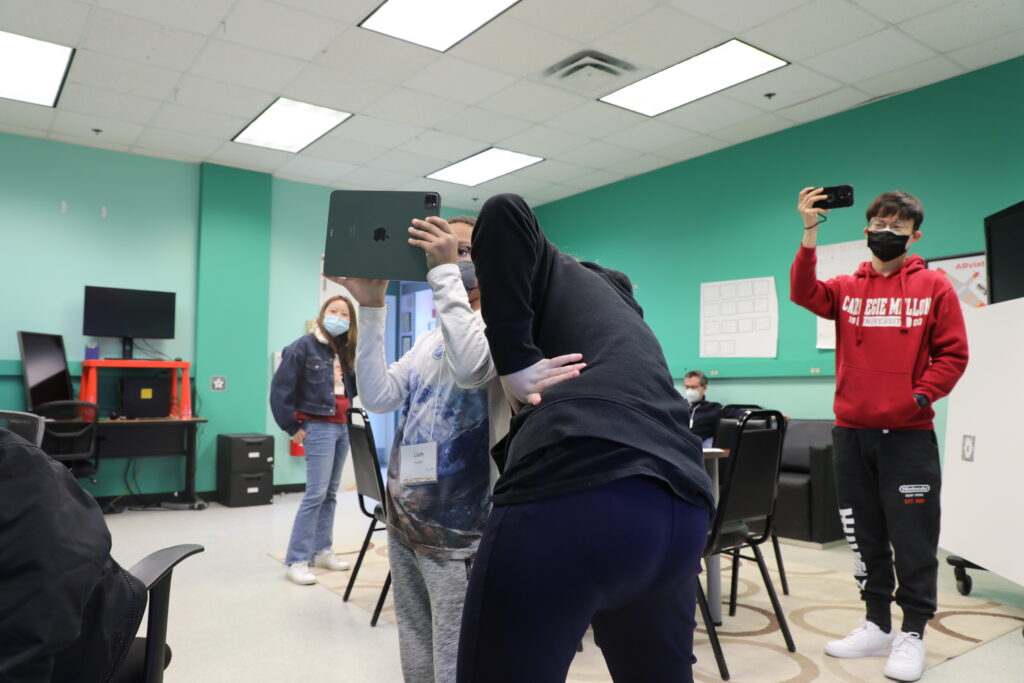

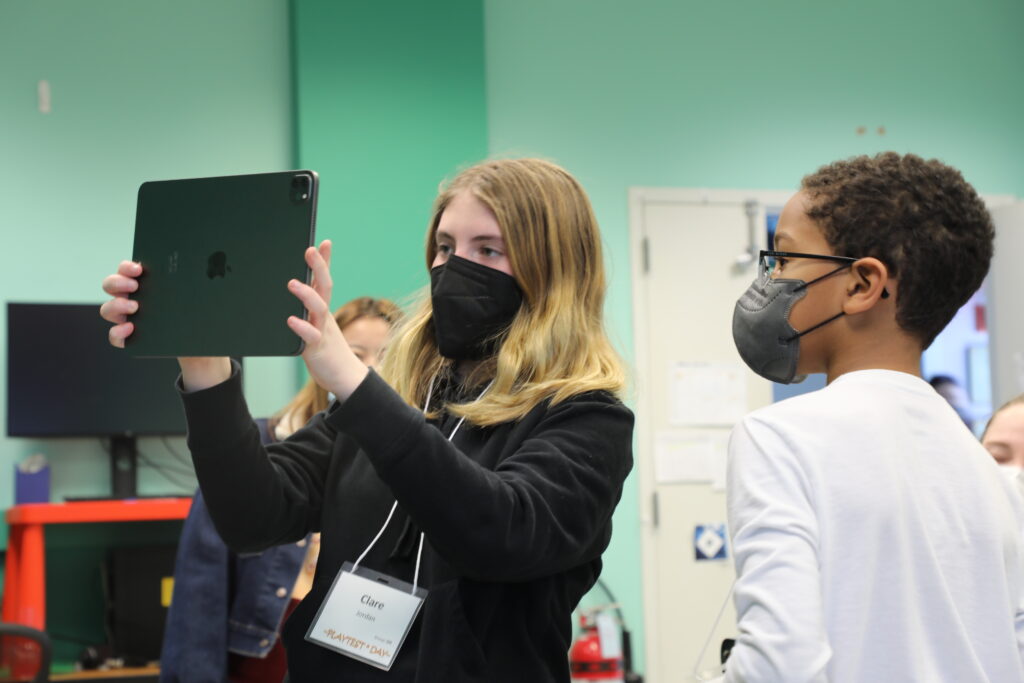

We started this week off with the ETC’s Playtesting Day on Saturday, April 2nd. We were scheduled to meet two groups of awesome playtesters, and thus needed to have a solid version of the experience for them.

Playtesting Day

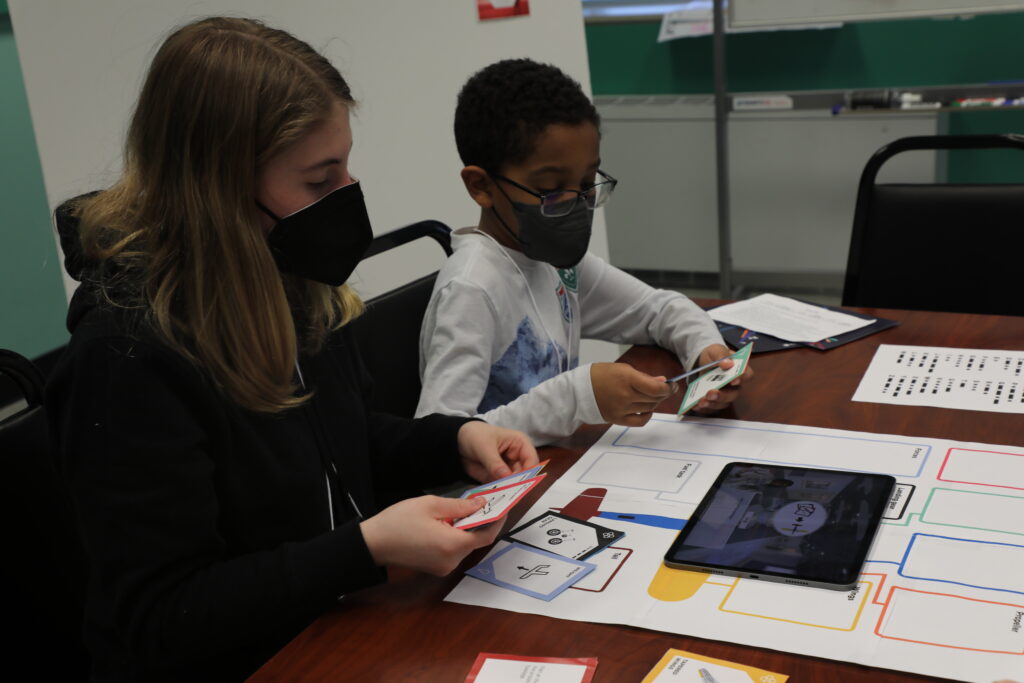

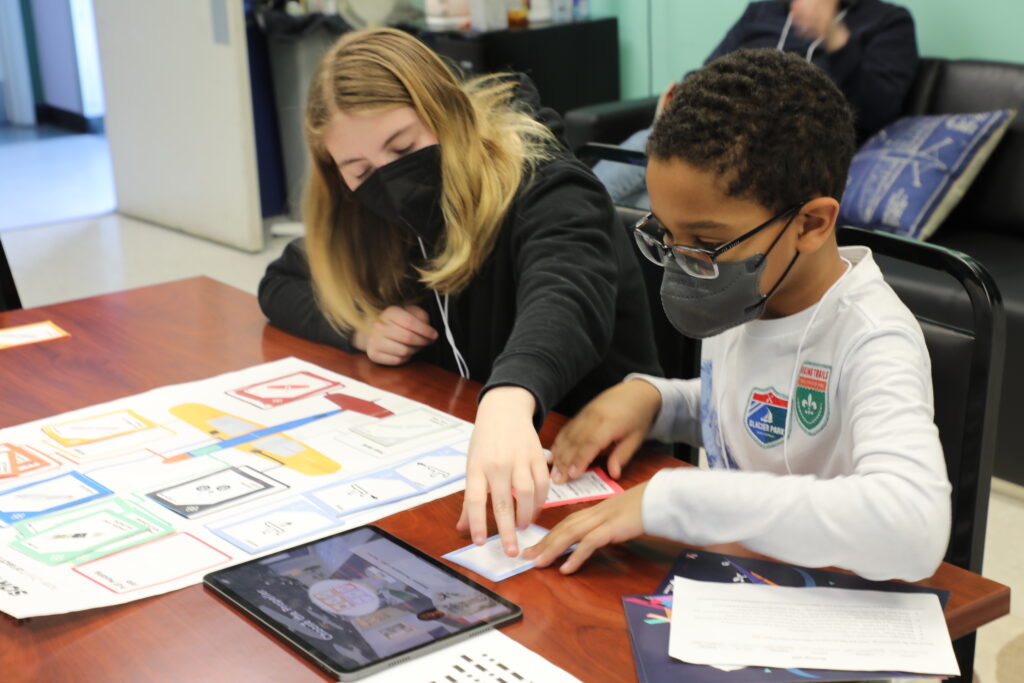

Our playtester groups were in the age ranges of 12-19 and 10-13. For the most part, these ages are much older than our target demographic (which is our “fault” – we changed our target demographic relatively late into this process), but we still thought we could get valuable information from their sessions. We wanted to find out the following:

- Is our experience fun? Even if designed for younger children, we wanted to check if the puzzle-like elements of the experience were engaging for anyone.

- Does our experience foster collaboration? Will one person take control and essentially go through the activity on their own, or is teamwork necessary?

- Is our experience difficult and/or confusing? If guests older than our target audience are confused or struggling, the experience will be too hard for younger kids.

- Is our experience educational and a source of new knowledge? Even when the guests are older than intended, will they learn new things?

- Is our UI clear and easy to use?

- Is Charlie a good guide and narrator? What do guests think of her role?

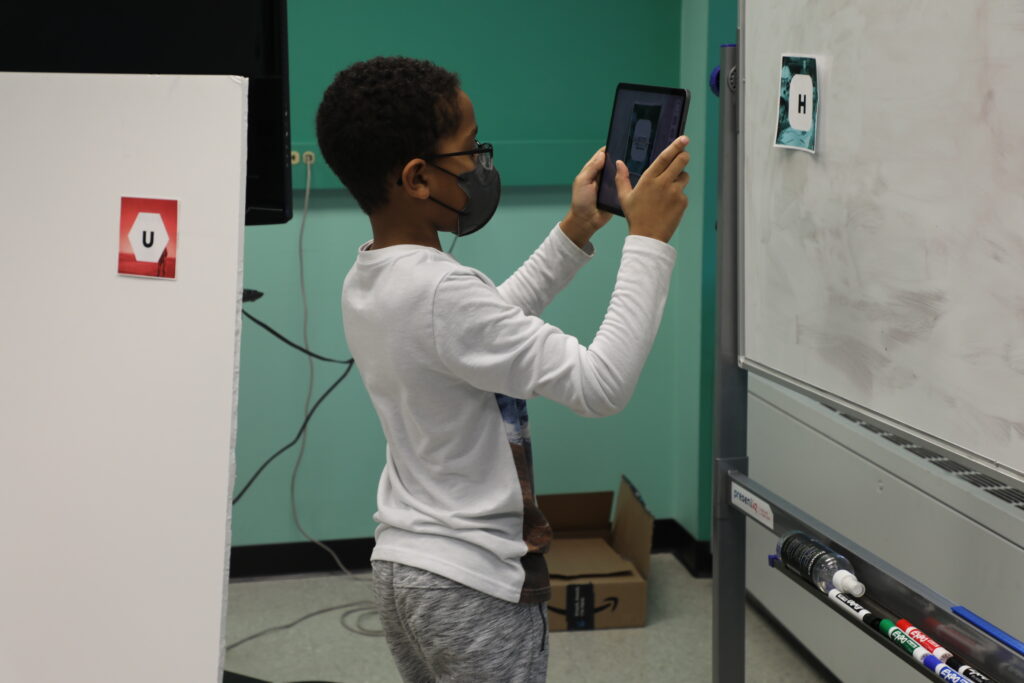

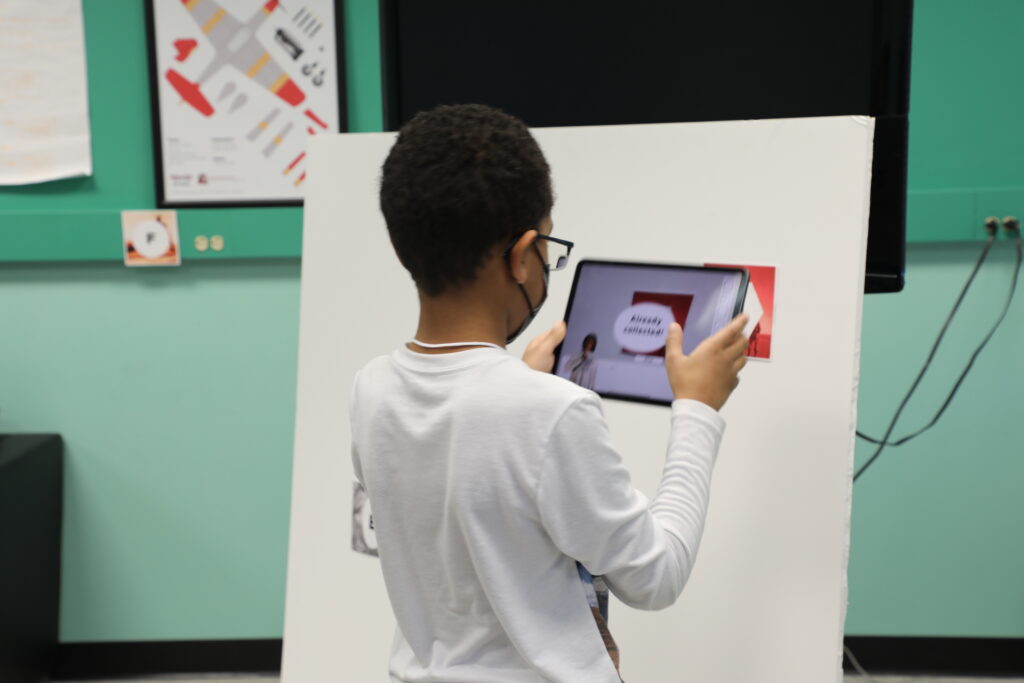

In the end, this turned out to be a great approach, because we gathered a lot of important information and feedback from the day’s two sessions. In both, we let the guests go through the experience with its physical materials (cards, sorting & assembly guide, Morse code guide) and the app on the tablet, with virtually no guidance. Afterwards, we asked them the following set of questions:

- If you had to describe this experience with 1 word, which word would you pick?

- On a scale of 1 to 5 (where 1 is the easiest activity you have ever done and 5 is the hardest), how would you rate the difficulty of this experience?

- On a scale of 1 to 5 (where 1 is the most boring activity ever and 5 is the most fun), how fun was this experience?

- On a scale of 1 to 5 (where 1 means you already knew all the information in this activity and 5 means you had never heard about any of these concepts), how would you rate the educational content in this activity?

- What was your least favorite part? What was your favorite part?

- What did you think of the character/narrator?

- What did you think about the app’s controls and style (UI)?

- Etc.

This was also a very successful day of playtesting! We got valuable feedback from our playtesters, and it confirmed that we’re going in the right direction. It was very exciting to see our guests enjoying the experience and mentioning that completing it as a team made it more engaging. They highlighted sorting the cards and deciphering Morse code as fun, puzzle-like activities, and their reactions when they saw the airplane fly showed their excitement.

Progress

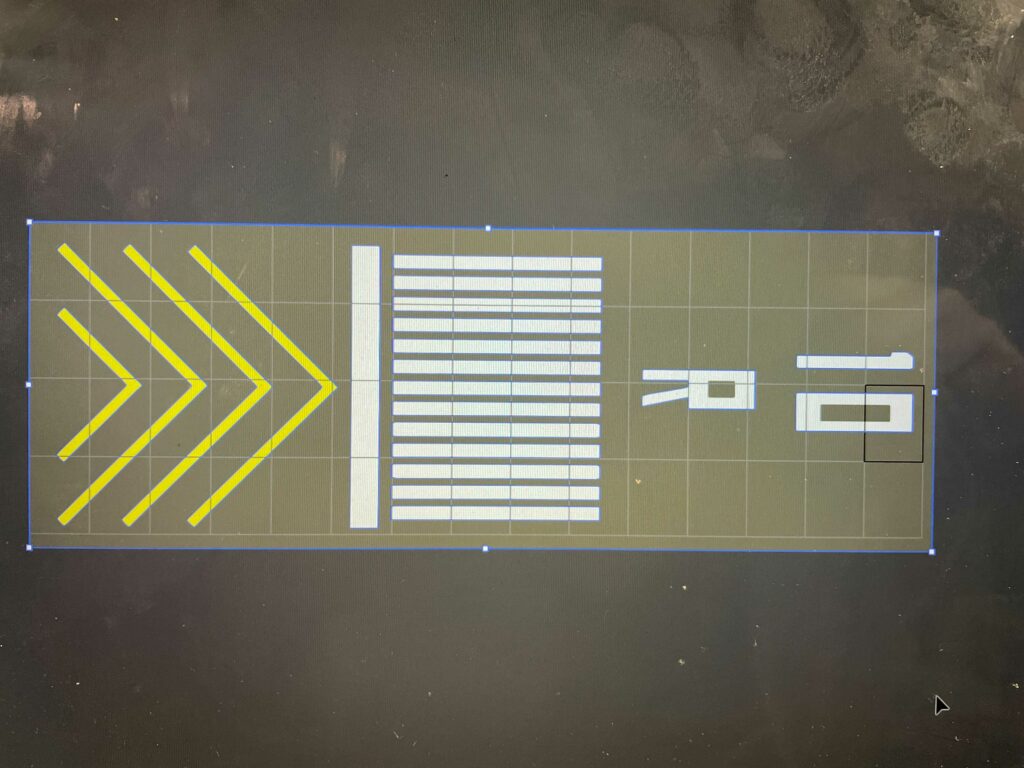

Two pieces of progress worth pointing out (among the many things we’ve been working on) are the completion of the P-51 Mustang 3D model by Xiaoying and the incorporation of the exhibit’s runway as a marker.

As a placeholder for our app prototypes, we had been using a free 3D model of the P-51 Mustang, but we soon realized that it wasn’t very well built. We wanted to use the model to animate the airplane, which meant Xiaoying would need to build her own. She completed it this week and it looks awesome! We’re just missing the texturing.

As for the runway marker, Eric had been working on having our app detect the runway that MuseumLab built in the aviation exhibit and use it as a marker to anchor the virtual airplane in the flight stage of our experience. This is a client requirement: the airplane should take off from and land on that feature of the space, which makes absolute sense.

We struggled with the recognition of this marker, and even reached out to our client to discuss other options. However, Eric and Jimmy kept working on fixes, and the issue is now resolved. We can scan the runway!