As mentioned in last week’s blog, this week’s work was heavily shaped by the feedback we received last Friday. The first comment that influenced our idea and design was the suggestion to focus primarily on the students who would likely not have the opportunity to control the tablets, and hence the AR side of things. After all, these kids would make up the majority of our guests. The second comment was the idea of incorporating asymmetrical cooperation into our experience. This describes a scenario in which different guests share the same final goal (cooperation) but the rule set is different for each of them (asymmetrical).

Idea & Iterations

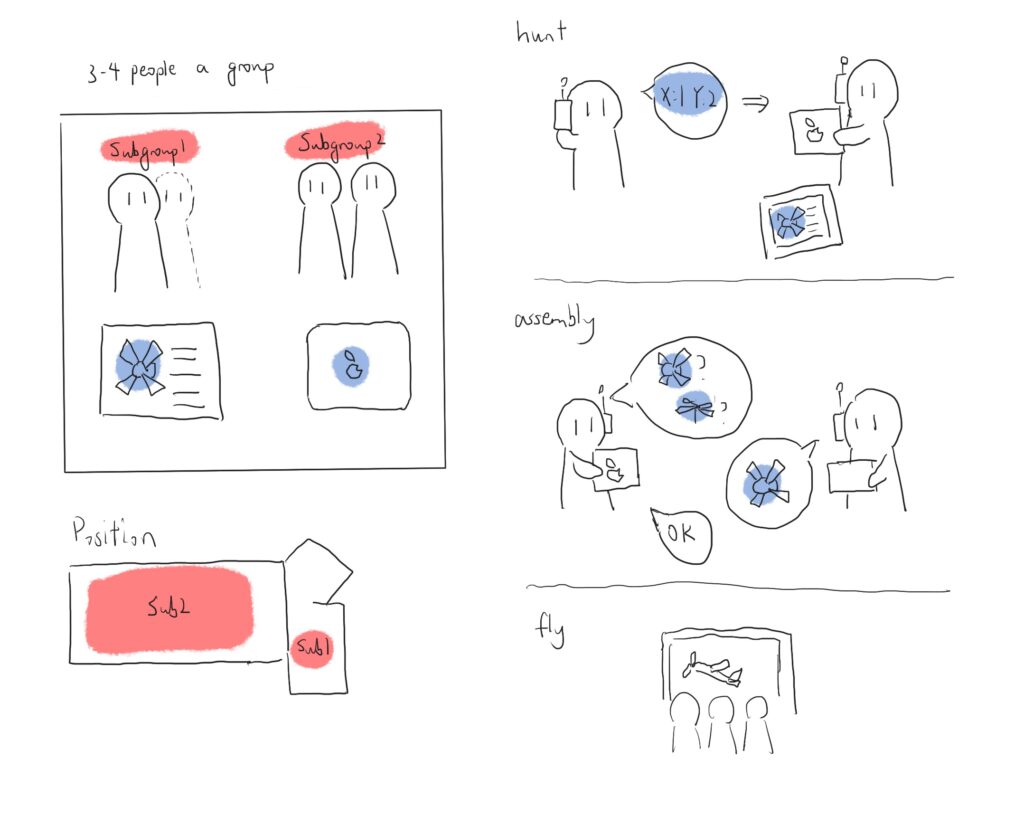

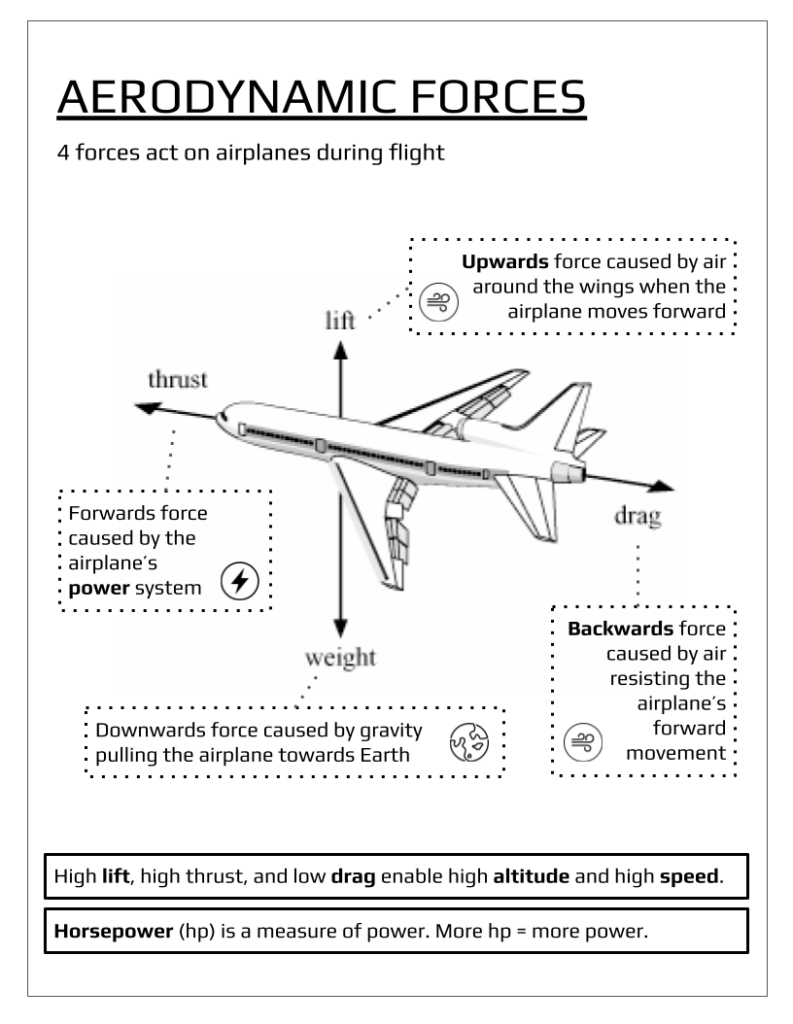

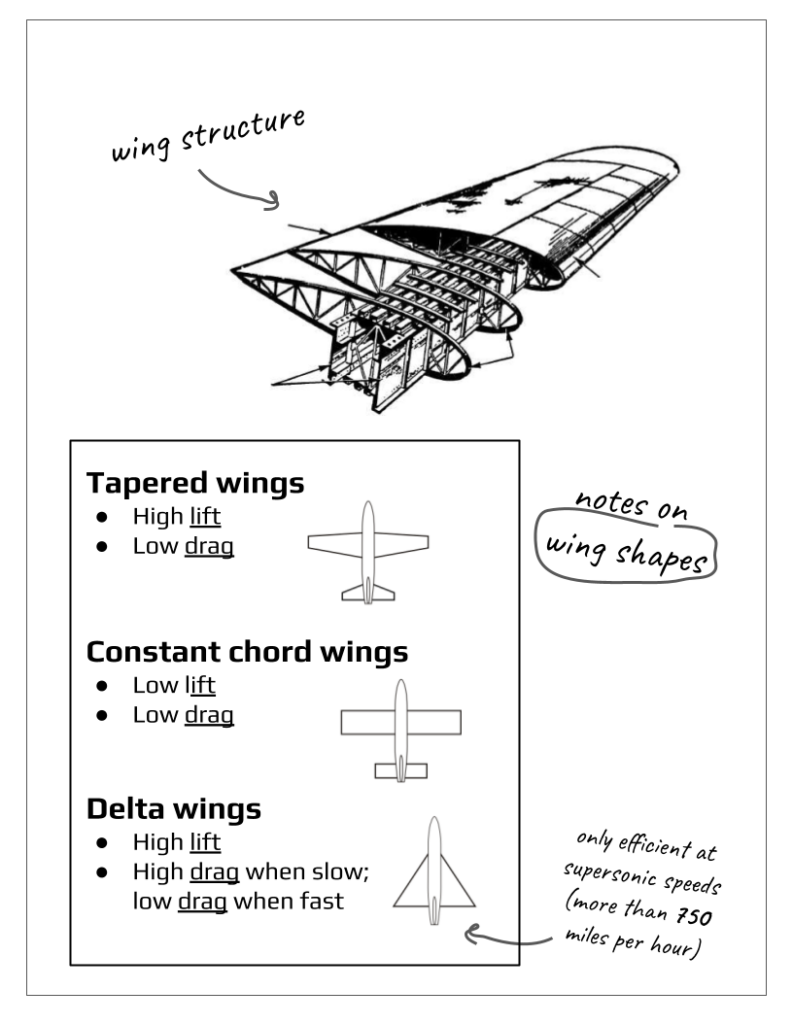

Taking this feedback into consideration, we began discussing the possibility of setting up the student teams with a specific role for each person, all within the world of aviation, to represent different career possibilities. We started with an aeronautical engineer and an aircraft mechanic: these two people could be handed physical objects containing specialized knowledge to help the tablet operator throughout the experience. These roles later evolved somewhat, and we are currently looking to have an aeronautical engineer, a physicist, and an air force historian. We also considered a version of the experience in which the whole group of students collaborates, but this was quickly dropped. Additionally, we considered using other devices, like walkie-talkies and a large screen in the space, but these are no longer part of our idea.

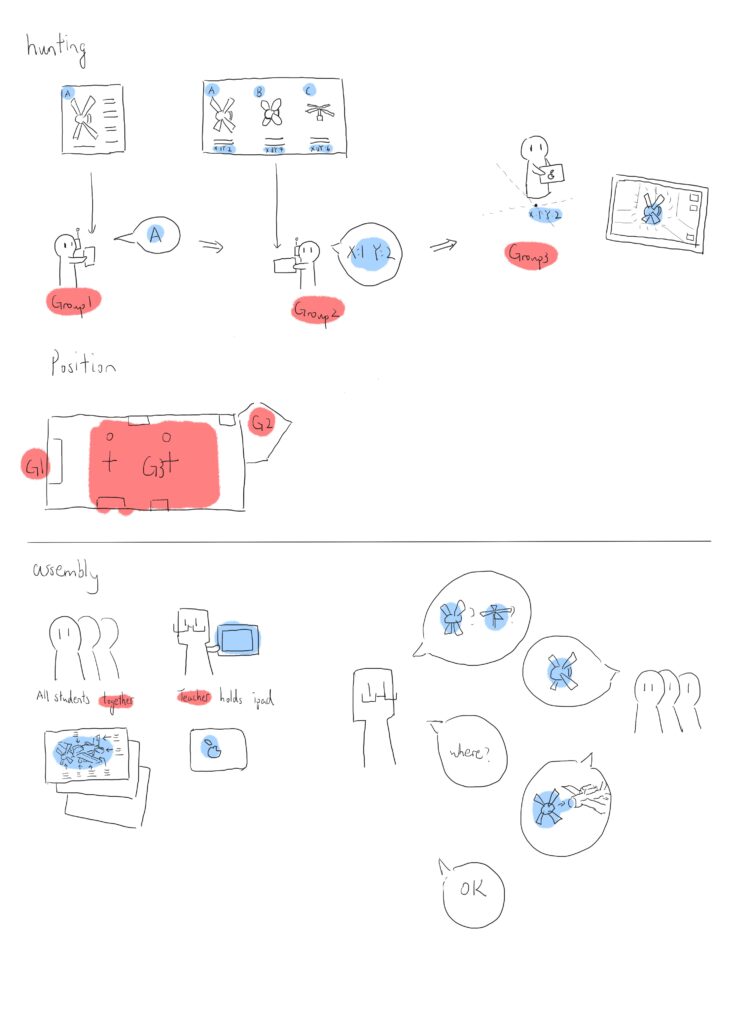

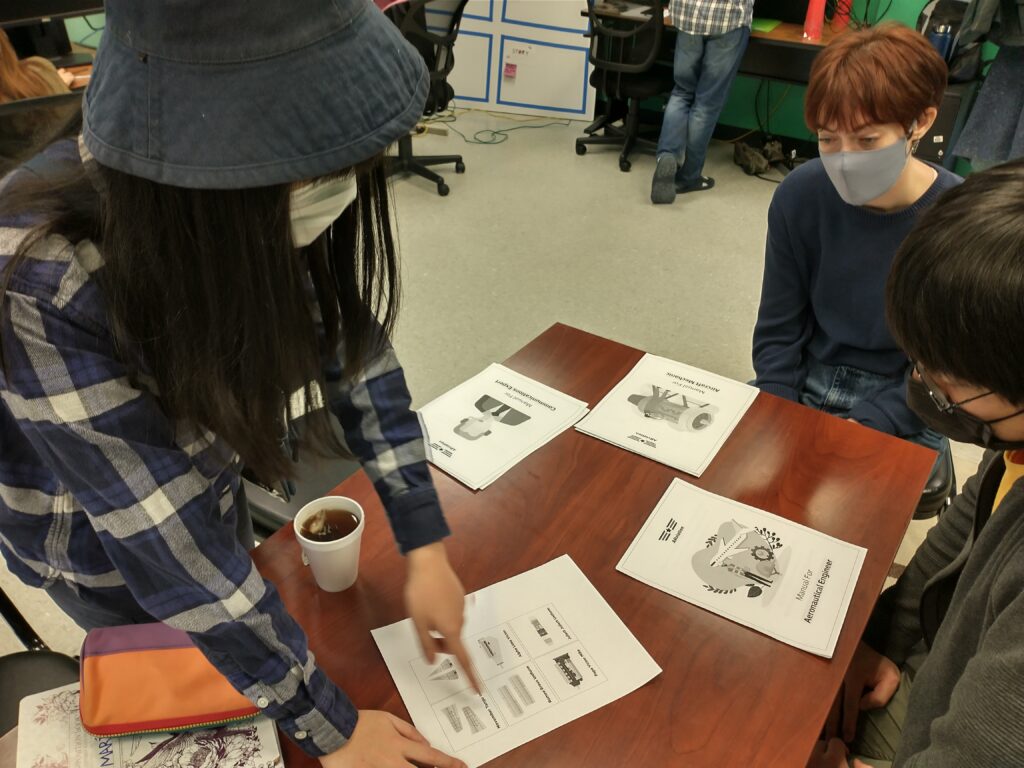

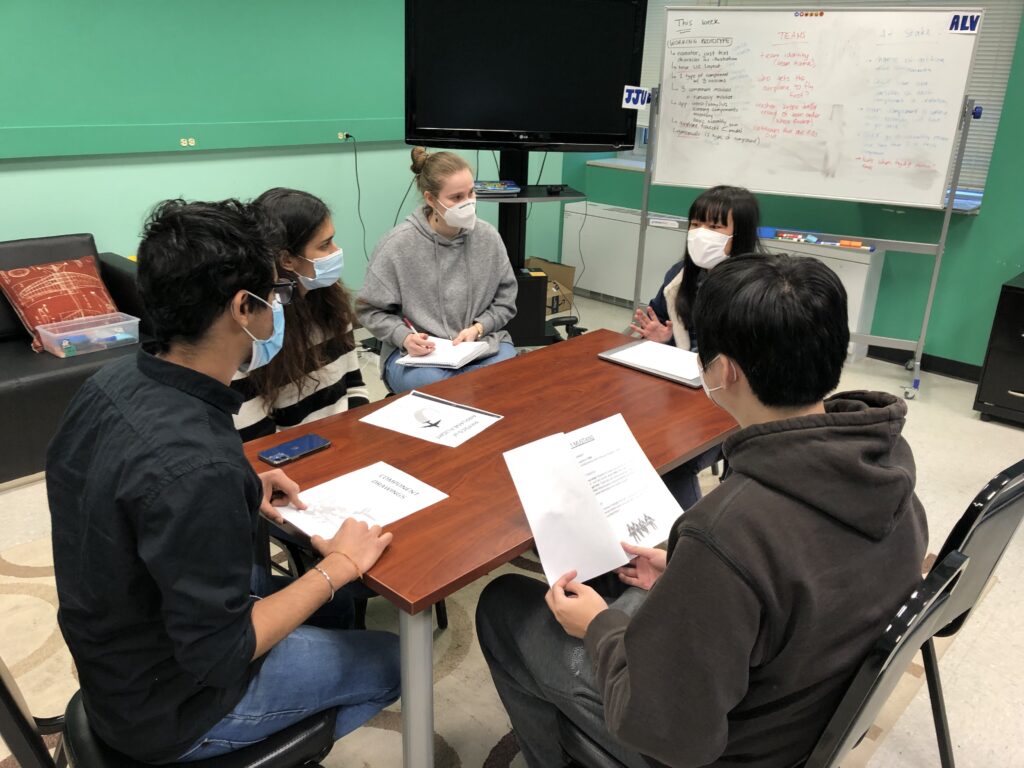

Early in the week, our instructors pointed out that it was urgent for us to settle on one experience design path and to make sure we all understood and agreed to it. Therefore, as a team, we carried out a “paper walkthrough” of the experience in our project room, using paper to represent the different physical objects and app screens a guest would see and use. The exercise was extremely useful: we had a chance to see what was confusing, what didn’t make sense, what worked, and what had to be reworked. In the end, we defined a flow through the experience that we all found good enough for our first prototype and for beginning playtesting.

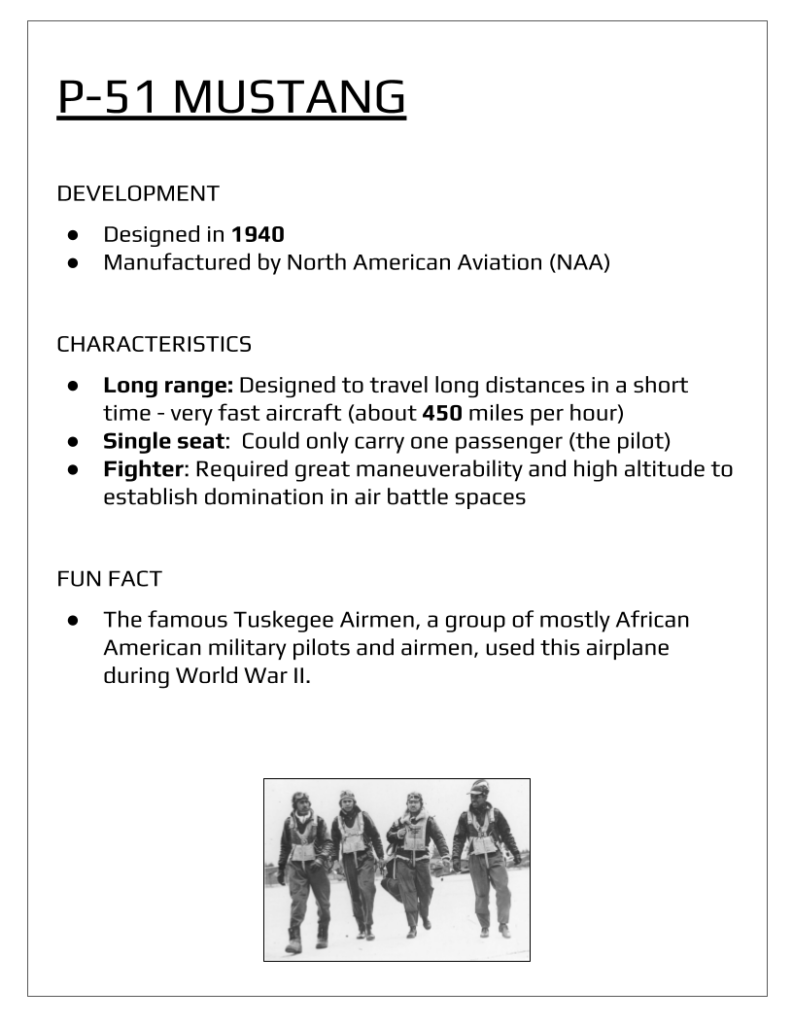

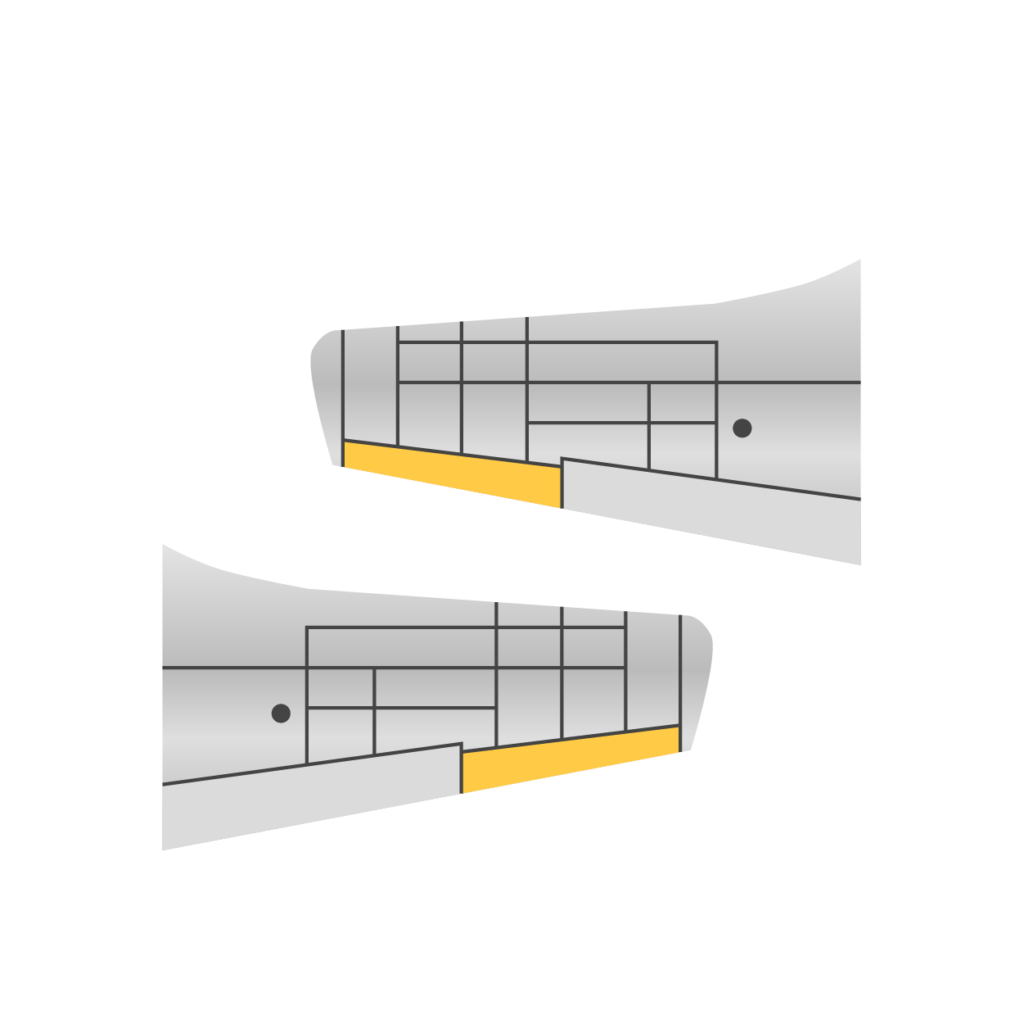

This led to the creation of our first sets of manuals for the guests, which were iterated twice during the week (the first, second, and third versions can be seen with the provided links). Alongside this, icons for components and 3D models were also created. For these first prototypes, we worked with wings and engines as the components to be found.

(third version)

(third version)

(third version)

(P-51 Mustang’s wing shape)

(P-51 Mustang’s wing shape)

Playtesting

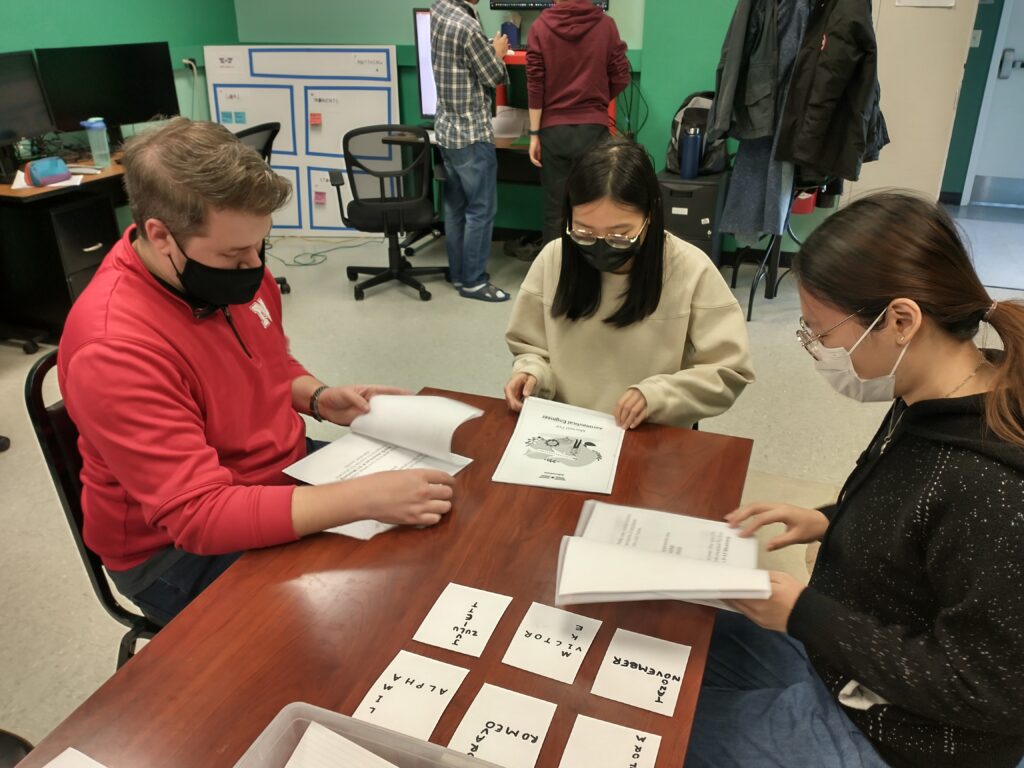

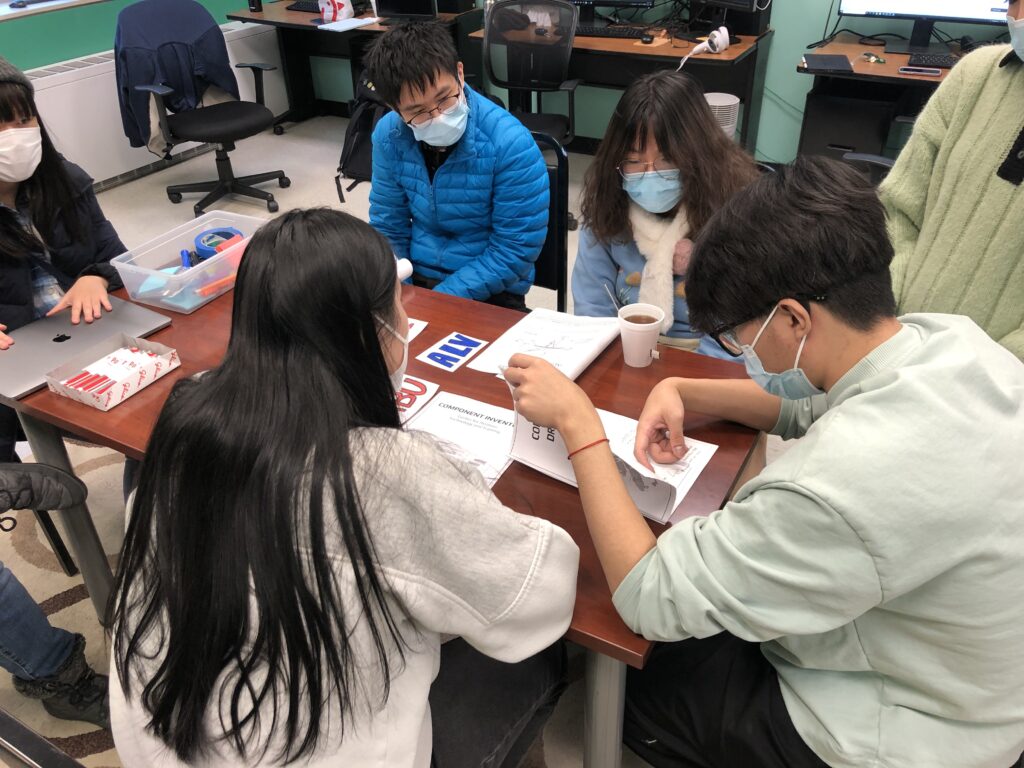

Since we already had first versions of the manuals and ideas for the markers, we carried out paper playtesting sessions this week, with fellow ETC students as our subjects. These sessions were used to test the cooperation and engagement of the guests when they are assigned different roles and given different, specialized information to find the airplane components. We haven’t integrated the AR with this yet, so the goal of our playtesters was to identify the right components from a variety of physical cards presented to them, which represented our future markers. By the end of the week, we had completed two days of playtesting, with two groups going through the experience each day. The playtests of the second day (in terms of the materials and their contents, the number of roles per group, and how the experience was presented) were greatly influenced by feedback from the first day.

Group 1 (with Ivy as facilitator)

Group 2

Group 1

Group 2 (with Ivy as facilitator, María taking notes)

These sessions were incredibly helpful (though, admittedly, also frustrating in some cases), as they showed us what wasn’t working in our design of this first part of the experience. They forced us to iterate on the design of each manual, the kind of content each should include, the design of the markers, and how the experience is introduced and explained to guests. We also identified what was working in terms of education, engagement, and cooperation, which was very rewarding for us. The highlights of this process were certainly exploring, testing, and iterating. Detailed notes from each session can be found in our playtesting logs.

App Prototype

The team has also made progress on the first prototype of the app, which is meant to cover the full experience. Basic wireframes for the UI of both the component hunting and the airplane assembly are ready, so they can later be implemented in Unity (where our programmers are building the app prototype).

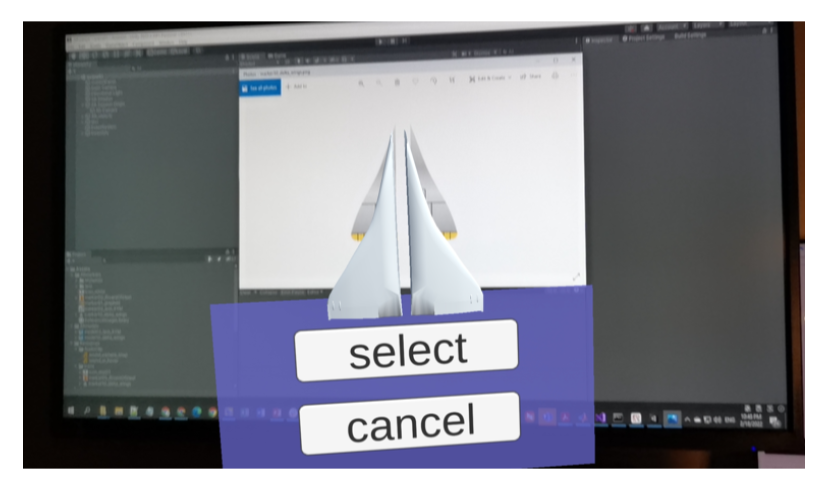

In terms of the app’s development, we have working prototypes of both the component hunting and the airplane assembly parts as well.

For the component hunting part, an inventory system has been implemented and integrated with the AR marker detection system. When a guest scans an AR marker, a 3D piece emerges and floats around the marker. The guest can interact with the object (a feature that is yet to be implemented) or put it in the inventory and continue searching for the next part. The guest can also remove any object from the inventory or re-take it as many times as needed. An integration of the 2D icon and 3D model for one type of wing we are using (delta wings) has been tested successfully. We also have a version of the assembly part, in which the user can drag and drop an object inside the virtual world. The goal is to implement the functions for attaching different components and rotating the user’s viewpoint before half presentations.
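To make the inventory behavior described above a bit more concrete, here is a minimal sketch of the take / remove / re-take logic. It is written in Python purely for brevity and is not our Unity code: the names `Component`, `Inventory`, `MARKERS`, and `scan`, as well as the marker IDs, are assumptions made up for this illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Component:
    """A piece revealed by scanning a marker (hypothetical structure)."""
    marker_id: str
    name: str


@dataclass
class Inventory:
    """Holds the components a guest has collected, keyed by marker ID."""
    items: dict = field(default_factory=dict)

    def take(self, component):
        # Taking a piece that is already stored simply keeps one copy.
        self.items[component.marker_id] = component

    def remove(self, marker_id):
        # Returns True if something was removed, False if it was not there.
        return self.items.pop(marker_id, None) is not None

    def names(self):
        return sorted(c.name for c in self.items.values())


# Hypothetical marker table; the real markers and IDs are not shown here.
MARKERS = {
    "marker-01": Component("marker-01", "delta wing"),
    "marker-02": Component("marker-02", "engine"),
}


def scan(marker_id):
    """Stand-in for AR marker detection: returns the component or None."""
    return MARKERS.get(marker_id)
```

In this sketch, a guest who scans a marker calls `take` on the result, can `remove` it later, and can scan the same marker again to re-take it as many times as needed.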