Character & Mocap

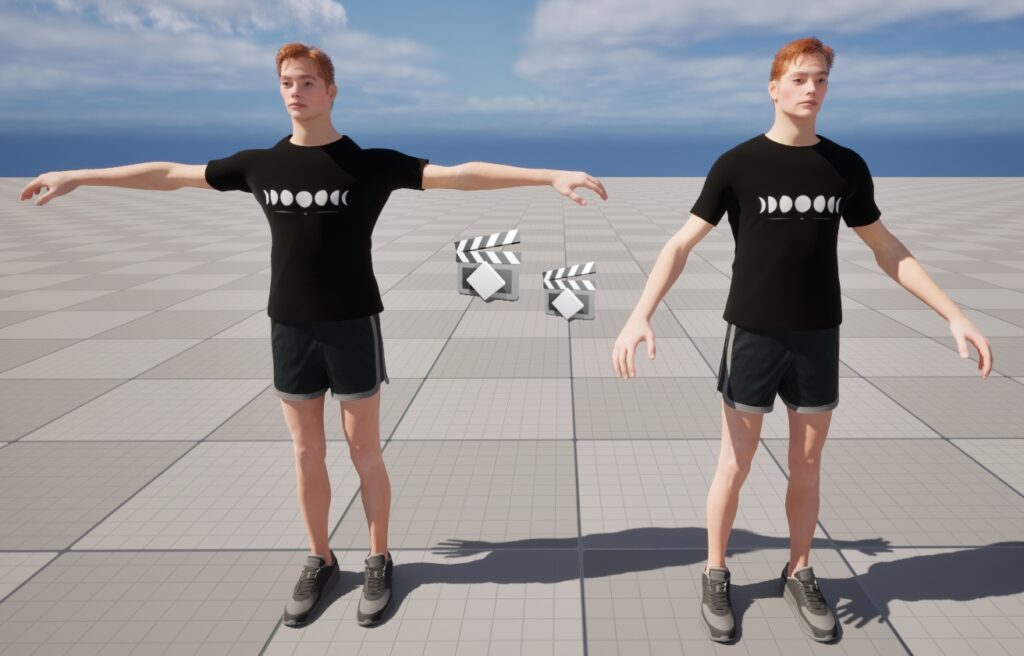

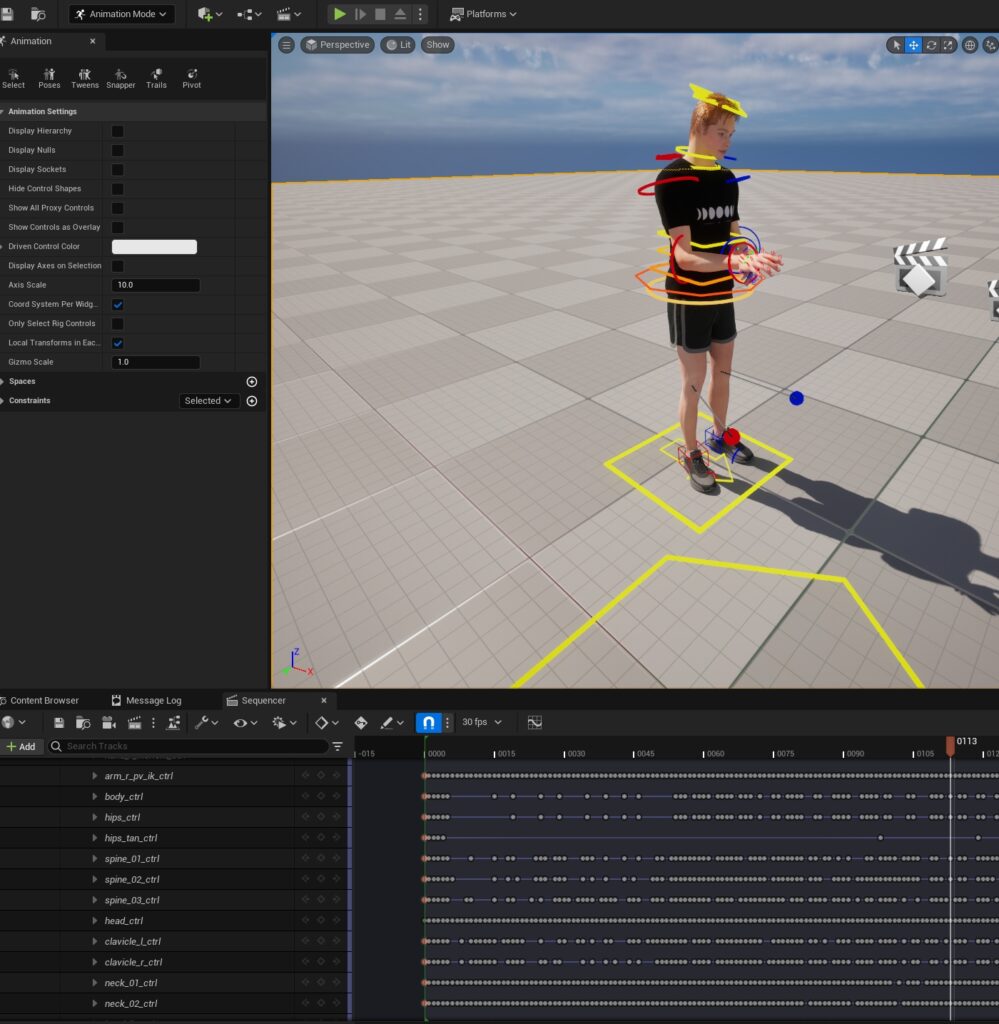

Because one of our own characters' base models has now been fully modeled and rigged with UE5's MetaHuman, we were able to use our own model to test the draft mocap pipeline, which we had laid out the previous week using free online models.

Draft mocap pipeline: MotionBuilder (clean and retarget mocap data) -> Maya (edit animation) -> UE5 (render animation).

MetaHuman is an official UE5 feature. Its rig controls work well in UE5, but it is not possible to export the rig controls to Maya.

In addition, we found that Maya has the same basic mocap functionality as MotionBuilder, which is sufficient for the level of complexity we need.

As a result, we modified and finalized our mocap pipeline.

Finalized mocap pipeline: UE5 (model and rig character, export skeleton) -> Maya (clean and retarget mocap data) -> UE5 (edit and render animation).

Shader

This week, we developed the oil painting and glitch shaders, which could be used for the ADHD perspective.

For the oil painting shader, we explored the Houdini-to-Unreal pipeline for importing digital assets. We added brush pieces in Houdini using a geometry node (e.g., on the duck model, which can be replaced with any model in Unreal) and applied a toon shader in Unreal. The oil painting style can be adjusted in Unreal by modifying the brush shape, as well as the number and size range of the brush pieces.

For the glitch shader, we adapted it to resemble the style of Spider-Man: Across the Spider-Verse. Attached is a GIF showcasing the shader. The glitch speed, overlay texture, and piece shapes can be adjusted later.

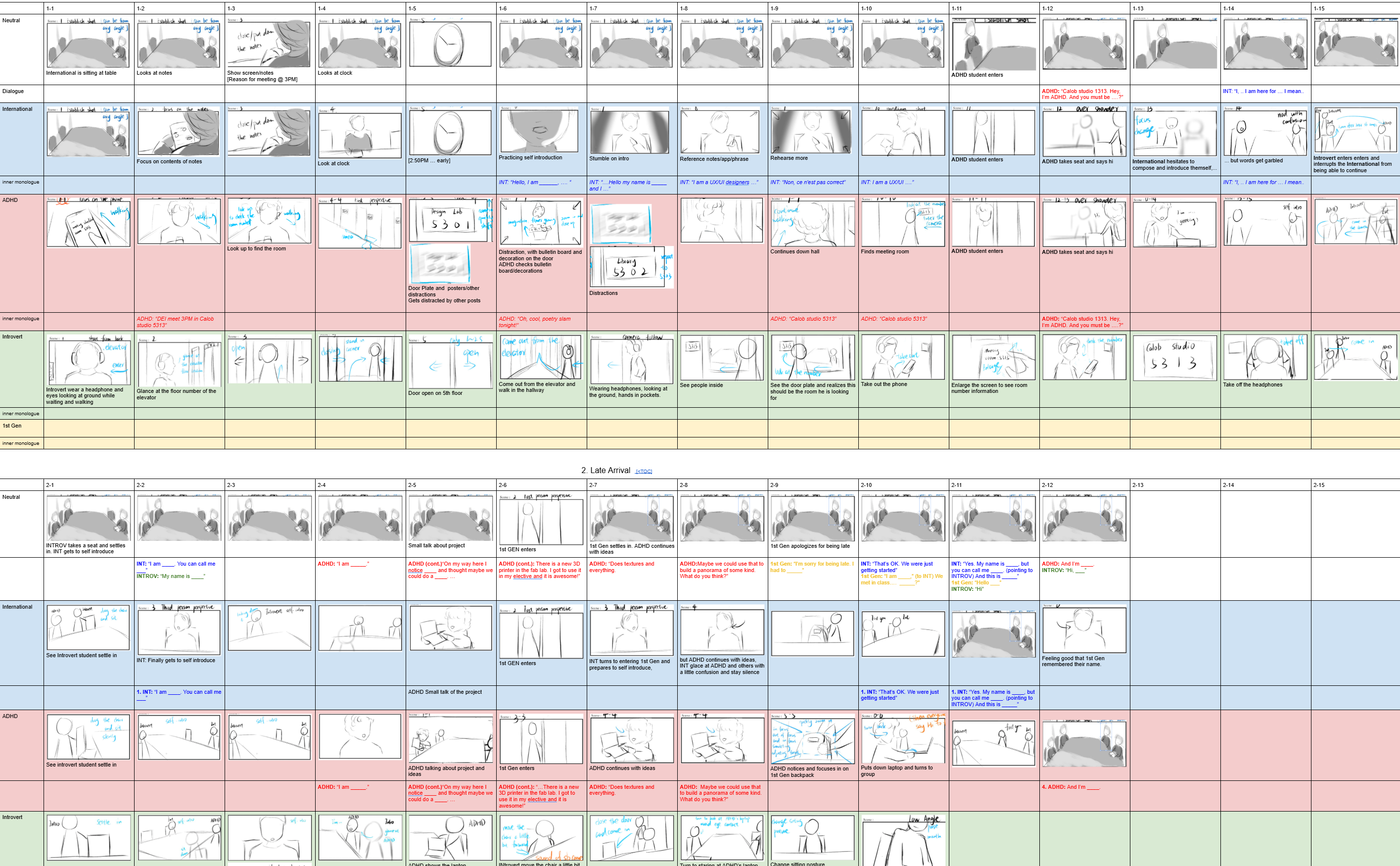
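To make the adjustable parameters more concrete, here is a minimal Python sketch of one common glitch technique: shifting horizontal bands of an image sideways by an offset that changes over time. This is not our Unreal shader. The function name `glitch_rows` and the parameters `speed`, `band_height`, and `max_shift` are hypothetical stand-ins that only loosely mirror the glitch speed and piece shapes mentioned above.

```python
import random


def glitch_rows(image, time, speed=1.0, band_height=2, max_shift=3, seed=0):
    """Return a copy of a 2D pixel grid with horizontal bands shifted sideways.

    image       -- list of rows, each row a list of pixel values
    time        -- current time in seconds
    speed       -- glitch ticks per second (hypothetical "glitch speed")
    band_height -- rows per band (hypothetical "piece shape" control)
    max_shift   -- largest sideways offset, in pixels
    """
    tick = int(time * speed)  # offsets change once per glitch tick
    out = []
    for y, row in enumerate(image):
        band = y // band_height
        # Seed per (tick, band) so every row in a band moves together
        # and the same inputs always give the same frame.
        rng = random.Random(f"{seed}:{tick}:{band}")
        shift = rng.randint(-max_shift, max_shift)
        k = shift % len(row) if row else 0
        # Rotate the row right by k pixels, wrapping around the edge.
        out.append(row[-k:] + row[:-k] if k else list(row))
    return out


# Example: a 4x6 grid where each row counts 0..5.
frame = glitch_rows([list(range(6)) for _ in range(4)], time=0.5, speed=4.0)
```

Raising `speed` makes the offsets change more often, and a larger `band_height` produces thicker displaced pieces.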

Storyboards

The dialogue has been written, and the boards for the four versions have been matched in a form. With the full story done, the gaps between important events have been filled, and the story now has continuity.

The storyboards for the international and ADHD perspectives have been polished. We had another meeting with our advisor Ayana and got advice from her on the First-gen perspective. The introvert version is in progress and nearly finished; the First-gen version will be finished next week.

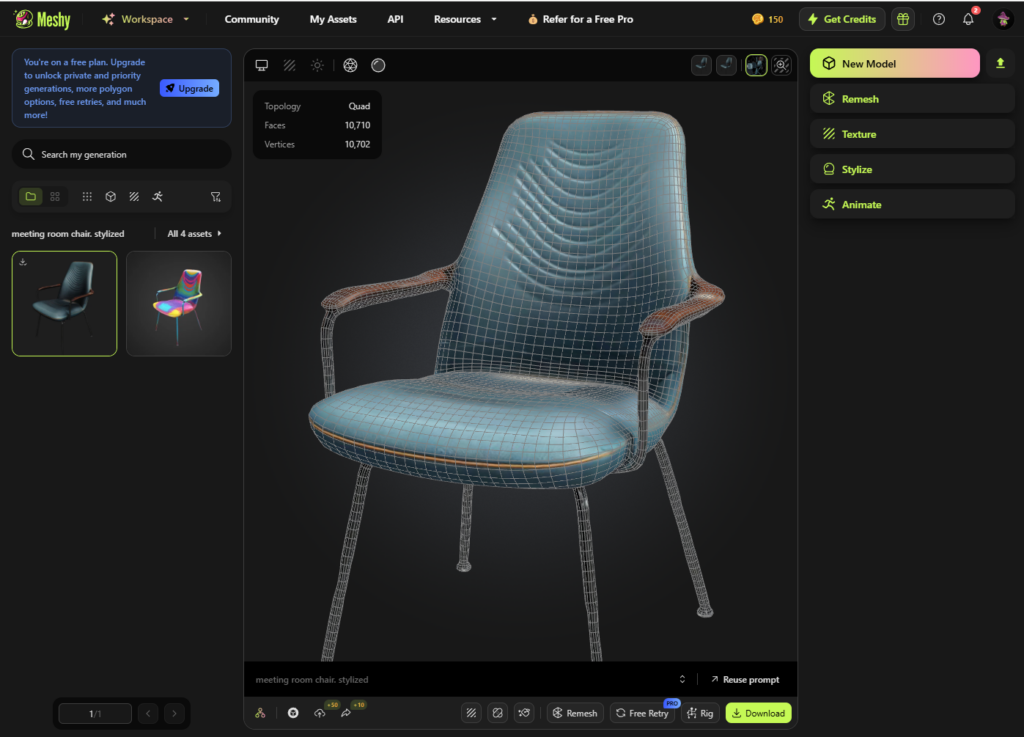

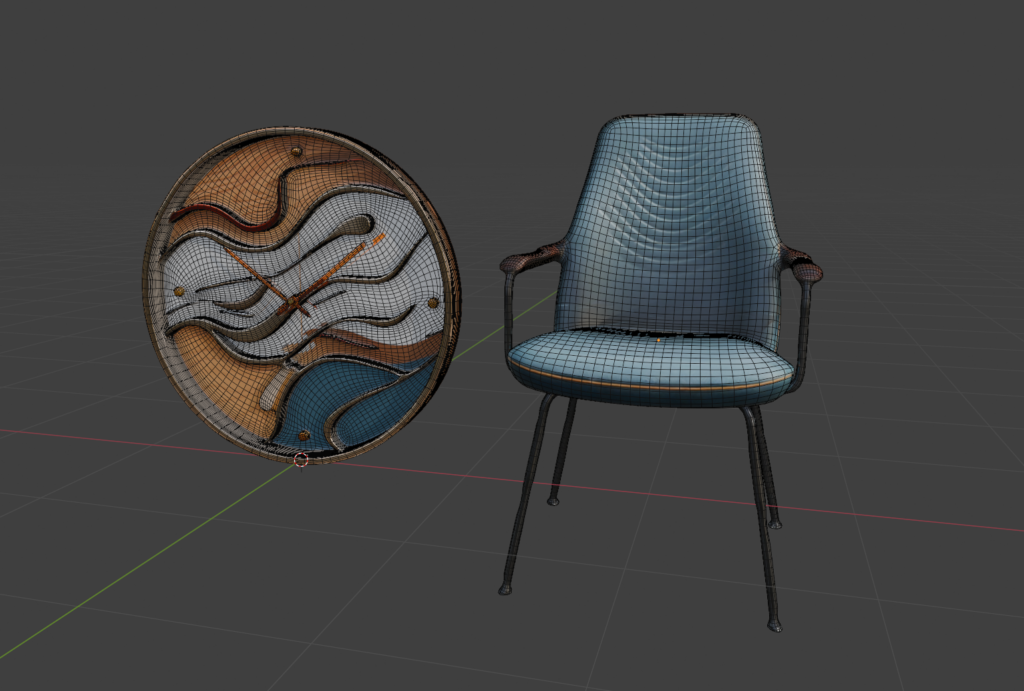

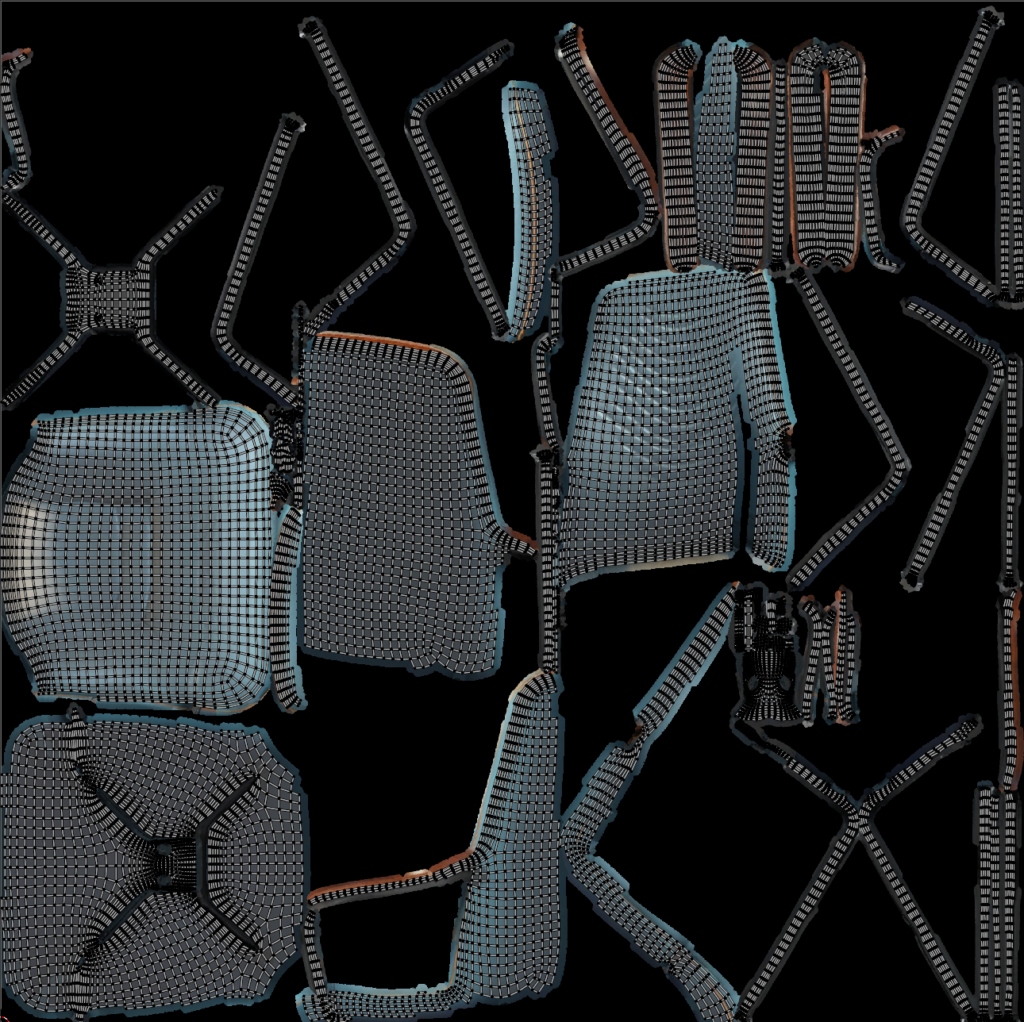

AI tools for 3D Assets

We want some of our models to be made with AI to improve efficiency. We explored different AI tools for generating 3D environment assets and decided to use Midjourney and Meshy. We are applying for licenses.

The wireframes and UVs of the AI-generated assets are usable but need to be cleaned up. The textures and materials are not satisfactory, so we will fix or remake them ourselves.

3D asset pipeline: Midjourney (prompt to image) / Meshy (prompt to image) → Meshy (image to model) → Maya/Blender (clean up wireframes and UVs) → Substance Painter (fix and remake textures and materials) → UE (environment)

Website

The Colleido website has been set up.

Cinematography

We continued working on the international student camera shots.