Over the past fourteen weeks, the Haptic Wave team embarked on an ambitious, exploratory journey. We set out to investigate the intersection of haptics and audio, but what we gained stretched far beyond technical know-how: the project was also an exercise in collaboration, communication, and navigating the unknown.

Expanding Sensory Boundaries

At its core, Haptic Wave was an inquiry into how we could tell stories and enable navigation experiences without relying on visuals. Our guiding questions were clear:

- How do we successfully design audio- and haptic-driven wayfinding mechanics while keeping visuals to a minimum?

- How can we craft unique experiences that communicate a story using only audio and haptics?

These two pillars framed every decision we made.

The Village Behind the Project

This project could not have existed without the remarkable community that surrounded us. We collaborated with external programmers, consulted a brilliant subject-matter expert, and leaned heavily on the advice and encouragement of dedicated faculty members. Navigating tight deadlines tested our interpersonal communication skills, especially since none of us came into the semester with prior experience in audio engineering or haptic design. Learning to communicate clearly, set realistic expectations, and manage an evolving production pipeline was as valuable as the prototypes we created.

Our Prototyping Process

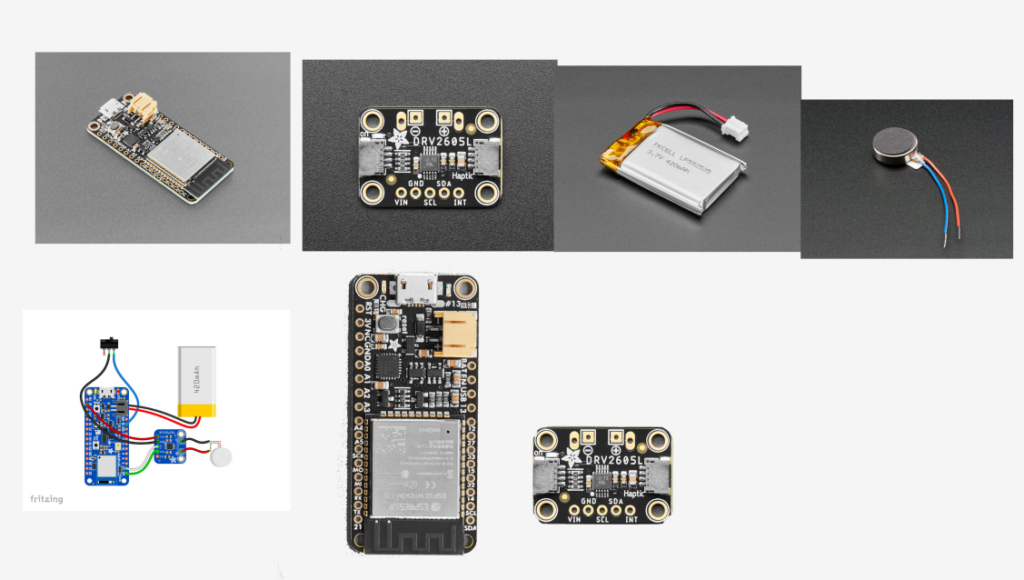

We taught ourselves about sound frequencies and how they translate into haptic sensations, experimented with audio design techniques such as binaural and spatial audio, and ultimately built a quadraphonic six-channel setup in Reaper. We also tested a variety of haptic devices, including Meta Quest controllers, bass shakers, and custom-built microcontroller rigs.

Our work culminated in two prototypes:

1. Audio- and Haptic-Driven Navigation Prototype

We designed a first-person VR game to explore how sighted users would navigate a space using only sound and haptic cues. The core mechanics included:

- Sound Beacon: A directional sound emitted from the destination point to guide users.

- Echo Navigation: Players sent out a sound probe to detect invisible obstacles and received haptic feedback when the path ahead was blocked.
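To make the two mechanics concrete, here is a minimal Python sketch on a flat 2D floor plan. It is not the team's Unity code: the names `Circle`, `beacon_cue`, and `echo_probe` are hypothetical, and the stereo pan law, the inverse-distance rolloff for the beacon, and the linear distance-to-intensity mapping for the haptic pulse are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Circle:
    """Hypothetical round obstacle on a 2D floor plan (units are arbitrary)."""
    x: float
    y: float
    radius: float


def beacon_cue(px, py, facing_rad, bx, by, ref_dist=1.0):
    """Return (pan, gain) for a beacon at (bx, by) heard by a player at (px, py).

    pan runs from -1 (hard left) to +1 (hard right); gain falls off as
    ref_dist / distance once the player is farther away than ref_dist.
    """
    dx, dy = bx - px, by - py
    dist = math.hypot(dx, dy)
    # Beacon bearing relative to the player's facing, wrapped to [-pi, pi].
    rel = math.atan2(dy, dx) - facing_rad
    rel = math.atan2(math.sin(rel), math.cos(rel))
    # Positive angles are counter-clockwise (to the player's left), so negate.
    pan = -math.sin(rel)
    gain = ref_dist / max(dist, ref_dist)
    return pan, gain


def echo_probe(px, py, facing_rad, obstacles, max_range=5.0):
    """Cast a straight probe ahead and return a haptic intensity in [0, 1].

    1.0 means the player is touching an obstacle, 0.0 means nothing was hit
    within max_range; intensity rises linearly as the hit gets closer.
    """
    dx, dy = math.cos(facing_rad), math.sin(facing_rad)  # unit direction
    nearest = None
    for ob in obstacles:
        # Solve |p + t*d - center|^2 = r^2, i.e. t^2 + 2*b*t + c = 0.
        ox, oy = px - ob.x, py - ob.y
        b = ox * dx + oy * dy
        c = ox * ox + oy * oy - ob.radius ** 2
        if c <= 0:
            t = 0.0  # player is already inside or touching this obstacle
        else:
            disc = b * b - c
            if disc < 0:
                continue  # probe misses this obstacle
            t = -b - math.sqrt(disc)
            if t < 0:
                continue  # obstacle lies behind the player
        if t <= max_range and (nearest is None or t < nearest):
            nearest = t
    if nearest is None:
        return 0.0
    return 1.0 - nearest / max_range
```

A pure left/right pan like this gives the same cue for a beacon directly ahead and one directly behind; HRTF-based spatial or binaural rendering is the usual way to resolve that front/back ambiguity.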

We iteratively reduced visuals — first hiding obstacles, then eliminating all visual elements — forcing players to fully rely on sound cues. Through extensive playtesting, we discovered that simplifying level design (starting with cubes and spheres) made navigation clearer and less overwhelming. Later, we layered in complexity with additional obstacles.

Key discoveries:

- Distinct, exaggerated sounds were critical for clarity.

- Overstimulating or repetitive sounds were quickly fatiguing.

- Introducing footstep sounds helped players perceive their own movement, a surprisingly necessary addition.

2. Spatial Audio and Haptic Floor Sensory Experience

For our second prototype, we constructed a 4 ft × 4 ft haptic floor using bass shakers and combined it with a four-directional speaker setup. Our goal was to explore narrative storytelling through pure sound and touch.
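Moving a sound, such as a stampede, around a four-speaker rig comes down to panning. The sketch below assumes the speakers sit at the four corners of the floor and applies a standard equal-power (sine/cosine) pan law on each axis, so total power stays constant wherever the source is placed. The function name `quad_gains` and the corner layout are hypothetical; this is an illustration, not the routing the team built in Reaper.

```python
import math


def quad_gains(x, y):
    """Equal-power gains for a hypothetical corner-mounted 4-speaker layout.

    x runs from -1 (left) to +1 (right); y runs from -1 (rear) to +1 (front).
    Returns gains for (front_left, front_right, rear_left, rear_right).
    """
    # Clamp the source position to the floor area.
    x = max(-1.0, min(1.0, x))
    y = max(-1.0, min(1.0, y))
    # Map each axis to an angle in [0, pi/2] and split power with cos/sin,
    # so left^2 + right^2 == 1 and front^2 + rear^2 == 1.
    ax = (x + 1.0) * math.pi / 4.0
    ay = (y + 1.0) * math.pi / 4.0
    left, right = math.cos(ax), math.sin(ax)
    rear, front = math.cos(ay), math.sin(ay)
    return (front * left, front * right, rear * left, rear * right)
```

Animating `y` from -1 to +1 over a few seconds would sweep a sound from the rear speakers to the front while its overall loudness stays steady.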

We began with nature soundscapes (jungle animals, birds, and a dramatic stampede), testing how haptics could enhance immersion. During playtests, guests imagined a wide range of environments, from volcanoes to subways to campsites, which showed us that even a minimal narrative context was essential to guide interpretation.

We then evolved the prototype into a meditation simulation pod with a light narrative:

- A calm, AI-guided nature soundscape that gradually “glitched” into more complex, busy sound environments.

- Haptic vibrations synchronized to the soundscape, designed to evoke emotion and sensation.
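One common way to keep floor vibrations locked to a soundscape is to derive the shaker feed from the audio itself. The sketch below assumes that approach. A one-pole low-pass filter isolates the low-frequency band that bass shakers reproduce well (the 80 Hz cutoff is an arbitrary illustrative value). An envelope follower then turns that band into a 0 to 1 intensity for actuators that take an amplitude rather than an audio signal, such as controller rumble. The function names are hypothetical, and this is not necessarily how the team's prototype was wired.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """One-pole IIR low-pass: keeps the rumble, attenuates the high end."""
    a = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for s in samples:
        y = (1.0 - a) * s + a * y
        out.append(y)
    return out


def envelope(samples, attack_ms, release_ms, sample_rate):
    """Peak envelope follower: fast rise, slower decay, output is never negative."""
    att = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    rel = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    env, out = 0.0, []
    for s in samples:
        level = abs(s)
        coeff = att if level > env else rel  # rising uses attack, falling uses release
        env = coeff * env + (1.0 - coeff) * level
        out.append(env)
    return out


def haptic_drive(samples, sample_rate, cutoff_hz=80.0, gain=1.0):
    """Hypothetical helper: envelope of the low band, clipped to [0, 1]."""
    low = one_pole_lowpass(samples, cutoff_hz, sample_rate)  # also usable as a shaker feed
    env = envelope(low, attack_ms=5.0, release_ms=120.0, sample_rate=sample_rate)
    return [min(1.0, gain * e) for e in env]
```

Because both outputs are computed from the mix itself, every sonic event in the low band produces a matching vibration without hand-authored timing. Aligning haptics with emotional beats that have no low-frequency content would still need separately authored cues.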

Key considerations we developed:

- Layer sounds gradually to aid mental visualization.

- Use recognizable, stereotypical sounds for quicker participant recognition.

- Align haptic feedback with emotional beats and sonic events.

- Balance narrative dialogue with sensory immersion — “show, don’t tell.”

Lessons in Production

Beyond design, we gained invaluable production lessons:

- Working asynchronously with external programmers taught us to over-communicate details and adjust deliverable expectations, as external workflows differ from ETC norms.

- Discovery-driven projects require structured flexibility — balancing open-ended exploration with clear milestones to stay on track.

- Rapid iteration and constant playtesting informed every pivot we made.

Team Takeaways

Entering the semester, none of us were audio or haptics experts. By the end, we had successfully built two functioning prototypes, deepened our technical understanding, and most importantly, learned how to prototype in an unfamiliar medium — a skill that will serve us long after this project.

Looking Forward

We compiled our findings into a documentation package for future ETC students and potential employers, outlining:

- What worked and what didn’t

- Design decisions and rationale

- Playtest insights and future directions

For those exploring audio and haptic design, we believe this space holds enormous potential for inclusivity. While our project wasn’t explicitly accessibility-focused, creating experiences built on non-visual modalities opens up pathways to richer, more accommodating interactions for diverse audiences.

Future Possibilities

If we had additional time to iterate, here’s where we would go next:

For Audio-Driven Navigation

- Integrate Unity Analytics to track player patterns

- Experiment with new audio interaction techniques

- Build a full game loop

- Integrate directional haptic cues, not just feedback

For the Sensory Experience

- Collaborate with experienced sound designers

- Validate ease-of-use and build processes

- Integrate interactive elements via Unity

- Add wearable haptics (e.g., haptic vests)

Final Thoughts

Haptic Wave taught us that there is no one-size-fits-all solution in haptic and audio design. People instinctively seek visual cues, so thoughtful sound and touch design is required to bridge that gap. Building custom devices, embracing flexibility, and always making time to “just try” were keys to our success.

We’re excited to see how future designers will expand this space — and we’re proud to have contributed to its foundation.