Welcome back to our development blog! We are now in week 11, just one week away from our soft opening.

Client Meeting:

Some of the talking points included:

- Consider the speed, size, and positioning of the beat object approaching the car relative to how long players need to recognize it.

- Give the transition more detail. How does the transition tie in to the movement of the vehicle?

- The beat objects look a little too small.

- The platform (simulator rig) is great, but consider a more practical platform, like a mobile device.

- This would be most useful for approaching OEMs as of now, as the concept we are creating is still years away.

- Good approach to 2D flat facades on buildings for holograms

- Consider multi-modal interactivity

- Kinect: use pre-made scripts for gesture recognition

- Sensors

- Think about air circulation and ventilation in the room

- Add fans to help keep the temperature low

Design Update:

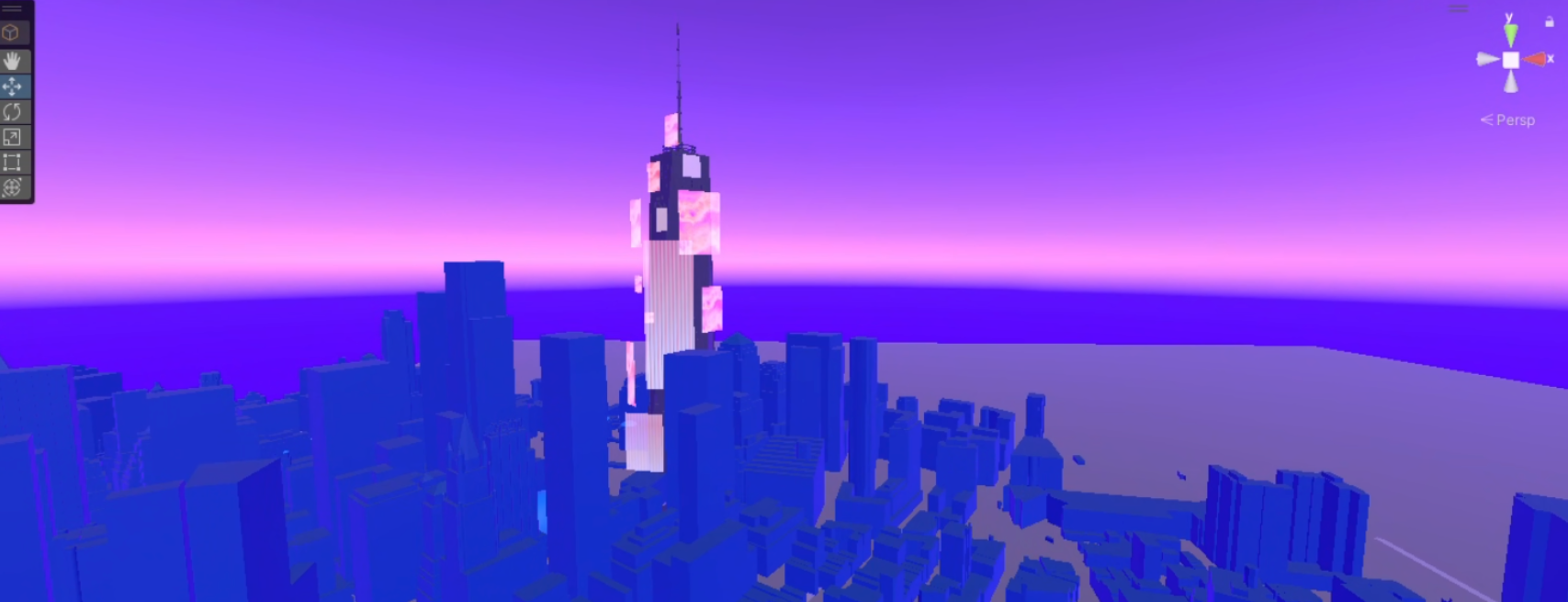

Our designers are working on populating the virtual NYC scene. Instead of creating brand new textures for individual buildings, we’ve decided to add 2D videos that create a hologram-like effect on the buildings. This lightens the workload while creating engaging elements that work well with the cyberpunk-like theme.

Tech Update:

Since our technical challenges last week, we discovered that the nativeless version of DryWetMidi (the plug-in we had originally used on Windows) works with the Linux-based Isotope. The issue here was that, without a MIDI playback device, we had to rely on a separate .wav or .mp3 file to play the music, and hope that Unity would keep it perfectly synchronized with the MIDI file that is supposed to generate the beat objects.

Unfortunately, there were a lot of discrepancies, and they were not consistent. This meant that we could not practically create a game system where the beats and the music were perfectly in sync, which is a critical component of any rhythm game.

The good news was that we were able to get the MIDI files read on Linux, and we could deliver a build that we used for the Playtest Day.
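To illustrate the kind of sync problem described above, here is a minimal sketch of one common approach: precompute each beat's time in seconds from the MIDI data, then spawn beat objects based on the audio playback position rather than on frame time. This is not our actual implementation. It assumes a single constant tempo, and the names `build_schedule`, `beats_to_spawn`, and `travel_seconds` are made up for this example. In Unity, the audio position would come from the engine's audio clock; here it is just a number passed in.

```python
from dataclasses import dataclass

MICROSECONDS_PER_SECOND = 1_000_000


def ticks_to_seconds(ticks, tempo_us_per_quarter, ticks_per_quarter):
    """Convert a MIDI tick position to seconds, assuming one constant tempo."""
    return ticks * tempo_us_per_quarter / (ticks_per_quarter * MICROSECONDS_PER_SECOND)


@dataclass
class Beat:
    hit_time: float    # seconds into the song when the player should press
    spawn_time: float  # seconds into the song when the object should appear


def build_schedule(note_ticks, tempo_us_per_quarter, ticks_per_quarter, travel_seconds):
    """Build a spawn schedule from note positions given in MIDI ticks.

    travel_seconds (hypothetical parameter) is how long a beat object takes
    to fly from its spawn point to the car.
    """
    schedule = []
    for ticks in sorted(note_ticks):
        hit = ticks_to_seconds(ticks, tempo_us_per_quarter, ticks_per_quarter)
        schedule.append(Beat(hit_time=hit, spawn_time=hit - travel_seconds))
    return schedule


def beats_to_spawn(schedule, next_index, audio_position_seconds):
    """Return the beats that are due at the current audio position.

    Also returns the updated cursor so each beat is spawned only once.
    """
    due = []
    while (next_index < len(schedule)
           and schedule[next_index].spawn_time <= audio_position_seconds):
        due.append(schedule[next_index])
        next_index += 1
    return due, next_index
```

Because every decision is driven by the audio position, a slow frame only delays a spawn slightly instead of letting the beats and the music drift apart over time. The `travel_seconds` value also relates to the client's point about recognition time: it is the window players get to see a beat object before they have to hit it.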

Hardware Update:

We cleaned up the platform, taping down all the cables to prevent testers from tripping over them and to make opening and closing the door more seamless.

We’ve also installed a temporary “center console” made out of foam board to mount the buttons on. This also acted as a piece of furniture that added to the realism of the in-car experience.

Playtest Day:

Despite persistent sync issues, we pushed ahead with playtesting. While we were unable to get feedback on the gameplay itself, we were able to showcase the concept and get a sense of what people found exciting and what more they wanted to see.

The most obvious point of feedback was the lack of clarity on when players should hit the buttons. This was something the team was already well aware of, and it is something we are still working to fix. This problem is deeply rooted in the sync issue.

Outside of the gameplay issue, playtesters found the virtual world immersive, fun, and exciting, especially coupled with the music. The idea that they could “get inside” a fully immersive display with sound was in itself a fun experience. One of the playtesters noted that they wished it had a proper surround audio system, where each player heard their own instrument on their side of the vehicle.

Another point of feedback was the interest curve. The gameplay was mostly flat and, while interesting in the beginning, became very repetitive. Some suggestions included adding power-ups, combo events, group combo events, and/or a score system that tracks player performance.

The group-combo event was particularly interesting for us, as we were struggling to create a more collaborative game experience, even with all the players playing along to the same music. If there were, for instance, a large object where all players had to hit the buttons at the same time, it would encourage greater communication and make the passengers feel like they are in a shared experience.
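As a rough sketch of how such a group-combo check could work, the function below treats the event as a success only if every seat registers a button press close enough to the target time. The seat names, the `is_group_hit` function, and the 0.15-second window are all assumptions made for illustration, not values from our build.

```python
def is_group_hit(press_times, target_time, window_seconds=0.15):
    """Return True only if every player pressed within the timing window.

    press_times maps a seat name to that player's press time in seconds,
    or None if the player did not press at all.
    """
    if not press_times:
        return False  # no players means no group hit
    return all(
        t is not None and abs(t - target_time) <= window_seconds
        for t in press_times.values()
    )


# Example: four passengers aiming for a large object due at 10.0 seconds.
presses = {
    "front_left": 10.02,
    "front_right": 9.95,
    "rear_left": 10.10,
    "rear_right": 10.00,
}
success = is_group_hit(presses, target_time=10.0)
```

A single shared window keeps the rule easy to explain to passengers: everyone hits together, or nobody scores.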

There was also feedback about the rear passengers, who could see very little of the front screen where the objects were flying in from. We are currently reworking the object animations so that, for the rear passengers, the objects approach from the sides, giving them a better way to gauge the timing of the beats.

We also received feedback on the visuals, specifically on contrast and brightness. The beat objects were difficult to distinguish from the bright environment. One of the guests gave us a very insightful comment: in a real car, you do not get the kind of brightness from the windows that you get from TV screens in a dark environment. This sensory overload may be a little too much of a distraction, especially when compared to real-life scenarios. Bringing the brightness down a little and using more natural lighting could bring the experience closer to what you would expect in real life.

Overall, it was an informative session that gave us a good set of feedback we could quickly work and iterate on to polish our experience. While we do not aim to add additional functions, the feedback we have received revealed simple changes we could make to make the experience better.

We are not oblivious to the technical challenge either, but we are confident that we can have a functioning rhythm game by the middle of next week.