Hello and welcome to week 6 of our development blog!

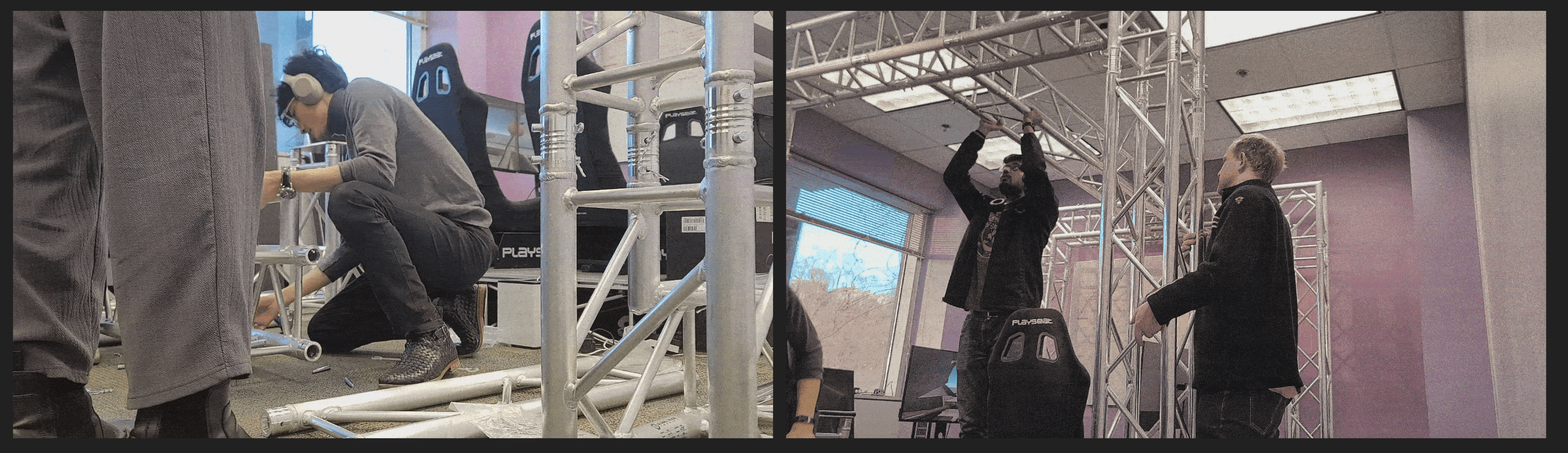

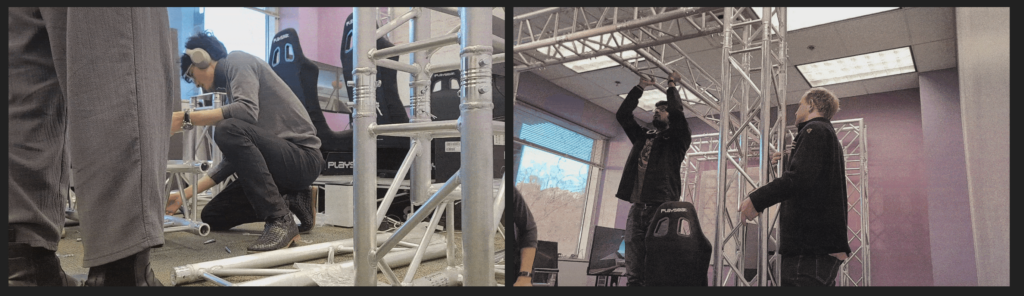

Hardware Update

We have an exciting hardware update: all the parts have arrived! We assembled the truss system in about 4 hours, then spent another 2 hours attaching the hinges to the pillars and mounting the 50″ TVs.

We chose larger displays and mounted them in landscape orientation to minimize the gaps between screens, since visible gaps would break the immersion of the CAVE system.

We also decided to have all passengers face forward to create a shared perspective. Conflicting perspectives could break immersion in our CAVE system, because our displays are flat, unlike a real window, which has 3D space beyond it.

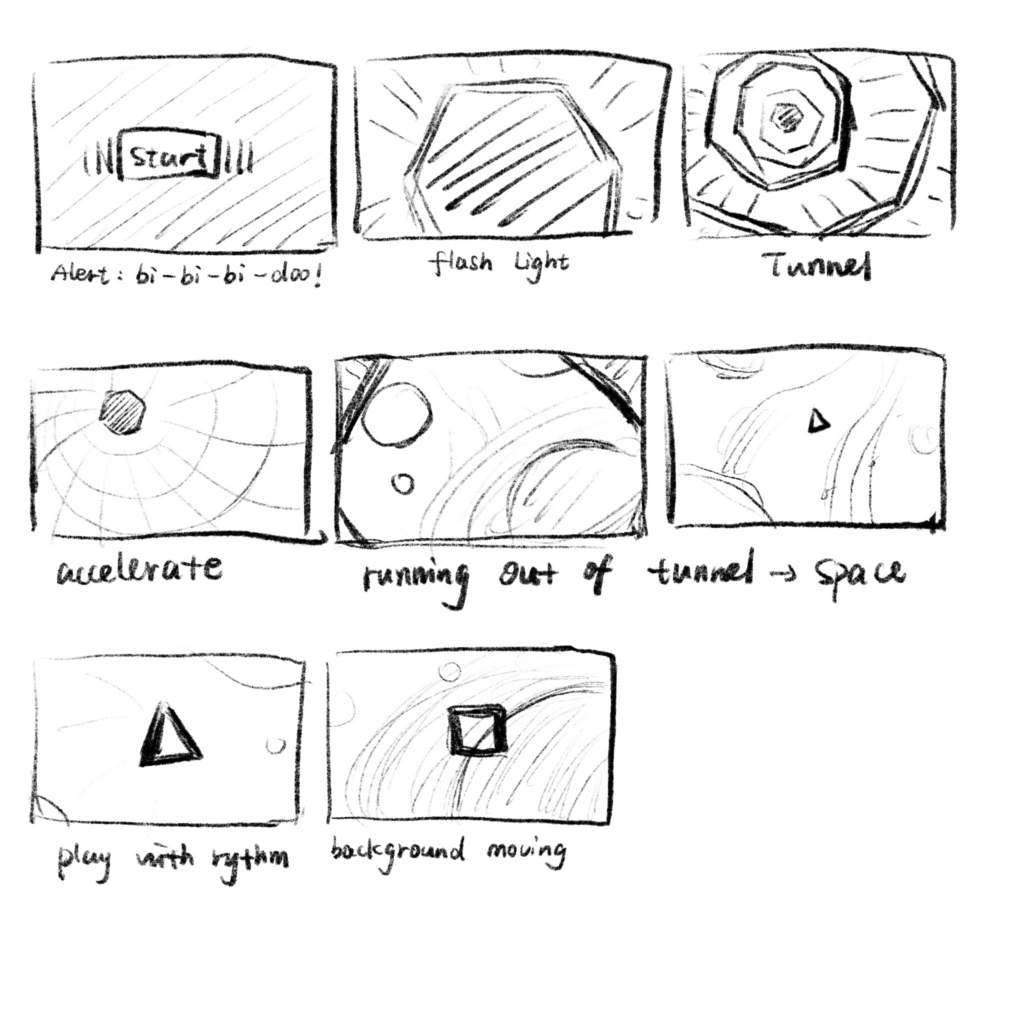

Experience Design

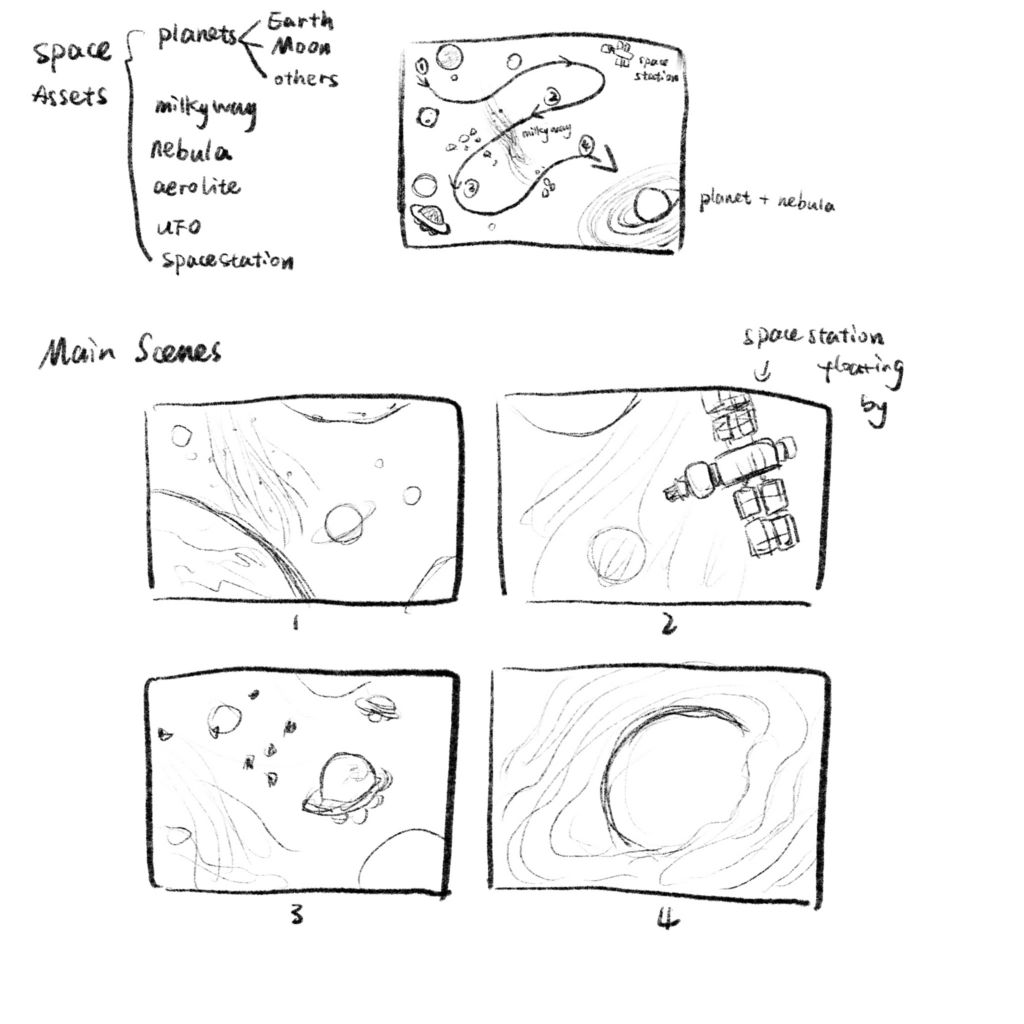

Amelia, our designer, created a storyboard for our experience. We were heavily inspired by the Disney ride Space Mountain. The idea is that the guests enter the car and are driven through a tunnel, which serves two purposes:

- Build anticipation for the coming experience

- Provide a sense of being driven in a real vehicle

When our rhythm game experience starts, the guests are ejected out of the tunnel into a vast, open virtual space: in this case, outer space. We want to create a fixed ‘track’ along which the vehicle moves, so that the simulated vehicle movement can affect the gameplay. We chose a large, open virtual space because it gives us flexibility and a greater margin for error when matching perspectives.

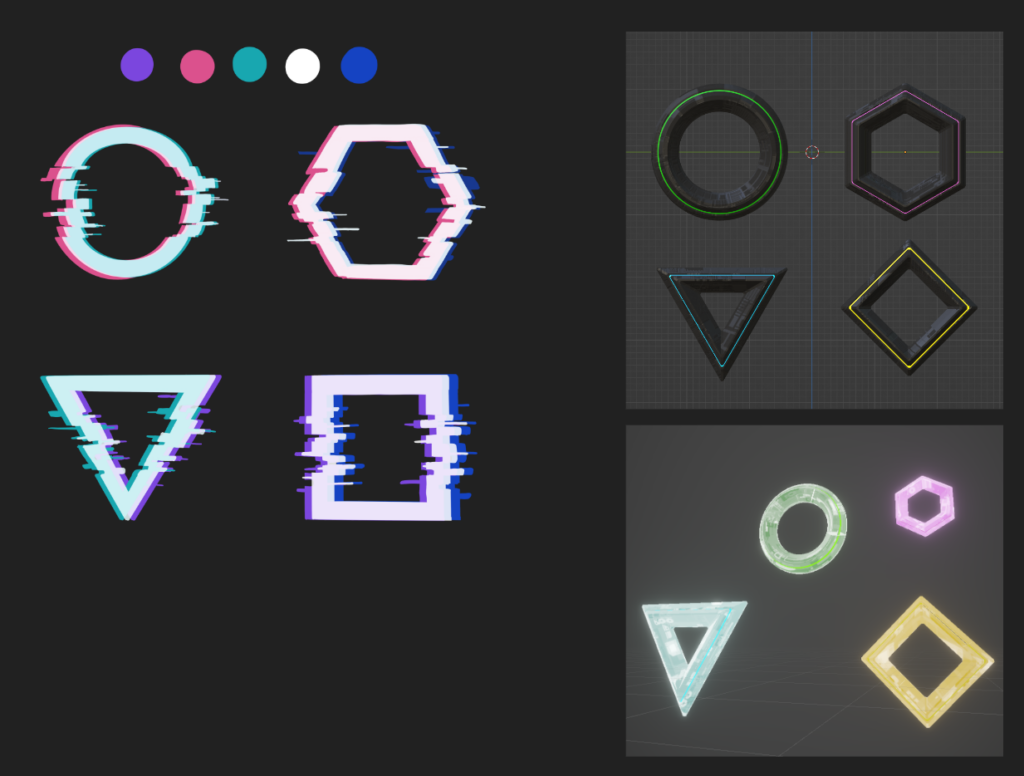

Alex, our designer, also created 3D objects that represent the ‘beats’ the passengers have to destroy as the vehicle moves through the environment. These objects will spawn and approach the vehicle and, much like in a traditional rhythm game, must be destroyed on the beat.

Gameplay & Sound

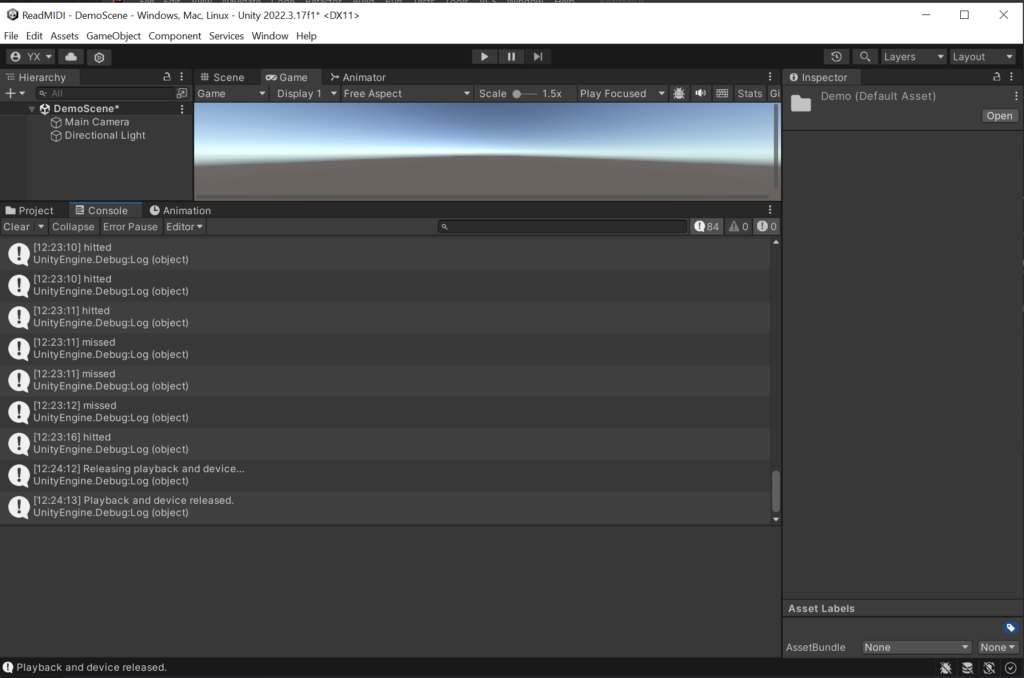

We made progress on the gameplay itself and established a pipeline for importing MIDI files into Unity. We are using DryWetMIDI, a free Unity plugin: https://assetstore.unity.com/packages/tools/audio/drywetmidi-222171#description

We currently have a text-only demo of the rhythm game: the music plays in the background, and pressing the spacebar on the beat prints feedback to the console.
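Conceptually, the console check asks whether a key press lands inside one of the hit-tracker note windows. The following is a minimal Python sketch of that idea, not our actual Unity (C#) code. The function `judge_presses`, the representation of windows as sorted, non-overlapping (start, end) pairs in seconds, and the one-press-per-window rule are all illustrative assumptions.

```python
# Hypothetical sketch of the demo's hit check (not the project's Unity code).
# Each hit-tracker note is assumed to be a (start, end) window in seconds;
# a press inside an unused window is a hit, anything else is a miss.
from bisect import bisect_right


def judge_presses(windows, presses):
    """Return "Hit!" or "Miss" for each press time (seconds).

    windows: (start, end) tuples, sorted by start and non-overlapping.
    presses: press times in seconds.
    Each window can be consumed by at most one press.
    """
    starts = [start for start, _ in windows]
    used = [False] * len(windows)
    results = []
    for t in presses:
        i = bisect_right(starts, t) - 1  # last window starting at or before t
        if i >= 0 and t <= windows[i][1] and not used[i]:
            used[i] = True
            results.append("Hit!")
        else:
            results.append("Miss")
    return results


if __name__ == "__main__":
    windows = [(0.9, 1.1), (1.9, 2.1), (2.9, 3.1)]
    presses = [1.05, 1.5, 2.0, 2.05]
    for t, verdict in zip(presses, judge_presses(windows, presses)):
        print(f"{t:.2f}s: {verdict}")
```

With these windows, presses at 1.05 s and 2.0 s count as hits, while the press at 1.5 s (outside every window) and the second press at 2.05 s (its window is already used) count as misses.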

We’ve documented this pipeline in the following section:

Exporting MIDI for Unity

This pipeline uses the DryWetMIDI plugin: https://assetstore.unity.com/packages/tools/audio/drywetmidi-222171#description

You need at least three tracks to create a rhythm game:

- Melody: the music players hear

- Hit tracker: where Unity checks for the player’s button presses

- Object spawner: what Unity uses to spawn objects for the game

The hit tracker and object spawner must each be on a track separate from the melody, which must always stay on its own track. Turn the hit tracker and object spawner tracks down by setting their faders to negative infinity. Do not mute these tracks: muted tracks will not export their MIDI notes for Unity to read.

The hit tracker mirrors the melody, except that every note has the same length (around 0.2 seconds) and starts about 0.1 seconds ahead of the corresponding melody note. This gives players some margin of error and also accounts for lag on the Unity side.
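Since each hit-tracker note starts about 0.1 seconds early and lasts about 0.2 seconds, the accepted window runs from roughly 0.1 seconds before to 0.1 seconds after each melody note. The Python sketch below shows that arithmetic; in our pipeline these notes live in the exported MIDI track, so the helper `hit_tracker_notes` and its constants are illustrative assumptions rather than part of DryWetMIDI or our Unity code.

```python
# Hypothetical helper: derive hit-tracker windows from melody note times,
# using the values described above (start ~0.1 s early, fixed ~0.2 s length).
HIT_LEAD_S = 0.1    # how far each hit note starts ahead of the melody note
HIT_LENGTH_S = 0.2  # fixed length of every hit note


def hit_tracker_notes(melody_onsets, lead=HIT_LEAD_S, length=HIT_LENGTH_S):
    """Return a (start, end) window, in seconds, for each melody onset."""
    return [(onset - lead, onset - lead + length) for onset in melody_onsets]


if __name__ == "__main__":
    # A melody note at 1.0 s yields a window of roughly 0.9 s to 1.1 s.
    for start, end in hit_tracker_notes([1.0, 2.5]):
        print(f"window {start:.2f}s to {end:.2f}s")
```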

The object spawner also mirrors the melody, and like the hit tracker, its notes can all be the same length. Its notes must start ahead of the melody by however long you want each object to remain visible before the player has to hit it.
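The spawn timing reduces to subtracting the desired visible time from each melody note time. The Python sketch below also assumes, purely for illustration, that each object travels in a straight line at constant speed so it reaches the vehicle exactly on its beat; the names `Beat`, `schedule_spawns`, `object_distance`, and the spawn distance parameter are hypothetical.

```python
# Hypothetical sketch: schedule spawns ahead of each melody note and move
# each object linearly so it reaches the player exactly on the beat.
from dataclasses import dataclass


@dataclass
class Beat:
    hit_time: float    # melody note time, in seconds
    spawn_time: float  # when the spawner note fires, in seconds


def schedule_spawns(melody_onsets, visible_s):
    """Spawn each object visible_s seconds before its melody note."""
    if visible_s <= 0:
        raise ValueError("visible_s must be positive")
    return [Beat(hit_time=t, spawn_time=t - visible_s) for t in melody_onsets]


def object_distance(beat, now, spawn_distance):
    """Distance from the player at time `now`: shrinks linearly from
    spawn_distance at spawn_time to 0 at hit_time (assumed straight path)."""
    duration = beat.hit_time - beat.spawn_time
    progress = (now - beat.spawn_time) / duration
    progress = min(max(progress, 0.0), 1.0)  # clamp before spawn / after hit
    return spawn_distance * (1.0 - progress)


if __name__ == "__main__":
    beat = schedule_spawns([4.0], visible_s=2.0)[0]
    for now in (2.0, 3.0, 4.0):
        print(now, object_distance(beat, now, spawn_distance=50.0))
```

For a note at 4.0 s with 2 seconds of visibility, the object spawns at 2.0 s, is halfway (25 units) at 3.0 s, and arrives at 4.0 s.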

It’s also important to keep the hit tracker and object spawner notes each on a single fixed pitch that does not clash with the melody. Unity then uses these pitches to tell the tracks apart: for example, we tell it that F1 is the hit tracker and F0 is the object spawner.
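Routing notes by pitch is a simple lookup once note names are converted to MIDI note numbers. This Python sketch assumes the convention where C4 = 60 (so F0 = 17 and F1 = 29); some DAWs label octaves differently (for example C3 = 60), so the numbers in your exported file may be shifted by an octave. The helpers `midi_number` and `route_notes` and the (pitch, time) note format are hypothetical.

```python
# Hypothetical sketch: route exported MIDI notes to gameplay roles by pitch.
# Assumes C4 = MIDI note 60; octave labelling differs between tools.
NOTE_OFFSETS = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
                "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}


def midi_number(name, octave):
    """MIDI note number for a note name and octave, with C4 = 60."""
    return 12 * (octave + 1) + NOTE_OFFSETS[name]


HIT_PITCH = midi_number("F", 1)    # 29: hit tracker
SPAWN_PITCH = midi_number("F", 0)  # 17: object spawner


def route_notes(notes):
    """Split (pitch, time) pairs into hit times and spawn times.

    Notes on any other pitch (e.g. melody notes) are ignored.
    """
    hits, spawns = [], []
    for pitch, time in notes:
        if pitch == HIT_PITCH:
            hits.append(time)
        elif pitch == SPAWN_PITCH:
            spawns.append(time)
    return hits, spawns


if __name__ == "__main__":
    print(route_notes([(17, 1.0), (29, 2.9), (60, 3.0), (29, 3.9)]))
```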

Looking Ahead

Our halves presentation is on Monday, and we will spend the coming days preparing for it. After that, we plan to have our first playable demo ready to playtest over the week. Since we already have a rudimentary working demo, albeit without any visuals, we are confident we will meet this milestone.

The demo will include the virtual outer space environment, 3D assets for the rhythm blocks, and a destruction animation when they are hit, all synchronized with the beat of the music. Because the pipeline already handles spawning and hit detection, integrating the 3D assets should be fairly straightforward.

As for the sound design itself, we have found numerous free MIDI file archives, from which we can download MIDI files and remix them to adjust the difficulty as we see fit. Hopefully this will save us precious time, resources, and budget.
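One possible way to make a downloaded chart easier, offered here only as an assumption and not as our chosen method, is to drop notes that follow the previously kept note too closely. The Python sketch below shows that idea with a hypothetical `thin_notes` helper; in practice the hit-tracker and spawner tracks would need to be thinned the same way so all three tracks stay aligned.

```python
# Hypothetical sketch: lower difficulty by thinning dense passages,
# keeping only notes at least min_gap_s after the last kept note.
def thin_notes(onsets, min_gap_s):
    """Return the sorted onsets that are at least min_gap_s apart."""
    kept = []
    for t in sorted(onsets):
        if not kept or t - kept[-1] >= min_gap_s:
            kept.append(t)
    return kept


if __name__ == "__main__":
    print(thin_notes([0.0, 0.1, 0.25, 0.5, 0.6, 1.0], min_gap_s=0.3))
```

With a 0.3-second minimum gap, the notes at 0.1, 0.25, and 0.6 seconds are dropped, leaving 0.0, 0.5, and 1.0.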

Thank you for reading our week 6 blog, and we’ll see you again next week!