This week we conducted several playtests, gathering insightful and unexpected results that will help us refine our design and interactions.

Playtesting at Hunt Library

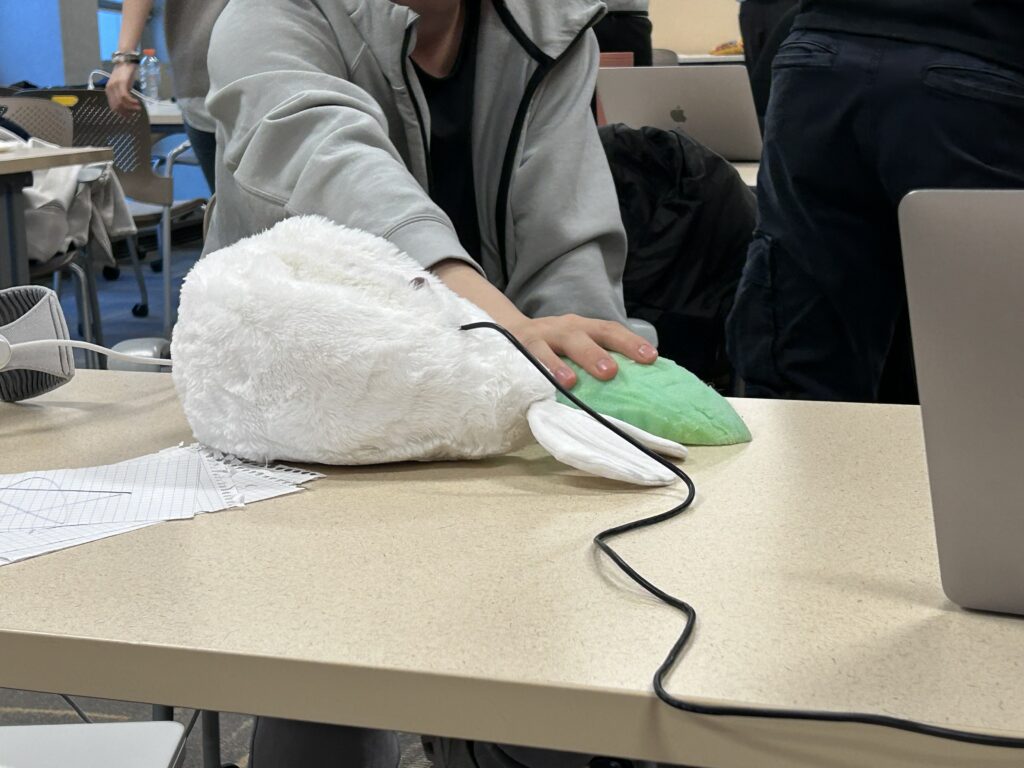

Joy completed the main patterning of our plush, which now houses Brian’s electronics. With this first prototype in hand, we were ready for realistic playtesting.

On Tuesday, we attended playtest night at Hunt Library, where we tested touch interactions with the plush and a minigame concept with other CMU students. Our main conclusions from this session were:

1. We need to teach players how hard to press the plush. Even when prompted for a gentle stroke or a hard squish, players varied greatly in the amount of pressure they applied.

2. A hard shell will be important for protecting the electronics inside the plush, and adding more details to the plush (ears, eyes) will make it more intuitive for users to know how to hold it.

For example, one tester saw the plush (without a face at the time of testing) as a chicken drumstick and wanted to whack it around – not quite our intended interactions.

3. Sophie acted out our proposed touch minigame, in which animations prompt the user to touch different parts of the plush. She confirmed that the animations and false cues were intuitive and effective. This reinforced the importance of making it obvious where each body part of the plush is located.

Testing Secondary Attention

Our advisor Derek provided important feedback on our playtesting approach, noting that we need to redirect our focus toward our goal of secondary attention. He pointed out that people will interact differently when the plush is the sole focus versus when they are doing something else while holding it. To simulate this, he suggested playing a video for participants to watch while they interact with the plush.

Playtesting with Derek’s Kids

This Friday, Derek brought his kids, Reed and Evan, to playtest with us. This was our first opportunity to test secondary attention interactions and our first playtest with children. Here are the main takeaways:

Things we did well:

- We established a baseline of what the kids were bringing into the experience by asking about their connections to pets, stuffed animals, and digital pets (we can think of users’ past experience as a Venn diagram of these three, and it’s unlikely they will answer “no” to all three).

- We normalized stuffed animal interactions by having additional stuffed animals for others to play with in the background, creating a comfortable environment.

- We used distractions, like a gameplay video, to create a relaxed atmosphere and simulate secondary attention.

Things we could improve:

- Introduce the pet as a personality, not just a study object. For example, saying, “This is Luceal,” helps users connect with it as a character.

- Focus on emotional prompts, such as “Luceal is scared” or “Luceal is playful,” and observe how users respond. This approach doesn’t require a full implementation on the Apple Vision Pro and can be tested with simpler tools like flashcards.

Also note the new ears that Joy added to our plush!

Customization Screen Implementation

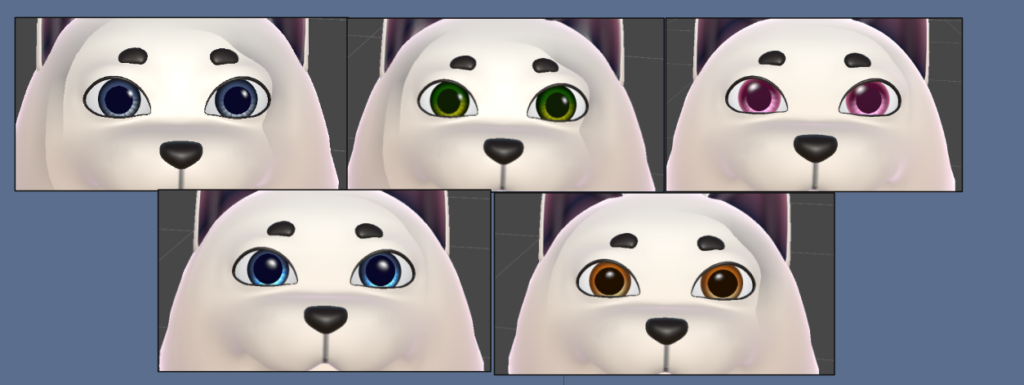

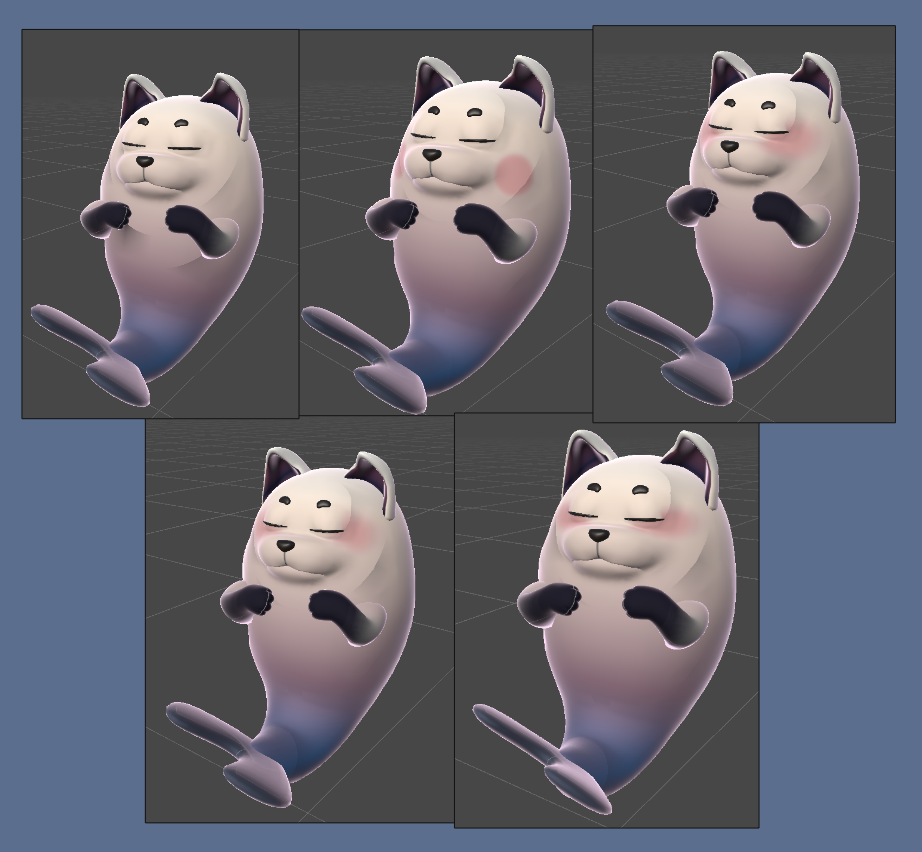

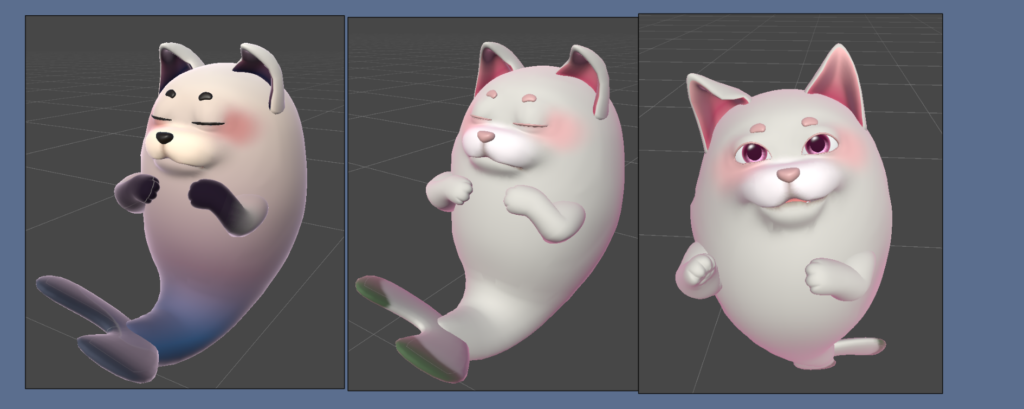

With Paige’s new personalization shaders, Jerry implemented a customization screen, allowing users to personalize their virtual pet.

Next Week

Sophie and Jerry are headed to GDC next week.

They will have a booth where they plan to give a demo of our game so far and allow GDC attendees to playtest. This is a very exciting opportunity, and we look forward to telling you how it goes next week!
