This week, we had our 1/4s on Monday afternoon, which helped us settle on a design direction. We also have updates in art and on the physical tech side.

1/4s Feedback & Direction

Our main objective for 1/4s was to spark discussion and receive feedback on our project goal and design ideas, helping us to choose one of the 3 design plans from last week and clarifying our direction going forward.

Moreover, we conducted a mini playtest during the faculty walkarounds by offering faculty stuffed animals of varying sizes so that we could observe what interactions the size and shape of a plush prompt in people.

Thanks to faculty feedback, we cemented the decision that we will not aim for full overlay of the virtual pet onto the physical pet. Animation of the virtual pet when it is overlaid on the physical pet will look unnatural and break the illusion of immersion in the XR space. This, combined with the technical challenges of tracking a physical object that may move and be deformed, ruled out full overlay as a feasible path for now.

Instead, we will opt for a combination of plans #1 and #3 from last week’s post. In the experience we are building, the physical pet will be stationary. The user can interact with the virtual pet through gestures while it moves through space. The experience will culminate when the virtual pet merges with the physical pet, so that the user can interact with it by touching the physical plush.

Furthermore, we received feedback on our purpose and target audience. Children who need an emotional support pet and Vision Pro users who can’t have a pet are two very different demographics. While our project has therapeutic components, discussion with faculty clarified that we would be building for the latter. Our foundation is fun, new touch interaction, rather than the responsibility of serving as an emotional crutch.

Narrative

We met with Brenda Harger afterwards to develop a narrative that justifies our experience and makes it real, rather than feeling like a technical limitation.

Our main takeaways from the meeting were:

- Treat the physical and virtual pet as two different beings with very different personalities. The virtual pet is extraverted and energetic, while the physical pet is introverted and shy.

- Create a loop of initiation and reaction: both the pet and the user will initiate interactions, leading to more interactions.

- The experience should be the building of a relationship from a blank slate. The user must learn the pet’s language by trial and error. In this way, the experience is real and simple while being something new you can only get with this technology rather than a real pet.

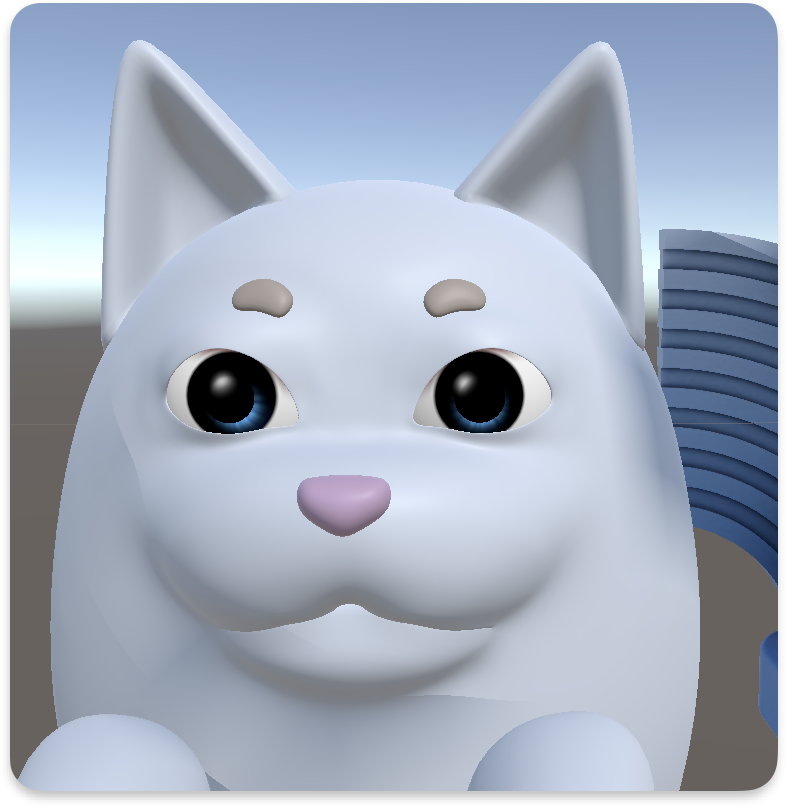

Art

Paige wrote eye shaders for our character. The colors can be easily adjusted – here are the results of two different colors.

Tech

Our ESP32 finally arrived Thursday of this week, allowing us to start building the embedded systems of our plush.

Brian came up with a solution to map the analog output of the force sensors to keyboard inputs, so that force readings can be delivered to the Apple Vision Pro. The ESP32 will function as a Bluetooth keyboard: each analog force reading will be quantized and converted to one keystroke from a set range of keys (e.g. 12345 or qwert). This allows multiple force sensors placed in different parts of the physical pet plush to each deliver discrete readings of force on a set scale.
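To make the mapping concrete, here is a minimal sketch of the quantization step in Python. It is an illustration of the idea, not our firmware: the sensor names (`head`, `back`), the assumed 12-bit reading range of 0 to 4095, and the function names are all hypothetical. Only the key rows 12345 and qwert come from the plan above.

```python
# Sketch of the force-to-keystroke mapping (illustrative, not the firmware).
# Assumption: each force sensor yields a 12-bit analog reading, 0..4095.
ADC_MAX = 4095

# Hypothetical sensor names; each sensor gets its own row of keys,
# ordered from lightest to hardest press.
SENSOR_KEYS = {
    "head": "12345",
    "back": "qwert",
}


def quantize(reading, levels, adc_max=ADC_MAX):
    """Map an analog reading onto one of `levels` equal-width buckets."""
    # Clamp out-of-range readings so noise cannot produce a bad index.
    reading = max(0, min(adc_max, reading))
    return min(levels - 1, reading * levels // (adc_max + 1))


def key_for(sensor, reading):
    """Return the keystroke that encodes this sensor's force level."""
    keys = SENSOR_KEYS[sensor]
    return keys[quantize(reading, len(keys))]


if __name__ == "__main__":
    for sensor, reading in [("head", 0), ("head", 2048), ("back", 4095)]:
        print(sensor, reading, key_for(sensor, reading))
```

Because each sensor owns a distinct row of keys, the receiving app can tell both where the plush was touched and how hard from a single keystroke. A real version would likely also need a threshold below which no key is sent, so that an untouched sensor stays silent.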

Plan going forward

With a design in place, we made a plan for creating a working prototype before 1/2s. We assigned each team member deliverables, and we plan to do a sprint in each of weeks 4 and 5, taking Friday to play through what we have and review our design direction.
