This week was packed with exciting developments, inspiring discussions, and some technical challenges that we were able to overcome. Here’s a breakdown of what we’ve been up to:

Soft Circuits and Touch Interactions

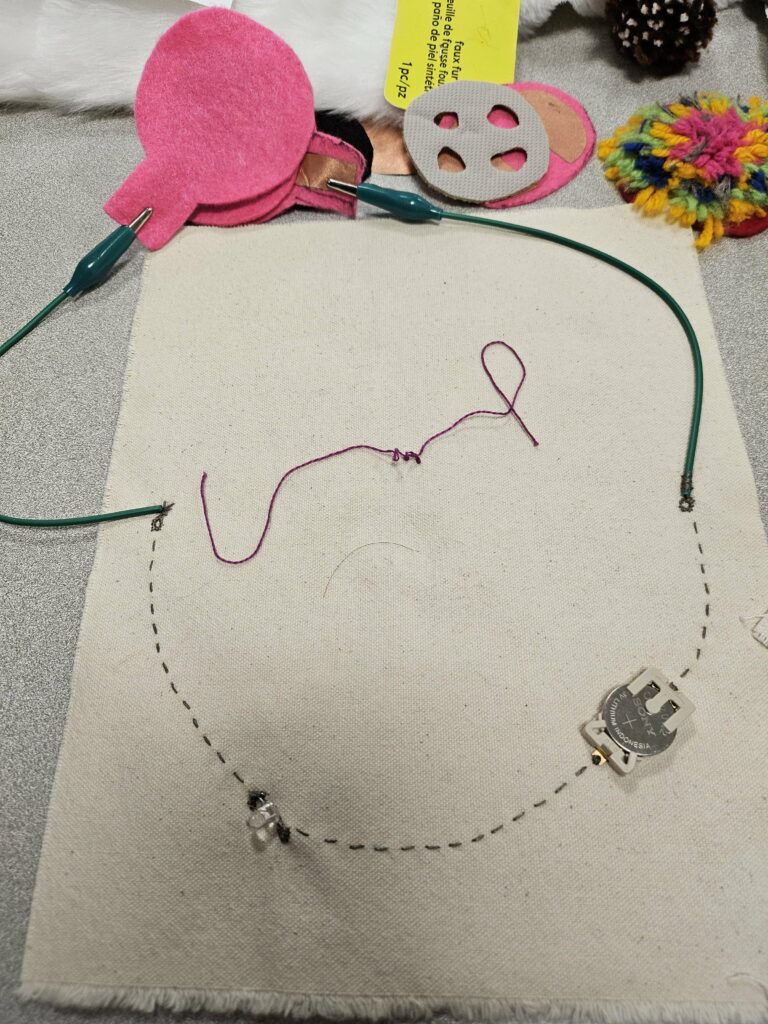

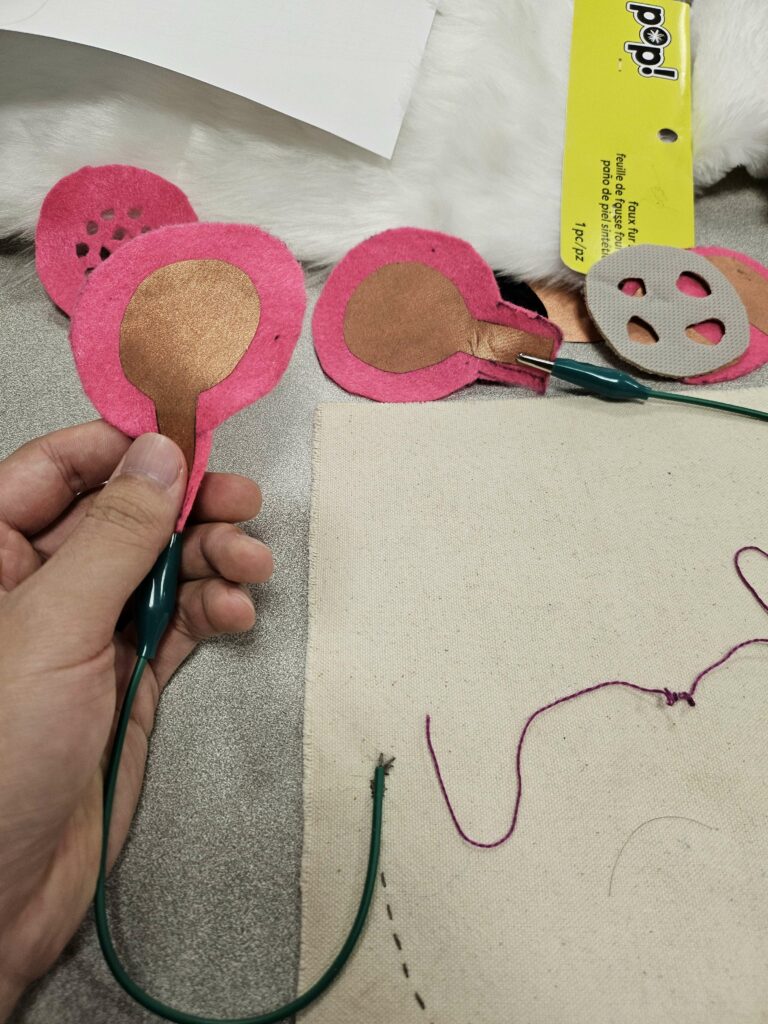

We had the pleasure of meeting with Olivia Robinson, one of our faculty consultants and an expert in soft technologies at IDeATe, to discuss the technical details of successfully building our physical pet plush. Olivia introduced us to a variety of soft circuits built with conductive yarn, which enable touch interactions such as squeezing, pulling, and petting.

We were particularly inspired by the potential of these soft circuits to report analog data across all of these touch interactions, and by how much better they fit inside our plush than hard, pre-built force-sensing resistors. After 1/2s, we plan to experiment with integrating Olivia’s designs into our pet, exploring how these touch-sensitive materials can deepen the connection between the user and their companion.

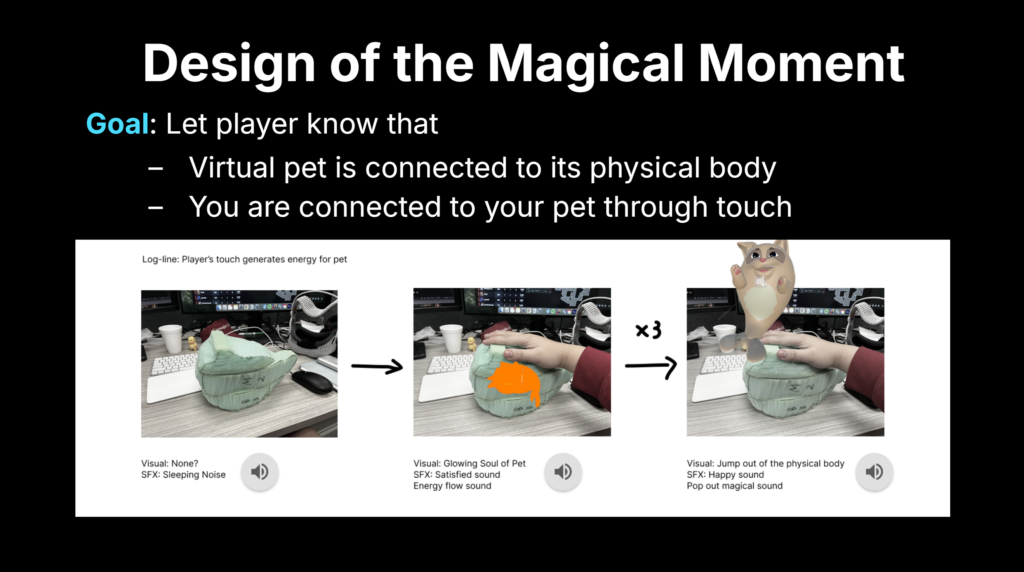

The Magical Moment: Linking Physical and Virtual Pets

To solve the storytelling challenge of linking the physical and virtual pet, Jerry designed the Magical Moment of our experience—the point where the physical and virtual pets are linked for the player. This moment is framed within the context of a ritual, designed to feel seamless and magical. Here’s how it works:

- The player puts on the headset and hears a sleeping sound. Only the sleeping physical pet is visible.

- They touch the physical pet to “charge” it with energy.

- When the pet has enough energy, it wakes up and the virtual pet pops out of the physical pet.

This approach not only simplifies the technical challenges with object tracking but also ensures that the moment feels natural and effortless for the user.
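For readers who want to see the ritual as logic, here is a minimal sketch of the three steps as a small state machine. It is an illustration only, not our actual implementation: the names `PetState` and `MagicalMoment`, and the `wake_threshold` and `charge_rate` values, are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, auto


class PetState(Enum):
    SLEEPING = auto()  # headset on, only the sleeping physical pet is visible
    CHARGING = auto()  # the player has started touching the pet
    AWAKE = auto()     # the virtual pet has popped out of the physical pet


@dataclass
class MagicalMoment:
    wake_threshold: float = 1.0  # hypothetical energy needed to wake the pet
    charge_rate: float = 0.5     # hypothetical energy gained per second of touch
    energy: float = 0.0
    state: PetState = PetState.SLEEPING

    def update(self, touching: bool, dt: float) -> PetState:
        """Advance the ritual by dt seconds, given whether the pet is touched."""
        if self.state is PetState.AWAKE:
            return self.state  # the pets are already linked
        if touching:
            self.state = PetState.CHARGING
            gained = self.charge_rate * dt
            self.energy = min(self.wake_threshold, self.energy + gained)
            if self.energy >= self.wake_threshold:
                self.state = PetState.AWAKE
        # Assumption: letting go pauses charging and keeps the stored energy.
        return self.state
```

With these placeholder values, two seconds of continuous touch wakes the pet. Whether energy should drain when the player lets go is a design choice this sketch leaves out.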

ESP32 Challenges and Solutions

On the technical side, we ran into an issue with our ESP32 setup. Specifically, the Apple Vision Pro was unable to read physical keyboard inputs in-game, which posed a problem for our force sensor inputs. Thankfully, Jerry and Brian caught this early and quickly worked around it by implementing a hidden text box.

Brian is now working on converting the force sensor inputs into Xbox controller inputs, which not only resolves the issue but also gives us more freedom in how we interpret and use the analog sensor data. This solution opens up new possibilities for nuanced interactions, allowing us to better capture the subtleties of touch and pressure.
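To illustrate what treating the force sensor as an analog controller input could look like, here is a rough sketch that maps a raw reading to a trigger-style value between 0.0 and 1.0. It is not Brian's code: the function name `force_to_trigger`, the 12-bit reading range, and the deadzone value are assumptions made for the example.

```python
ADC_MAX = 4095  # assumption: raw readings are 12-bit, from 0 to 4095


def force_to_trigger(raw: int, deadzone: float = 0.05) -> float:
    """Map a raw force-sensor reading to a 0.0..1.0 trigger-style value."""
    # Clamp out-of-range readings so noise cannot produce invalid values.
    clamped = max(0, min(ADC_MAX, raw))
    normalized = clamped / ADC_MAX
    # Ignore very light pressure so a resting plush does not register a touch.
    if normalized <= deadzone:
        return 0.0
    # Rescale so the output rises smoothly from 0 just past the deadzone.
    return (normalized - deadzone) / (1.0 - deadzone)
```

A continuous value like this is what lets a gentle pet and a firm squeeze be told apart, which a single key press cannot express.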

Looking Ahead

With these updates, we’re making steady progress toward our goals. Here’s what’s next:

- Preparing for 1/2s: As 1/2s presentations are in Week 7, we will be sprinting towards completing a prototype and rehearsing our presentation.

- Refining the Magical Moment: We’ll continue to build and polish this key interaction, ensuring that the transition between the physical and virtual pets feels as magical and seamless as possible.

- Finalizing the ESP32 setup: Once Brian’s work on converting force sensor inputs to Xbox controller inputs is complete, we can connect it to the physical pet and have a working prototype.

We’re excited to keep pushing forward and can’t wait to share more updates as we continue to bring this project to life!
