Project Overview

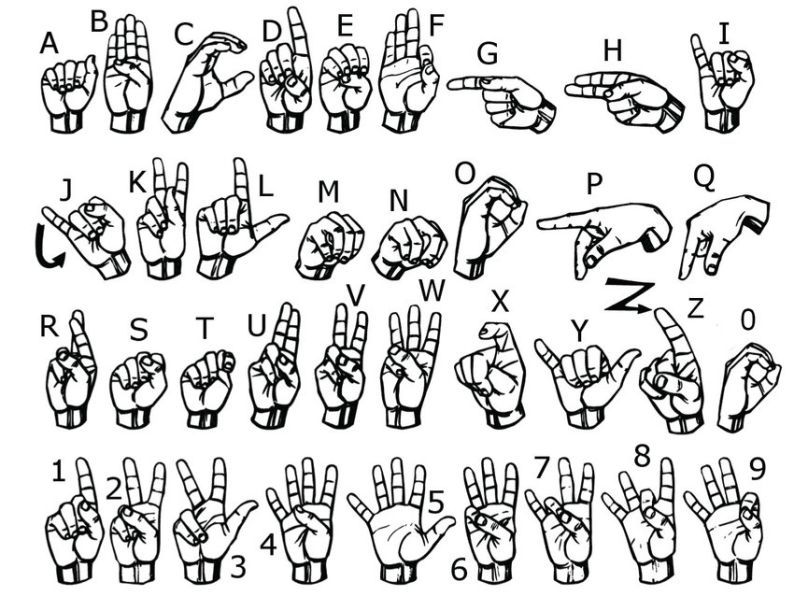

Project Gesture Quest is an educational project that teaches sign language using motion tracking technology. Our team aims to develop a video game that teaches sign language through entertaining and engaging experiences. To capture players’ hand gestures as game inputs, we use Google MediaPipe for hand tracking and detection, and machine learning for gesture recognition.
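MediaPipe’s hand tracker reports 21 landmarks per detected hand. In MediaPipe’s numbering, index 0 is the wrist and index 9 is the base knuckle of the middle finger. Raw coordinates change whenever the hand moves or changes distance from the camera, so a recognizer usually works on a normalized version of them. The sketch below shows one common normalization. It is not the project’s code. It assumes the landmarks have already been copied out of MediaPipe into plain (x, y, z) tuples, and the name `landmarks_to_features` is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical helper: assumes landmarks were already extracted from MediaPipe as tuples.
Landmark = Tuple[float, float, float]  # (x, y, z) for one hand landmark

WRIST = 0              # MediaPipe hand landmark index for the wrist
MIDDLE_FINGER_MCP = 9  # MediaPipe hand landmark index for the middle-finger base knuckle


def landmarks_to_features(landmarks: Sequence[Landmark]) -> List[float]:
    """Flatten 21 hand landmarks into a translation- and scale-invariant vector.

    Each point is shifted so the wrist sits at the origin, then divided by the
    wrist-to-middle-knuckle distance so hand size and camera distance cancel out.
    """
    if len(landmarks) != 21:
        raise ValueError(f"expected 21 landmarks, got {len(landmarks)}")
    wx, wy, wz = landmarks[WRIST]
    scale = math.dist(landmarks[WRIST], landmarks[MIDDLE_FINGER_MCP])
    if scale == 0:
        raise ValueError("degenerate hand: wrist and middle knuckle coincide")
    features: List[float] = []
    for x, y, z in landmarks:
        features.extend(((x - wx) / scale, (y - wy) / scale, (z - wz) / scale))
    return features  # 63 values: (x, y, z) for each of the 21 landmarks
```

Expressing every point relative to the wrist and scaling by hand size means two performances of the same sign are compared by handshape, not by where the hand appears in the frame.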

The game’s foundational structure and learning curve have been shaped in collaboration with Jonathan Tsay, a researcher with a background in theoretical mathematics, physical rehabilitation, and cognitive neuroscience. The project is designed to make learning American Sign Language (ASL) both fun and interactive. Instead of relying on traditional classroom methods, it invites learners to dive into role-play and problem solving. This approach makes learning more enjoyable and memorable, and its visual elements help players understand and retain ASL more effectively. By mixing learning and testing in a playful setting, the game makes ASL easier to grasp and helps bridge the communication gap with deaf and hard-of-hearing communities.

Composition Box

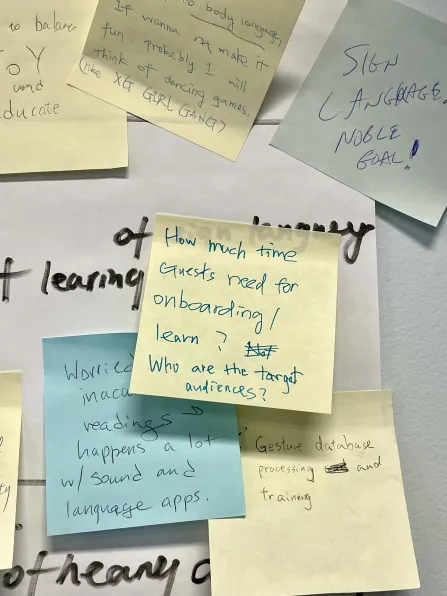

For the Composition Box exercise, we made a poster that begins with a one-sentence description: a game designed to creatively educate individuals in sign language, enhancing their ability to communicate with the hard-of-hearing community. Following the template, we then completed the remaining sections, including inspiration, experiences, needs, and experience goals. During the playtesting session, we received many comments and a few questions, and we reviewed this valuable first-round feedback as a team afterward.

Core Hours

- Mon 11am – 6pm

- Tue 3pm – 6pm

- Wed 10am – 6pm

- Thu 2pm – 6pm

- Fri 11am – 2pm

Tech Investigation

We first looked through past projects for similar work we could refer to, but found little useful information. We then evaluated different platforms and searched online resources to get a better overview of the technical side.

Game Dev

- Unity vs. UE5

  - Unity makes two-way communication with other languages (such as Python) easier.
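A local UDP socket carrying small JSON messages is one simple way to set up two-way communication between a Python recognizer and Unity. The sketch below covers only the Python side. It relies on assumptions that this post does not state: the port number 5052, the message fields `gesture` and `confidence`, and the helper names are all hypothetical. Unity would also need a matching listener, for example a C# `UdpClient`.

```python
import json
import socket
from typing import Optional, Tuple

# Hypothetical address of a Unity-side UDP listener; not taken from the project.
UNITY_ADDR: Tuple[str, int] = ("127.0.0.1", 5052)


def send_gesture(sock: socket.socket, label: str, confidence: float,
                 addr: Tuple[str, int] = UNITY_ADDR) -> None:
    """Send one recognized gesture to Unity as a JSON datagram (hypothetical schema)."""
    payload = {"gesture": label, "confidence": round(confidence, 3)}
    sock.sendto(json.dumps(payload).encode("utf-8"), addr)


def receive_message(sock: socket.socket, timeout: float = 0.05) -> Optional[dict]:
    """Poll for a JSON message sent back by Unity (e.g. which sign a level expects).

    Returns the decoded object, or None if nothing arrives within `timeout` seconds.
    A timeout of 0 makes the poll non-blocking.
    """
    sock.settimeout(timeout)
    try:
        data, _ = sock.recvfrom(4096)
    except (socket.timeout, BlockingIOError):
        return None
    return json.loads(data.decode("utf-8"))
```

UDP suits per-frame gesture updates. A dropped packet is simply replaced by the next frame's update, and neither program blocks while waiting for the other.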

Sign Language Tracker

- Detection → Google MediaPipe vs. OpenPose vs. Leap Motion Controller

  - Google MediaPipe is accurate enough for our needs, even for similar handshapes such as M and N.

  - It requires no additional device (unlike the Leap Motion Controller), which makes the game accessible to more people.

- Recognition → ML → Python vs. ml5.js

  - Python offers better integration with the rest of our project.
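This post does not say which model performs recognition. As a stand-in illustration only, the sketch below uses k-nearest neighbors. This simple classifier labels a new hand pose by majority vote among the most similar recorded examples. It assumes each example is already a numeric feature vector (for instance, normalized landmark coordinates) paired with a sign label. The name `knn_predict` is hypothetical, and the two-dimensional demo data is made up.

```python
import math
from collections import Counter
from typing import Sequence, Tuple

# Illustrative k-NN stand-in; the project's actual recognizer is not specified.
Sample = Tuple[Sequence[float], str]  # (feature vector, sign label)


def knn_predict(train: Sequence[Sample], query: Sequence[float], k: int = 3) -> str:
    """Label `query` by majority vote among its k nearest training samples (Euclidean)."""
    if not train:
        raise ValueError("training set is empty")
    nearest = sorted(train, key=lambda sample: math.dist(sample[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Made-up 2-D vectors standing in for real landmark features.
    train = [([0.0, 0.0], "M"), ([0.1, 0.0], "M"), ([0.0, 0.1], "M"),
             ([1.0, 1.0], "N"), ([0.9, 1.0], "N"), ([1.0, 0.9], "N")]
    print(knn_predict(train, [0.05, 0.05]))  # prints "M"
```

Telling apart close handshapes like M and N depends largely on the quality of the features fed into the classifier. A more capable model could replace k-NN without changing its inputs.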

So our platform choice is Unity + Python + Google MediaPipe.

Unity Hand Tracking Simple Demo