Sign Language Research

Since we didn’t know much about sign language, we began by researching it through three main sources:

- The movie CODA (Child of Deaf Adults)

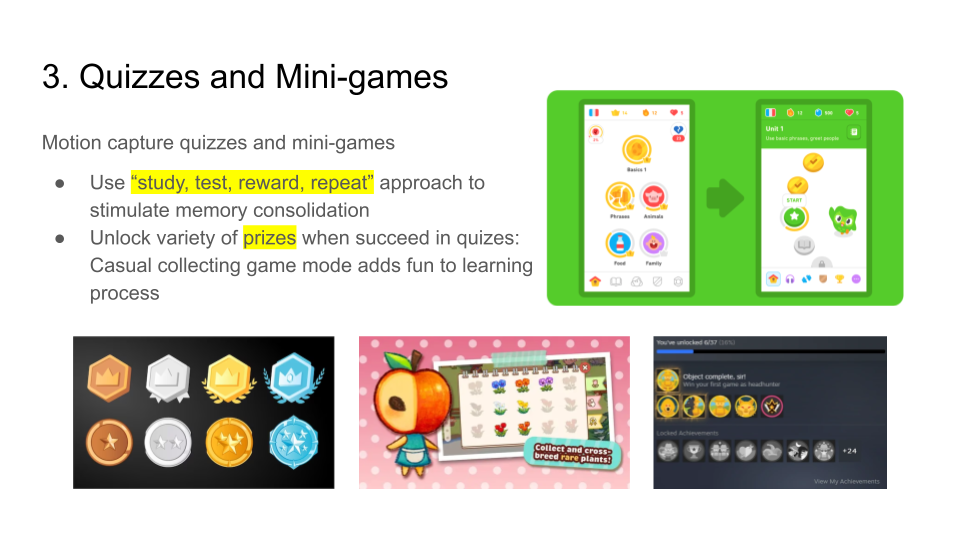

- Duolingo Investigation

- Online Resources – YouTube Videos and Google

One of our key findings at this stage is that sign language typically involves dynamic movements of both hands to express words or phrases, often combined with facial expressions. Given our project scope and schedule, we plan to focus on static poses and single-hand signs for the first half of the semester. Once we’ve achieved these basic goals, we will evaluate the feasibility of incorporating phrase-based movements and how far we can take them. Our client, JT, agreed with this plan.

For phrase-based gestures, we might detect a movement sequence by checking whether the hand passes through a series of checkpoint poses, which could lower the detection system’s accuracy. We will also need to weigh the tradeoff between system accuracy and vocabulary richness later.
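To make the checkpoint idea concrete, here is a minimal sketch of how a sequence detector could work. It is a hypothetical design, not something we have built yet: it assumes each checkpoint is a function that returns True when a single frame matches that pose, and that a sign counts as detected once all checkpoints are hit in order without too long a gap between them. The class name, the frame format (a plain dict), and `max_gap_frames` are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical frame format: a dict of per-frame hand features.
Frame = dict


@dataclass
class CheckpointSequence:
    """Detects a dynamic sign as an ordered list of checkpoint poses (sketch).

    Each checkpoint is a predicate over one frame. The detector advances one
    checkpoint at a time and resets if more than `max_gap_frames` frames pass
    between two consecutive checkpoints.
    """
    checkpoints: List[Callable[[Frame], bool]]
    max_gap_frames: int = 15
    _index: int = 0
    _frames_since_last: int = 0

    def reset(self) -> None:
        self._index = 0
        self._frames_since_last = 0

    def update(self, frame: Frame) -> bool:
        """Feed one frame; return True when the full sequence has been seen."""
        if self._index > 0:
            self._frames_since_last += 1
            if self._frames_since_last > self.max_gap_frames:
                # Too slow between checkpoints: start over from the first one.
                self.reset()
        if self.checkpoints[self._index](frame):
            self._index += 1
            self._frames_since_last = 0
            if self._index == len(self.checkpoints):
                self.reset()
                return True
        return False


# Usage: a hypothetical "hand sweeps left to right" sign, using normalized x.
sweep = CheckpointSequence([lambda f: f["x"] < 0.3, lambda f: f["x"] > 0.7], max_gap_frames=3)
print([sweep.update({"x": x}) for x in (0.2, 0.5, 0.8)])
```

This also shows where the accuracy tradeoff comes from: fewer or looser checkpoints and a larger gap allowance make detection more forgiving but more prone to false positives, while stricter ones do the opposite.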

Additionally, we looked into several language-learning methods and are trying to incorporate ideas such as shadowing into our design.

Connect with Consultants

We reached out to Prof. Golan Levin at the main campus, who gave us the following suggestions:

- A Leap Motion controller communicating with the Wekinator machine learning system over the OSC protocol.

- A decent camera with Google’s MediaPipe hand tracker and ml5.js.

- For time-based gesture tracking (not just pose identification), consider dynamic programming.

- Research existing ASL trackers.
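One common dynamic programming approach to time-based gesture matching is dynamic time warping (DTW); Prof. Levin’s suggestion did not name a specific algorithm, so DTW is our own assumption here. The sketch below compares a recorded movement against stored templates, assuming each frame has already been reduced to a small feature vector (for example, a wrist position from a hand tracker). Because DTW lets frames stretch or repeat, a slow and a fast performance of the same sign can still match.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw_distance(query: List[Vector], template: List[Vector]) -> float:
    """Dynamic time warping distance between two sequences of feature vectors.

    cost[i][j] holds the cheapest alignment of query[:i] with template[:j].
    """
    n, m = len(query), len(template)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = euclidean(query[i - 1], template[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # advance the query only
                cost[i][j - 1],      # advance the template only
                cost[i - 1][j - 1],  # advance both together
            )
    return cost[n][m]


def classify(query: List[Vector], templates: Dict[str, List[Vector]]) -> str:
    """Return the label of the template closest to the query under DTW."""
    return min(templates, key=lambda label: dtw_distance(query, templates[label]))


# Usage: a slow left-to-right sweep still matches the faster "right" template.
templates = {"right": [(0.0,), (1.0,), (2.0,)], "left": [(2.0,), (1.0,), (0.0,)]}
slow_sweep = [(0.0,), (0.0,), (1.0,), (1.0,), (2.0,)]
print(classify(slow_sweep, templates))
```

The full cost table is O(n·m) per comparison, which should be fine for short signs; longer recordings would likely need a window constraint to stay responsive.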

Thanks to Anthony, we also connected with the head of the ASL Department at Western Pennsylvania School for the Deaf. Although we haven’t scheduled a meeting yet, we’ve compiled a list of questions for sign language professionals.

Sign Language Question List

Sign Language General

- What percentage of signs are static, i.e., involve no movement?

- How often do hand interactions with the body (head, shoulder, chest) occur in sign language?

- For different signs, is there a rule about whether the palm should face the person you are communicating with?

Accuracy

- What is the tolerance for correct posture (e.g., hand shape, palm orientation, movement speed)?

- What common errors make a sign inaccurate?

Contents/Topics

- In which scenarios do people who are deaf use sign language most often?

- Besides people who are deaf or hard of hearing, which other groups are interested in learning sign language, and how common is that interest?

- Are there any fun sign language gestures, like the one for ‘helicopter’, that can intrigue and engage learners more easily?

Teaching

- What are the most difficult situations you have faced when teaching sign language?

- Are there differences in teaching formats when instructing hearing versus hard-of-hearing individuals?

- What elements could be added to teaching to help people with hearing loss learn sign language?

- What elements could be added to teaching to help hearing people practice and remember sign language?

Learning

- How would you describe the learning curve of sign language? What is the first sign or topic most people start with?

Pitch Ideas

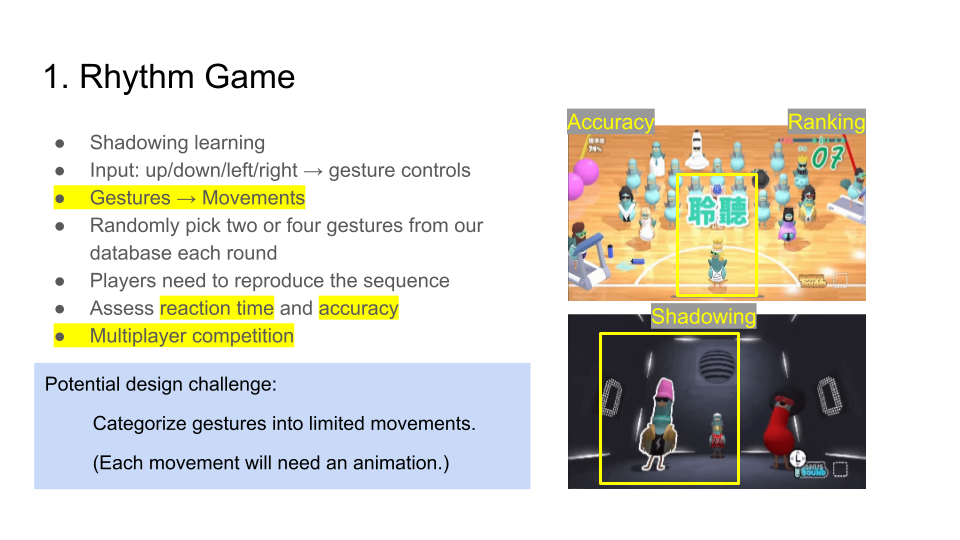

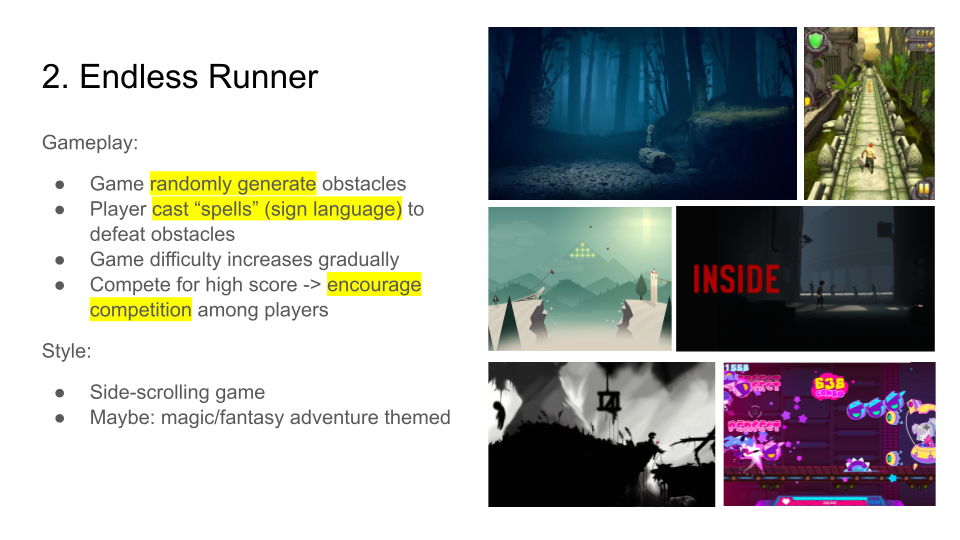

We came up with four different pitch ideas this week before our client meeting.

After discussions with JT, we summarized a few key points:

- A direct link to sign language learning

- Think about reaction time calculation – it starts from appearance

- Mini-games with different educational intentions

- Pre-training and post-training comparison
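Two of these points, measuring reaction time and comparing pre- and post-training results, could share a small timing helper. The sketch below assumes "appearance" means the moment a prompt appears on screen, so the clock starts then and stops when the sign is recognized; the class, its method names, and the mean-difference comparison are hypothetical choices, not a settled design. The clock is injectable so it can be tested without waiting in real time.

```python
import statistics
import time
from typing import Callable, List, Optional


class ReactionTimer:
    """Measures reaction time from the moment a prompt appears (hypothetical helper)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        # time.monotonic is unaffected by system clock changes.
        self._clock = clock
        self._shown_at: Optional[float] = None

    def prompt_appeared(self) -> None:
        self._shown_at = self._clock()

    def sign_recognized(self) -> float:
        """Return seconds elapsed since the prompt appeared."""
        if self._shown_at is None:
            raise RuntimeError("no prompt is currently shown")
        elapsed = self._clock() - self._shown_at
        self._shown_at = None
        return elapsed


def mean_improvement(pre: List[float], post: List[float]) -> float:
    """Average reaction time saved after training (positive means faster)."""
    return statistics.mean(pre) - statistics.mean(post)


# Usage with a fake clock standing in for real time.
ticks = iter([10.0, 11.5])
timer = ReactionTimer(clock=lambda: next(ticks))
timer.prompt_appeared()
print(timer.sign_recognized())
```

A mean difference is only the simplest comparison; with more players, per-sign or per-player comparisons would probably say more.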

Based on this feedback, we will do some rapid prototyping next week.

Tech Update

Unity – Python Communication

Two-way communication between Unity and Python has been established, and our programmers are now building an API layer on top of it so that it is easier for multiple programmers to use.
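The log doesn’t say which transport the Unity–Python link uses, so the sketch below assumes a TCP-style socket and shows one way a shared API layer could look on the Python side: every message is a JSON object with a `type` and a `payload`, prefixed by a 4-byte big-endian length so the receiver knows where each message ends. The function names and message fields are hypothetical. The Unity (C#) side would need matching framing code, not shown here, and both sides must agree on byte order.

```python
import json
import socket
import struct
from typing import Any, Dict

HEADER = struct.Struct(">I")  # 4-byte big-endian unsigned payload length


def send_message(sock: socket.socket, msg_type: str, payload: Dict[str, Any]) -> None:
    """Serialize a typed message as JSON and send it with a length prefix."""
    body = json.dumps({"type": msg_type, "payload": payload}).encode("utf-8")
    sock.sendall(HEADER.pack(len(body)) + body)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; recv() may return fewer than requested."""
    chunks = []
    while n > 0:
        chunk = sock.recv(n)
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def recv_message(sock: socket.socket) -> Dict[str, Any]:
    """Read one length-prefixed JSON message."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return json.loads(_recv_exact(sock, length).decode("utf-8"))


# Usage: a connected socket pair stands in for the Unity <-> Python link.
a, b = socket.socketpair()
send_message(a, "gesture", {"label": "A", "confidence": 0.9})
print(recv_message(b))
a.close()
b.close()
```

Length-prefixing avoids the usual stream-socket problem of one `recv` returning half a message or two messages glued together, and the `type` field gives other programmers a single place to route new message kinds.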

Gesture Recognition Demo

We’ve trained our own machine learning (ML) model for gesture recognition. We started with four simple gestures, then collected data for 24 letters of the alphabet using around 6,000 images. The model’s accuracy is not high enough yet, but it gives us a general idea of what is possible and looks promising, provided we collect more data from a variety of sources and keep improving the model.
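Our current model is trained on images. As a point of comparison, a lighter alternative could classify hand landmarks instead, which is what Google’s MediaPipe hand tracker (mentioned above) outputs: 21 points per hand, with the wrist at index 0. The sketch below is an assumption, not our model: it normalizes landmarks so the result does not depend on where the hand is in the frame or how far it is from the camera, then labels a new pose by k-nearest neighbors over labeled examples. Only (x, y) coordinates are used for simplicity.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

# Assumed input: (x, y) landmark points with the wrist first, as in MediaPipe Hands.
Landmarks = Sequence[Tuple[float, float]]


def normalize(landmarks: Landmarks) -> List[float]:
    """Move the wrist to the origin and scale so the farthest point is at distance 1."""
    wx, wy = landmarks[0]
    shifted = [(x - wx, y - wy) for x, y in landmarks]
    scale = max(math.hypot(x, y) for x, y in shifted) or 1.0
    return [c / scale for point in shifted for c in point]


def knn_predict(sample: Landmarks, training: List[Tuple[Landmarks, str]], k: int = 3) -> str:
    """Label a pose by majority vote among its k nearest training examples."""
    query = normalize(sample)
    dists = sorted((math.dist(query, normalize(lm)), label) for lm, label in training)
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


# Usage with toy 3-point "hands" instead of full 21-point landmarks.
training = [
    ([(0, 0), (0, 1), (1, 1)], "open"),
    ([(3, 3), (3, 4), (4, 4)], "open"),
    ([(0, 0), (0.2, 0.1), (0.1, 0.2)], "fist"),
]
print(knn_predict([(5, 5), (5, 7), (7, 7)], training, k=1))
```

Because positions and scale are normalized away, images of the same letter taken at different distances collapse onto similar feature vectors, which may reduce how much varied data is needed; rotation and left versus right hands are not handled here.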