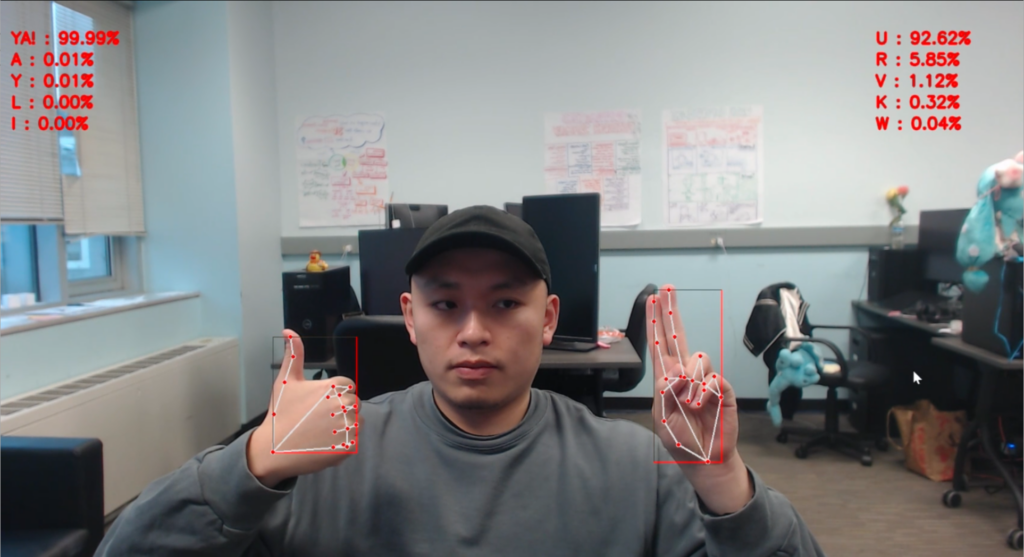

Tyler Yang has been working on our sign machine learning model since week one. As we approach the half, let’s see what results we’ve got.

Phase I – Static Gestures

What’s the process for building our static sign ML model?

- Data Collection and Preparation: We extracted and recorded the normalized landmarks from Google MediaPipe for each sign. For now we have around 1K data for each of the letters we have, each of them with 21*2 coordinate values as features.

- Choosing and Training Model: In this case, we chose a supervised learning neural network model for training, which is easy to build using Tensorflow and Keras. The training time depends on how I construct for the model, the average time is about 4-6 minutes per training.

- Evaluating and Parameters Tuning: This is the part that’s time consuming. At the beginning of the process, I encountered huge losses and low accuracy in similar signs, e.g. m&n, d&x, u&r, o&c. We continued retraining by recording the data with better quality, diversity and quantity, adjusting the hyperparameters such as epochs, batch sizes and the number of layers. Eventually we have a 98% accuracy model up and running.

What challenges have you encountered when training the model?

- The environment setup, the first week I’ve been tackling numerous version compatibility issues between opencv, python and mediapipe.

- We had a feature for detecting the closest hand in front of the camera, but since we don’t have a depth camera, I tried to use the z coordinates from MediaPipe, which performed really unstable when making signs. I also tried an another approach called Monocular depth estimation, which is a computer vision task that involves predicting the depth information of a scene from a single RGB image. However, that’s super computationally-INTENSIVE, and I was about to try that on the GPU, but again the environment setup and the version of Tensorflow will be again a super long process, so we kicked that feature out.

- At the beginning the camera loading time is so slow that it’s really frustrating and time wasting when playtesting, so we then improved that, and it worked out pretty well.

Is there any improvements and future prospects?

- The potential improvements on this model we will be focusing on, if we have time, is the data-feeding part. We’re trying to collect more data from different hands size, different hand shapes and we might consider finding an expert to help out for the data. To accomplish this, I was thinking about setting up a database and maybe send the data collecting module for experts to collect data, but as far as it’s working for the game we have right now, that’s kinda out of scope for this semester.

- The future prospect for this is mentioned in quarters by some of the faculties, I believe it’s Steve, Jon, Bryan and Dave. It’s a great platform for BVW, with just using a webcam.

Phase II – Motion Detection

Why is there a second phase for motion detection?

For better game experience, we are now trying to include movement signs. Among alphabets, there’re two letters J and Z with a motion when signing them. By integrating motion detection, we’ll be able to introduce more simple words and phrases other than letters.

What’s your approach when dealing with motion detection?

Currently, we have 2 approaches experimenting, LSTM (Long Term Short Memory) and DTW (Dynamic Time Warping)

The first method is building a LSTM model for detecting motion signs. LSTM is a recurrent neural network (RNN) which is capable of learning order dependence in sequence prediction problems. The advantage of using LSTM for this project is that it’s a similar structure with the static sign tracker, and easier to integrate to our current project. However, the data we need is way lot more compared to the static poses, since we need to record the landmarks from each frame for a number of sequences, and I tested out that it’s not as stable as expected, maybe is the data or maybe it’s almost there but just needs more adjustment.

The second method is using an approach called Dynamic Time Warping, which is widely used for computing time series similarity. The upside of this approach is that it’s faster in data collecting, and it’s pretty good when testing out, but the down side of this is that it’s more code, and having harder integration into the gameplay and game system we have now. We’re still testing the stability and accuracy of this approach, and we might go for this one if the adjustment is ready in-time and the integration is further solved.

The potential third approach is to record “sequence static signs” inside the model we have now, and match the “(static) sequences” to pass out the letter. Or simply ask the player move their finger in a fix track generating in Unity to finish the sign. Pros: Easy. Cons: Bad extension, potentially mess up data, and follow fix track in Unity might be frustrating and results bad gaming experience.