TACIT Post Mortem

Introduction and overview of the project

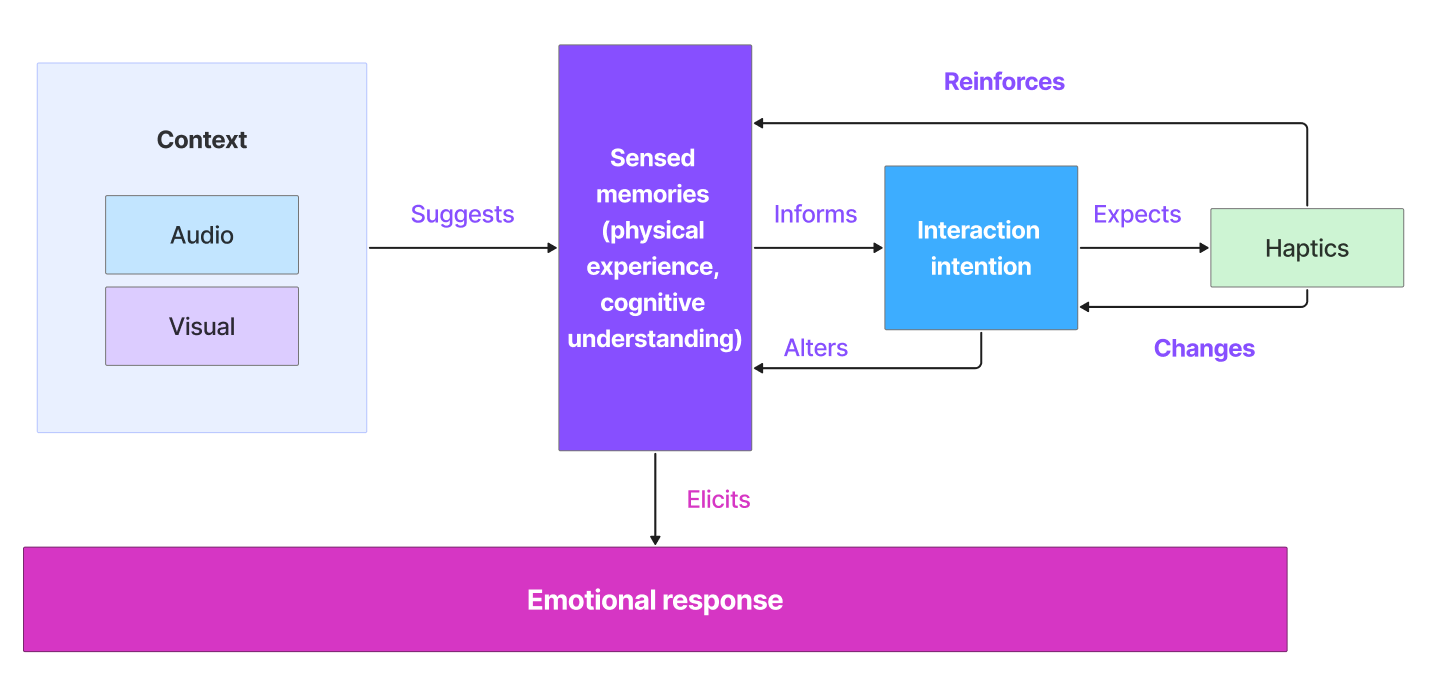

TACIT started with a dream of adding tactile feedback to Jing’s BVW VR monkey climbing game. As a pitch project, we recruited more members: Jack, Alex, and Yufei (and Michael, who did not join immediately). Discussion and outreach to SMEs and faculty yielded our initial assumption that haptics cannot work without supporting context. We therefore decided to do a tech exploration of how haptics combined with context (audio and visual) can elicit emotional responses, experimenting from the bottom up with individual haptic moments and then stringing them together to create an emotional journey.

Deliverable

Our deliverables converged on two main things:

- A written record of everything we discovered in the exploration phase: our TACIT documentation, and,

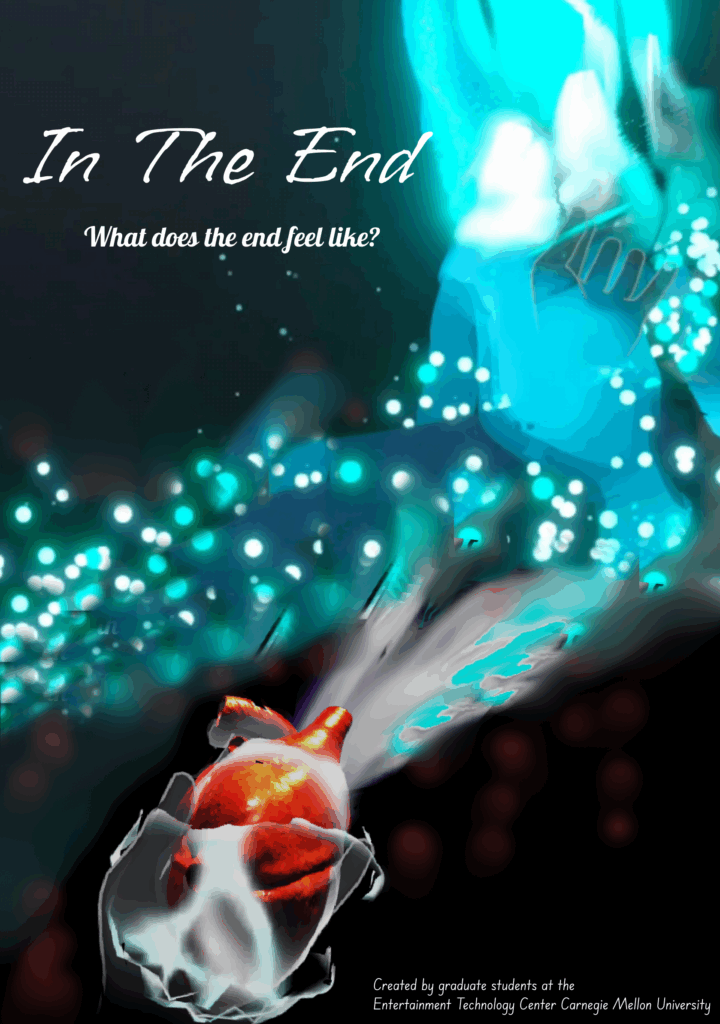

- The main experience we built later in the semester: In The End, a cohesive 10-minute VR narrative experience.

Technology

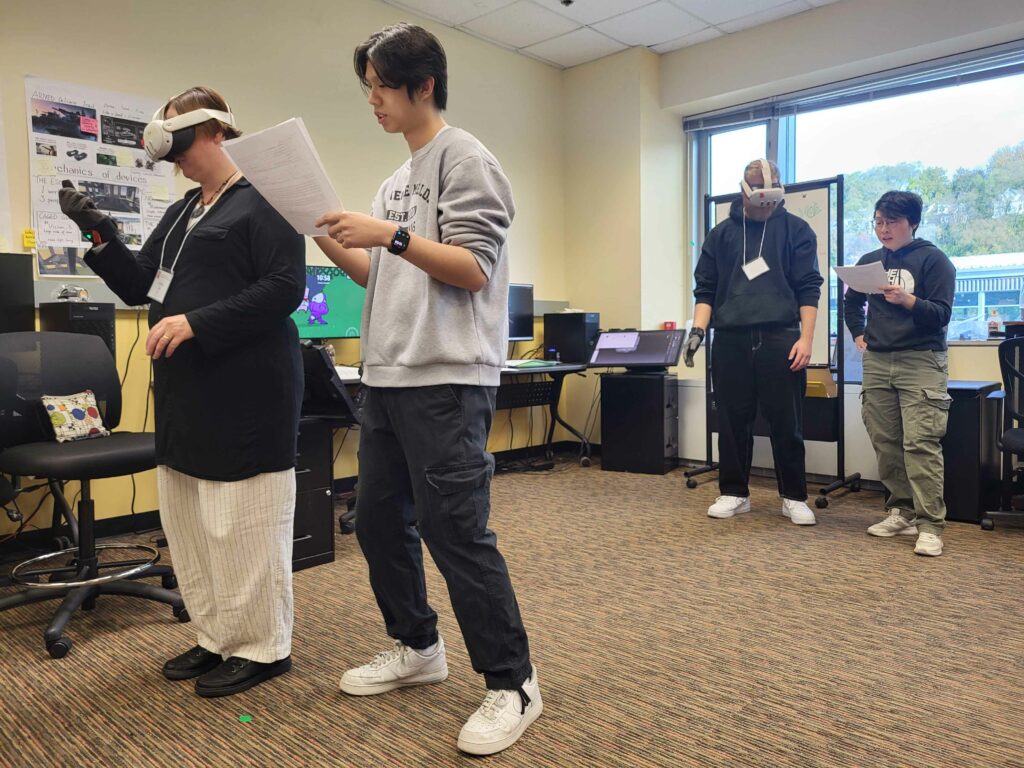

We used the bHaptics gloves, which we chose for their comprehensive developer support, easy-to-fine-tune haptic actuators, and low price compared to the alternatives. We knew from the start that the gloves could not produce force feedback or temperature sensations. Still, we were determined to experiment and see whether those sensations were truly out of reach.

Exploration phase

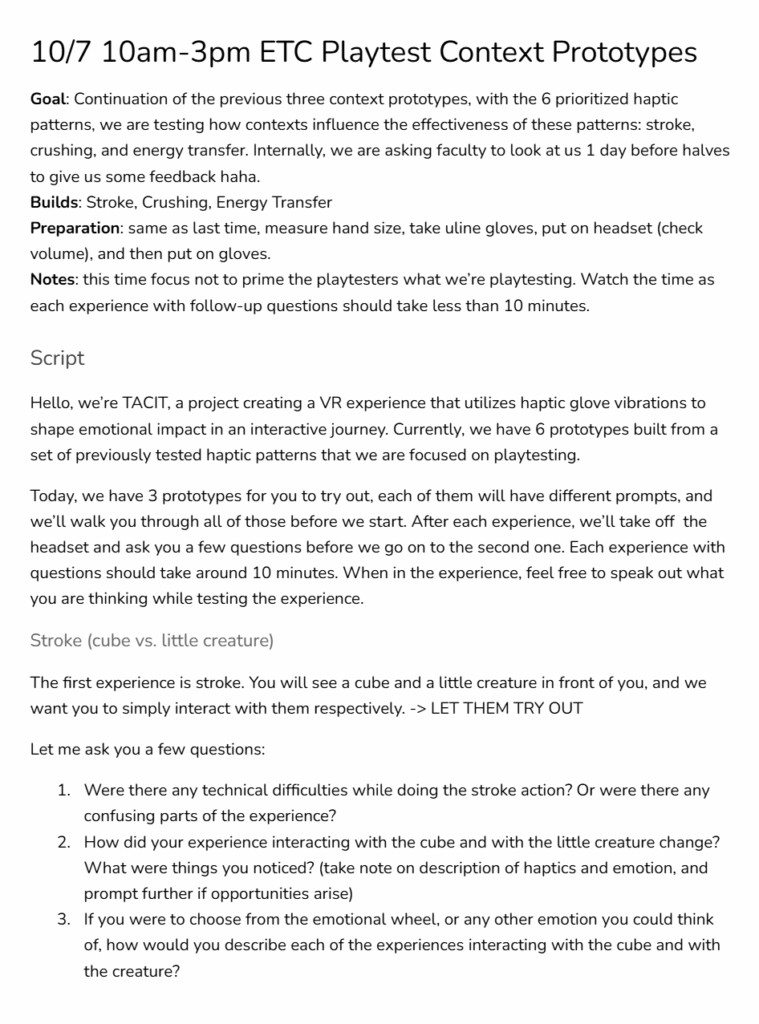

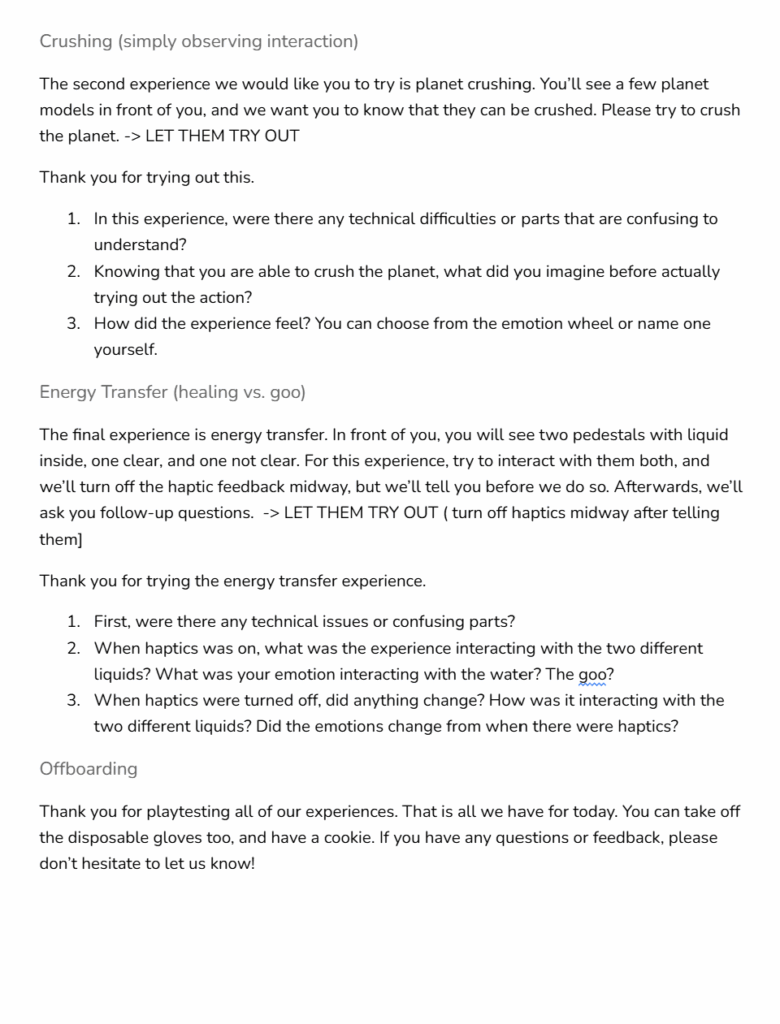

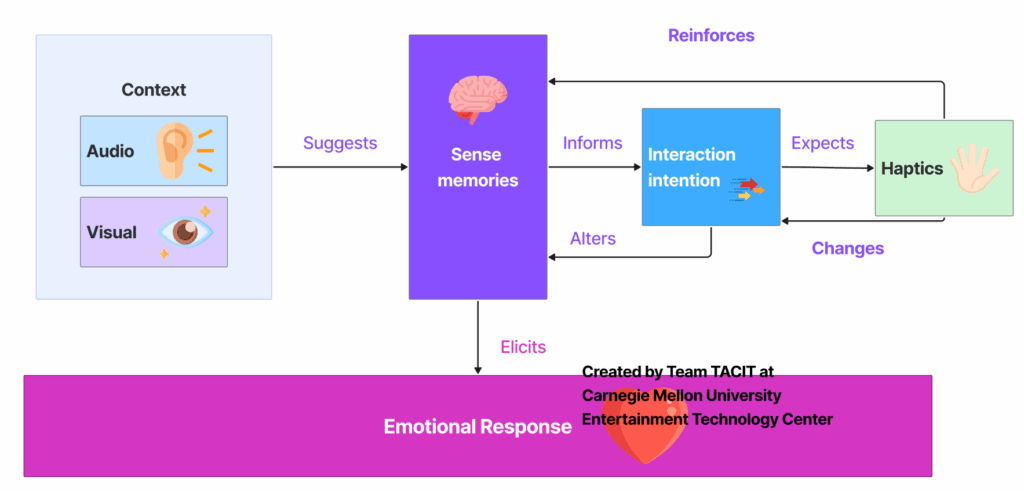

These informed our exploration in the first half of the semester. In the experimentation phase, we designed individual haptic patterns in bHaptics Designer and tested what the patterns alone led test participants to imagine. After that, we paired the patterns with different audio and visual contexts in VR to test how changing the context changes the emotional response to the same pattern. The following diagram summarizes our conclusions.

Final experience development phase

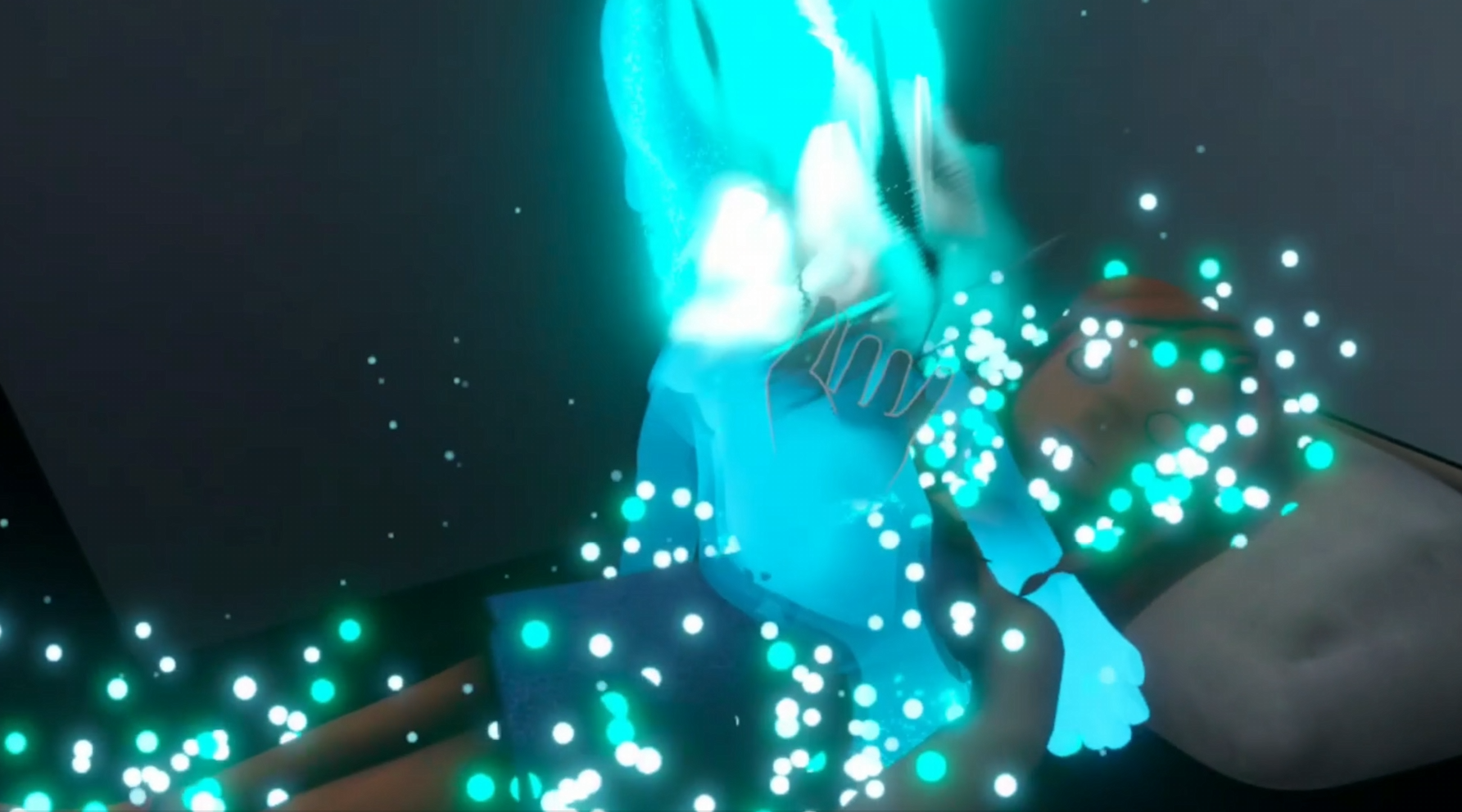

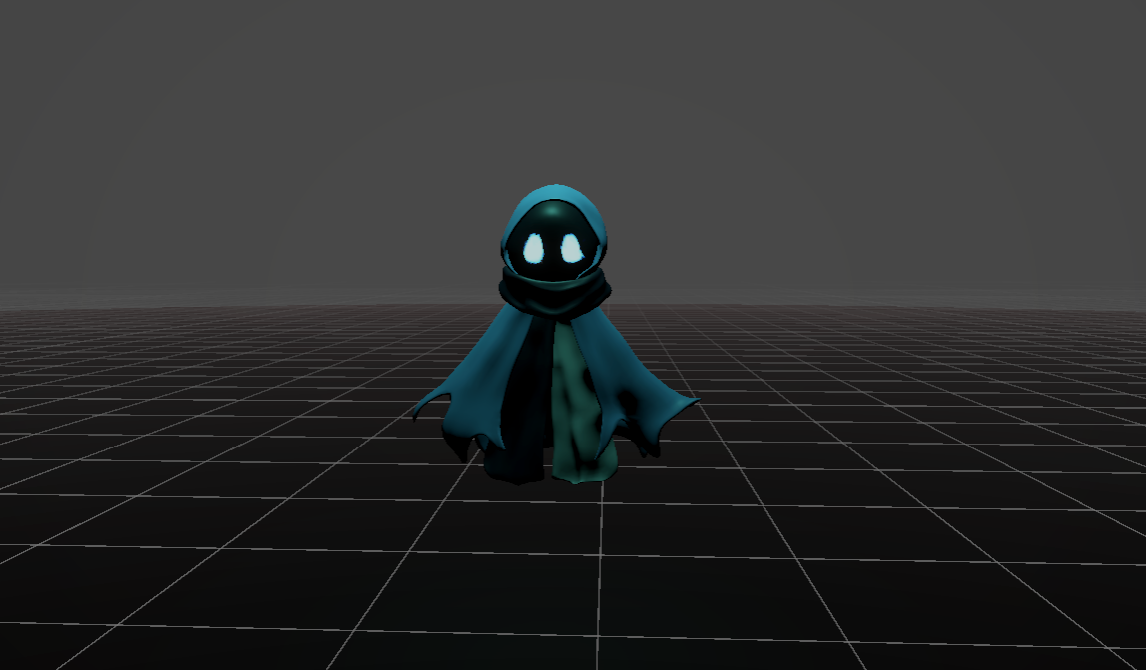

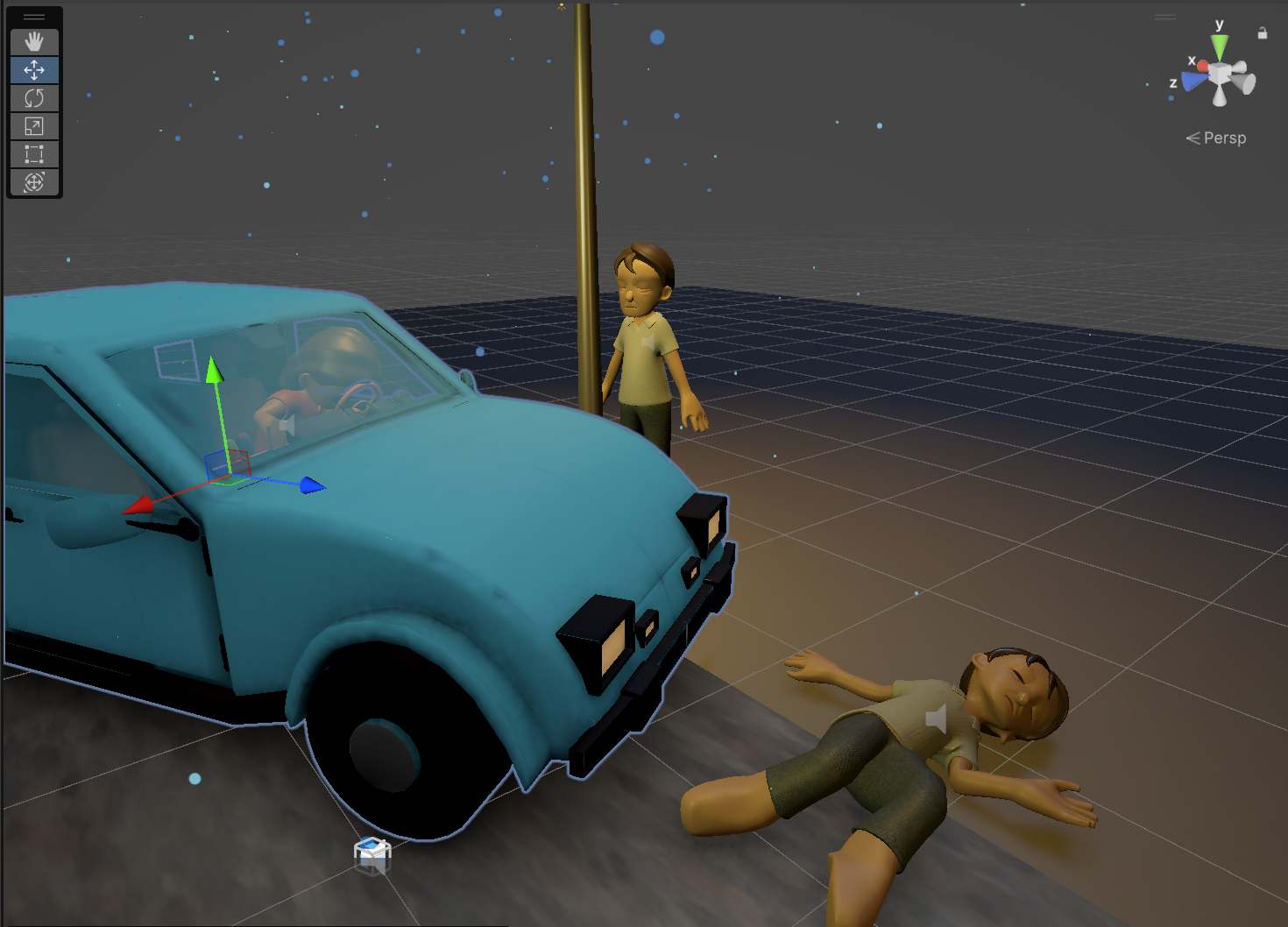

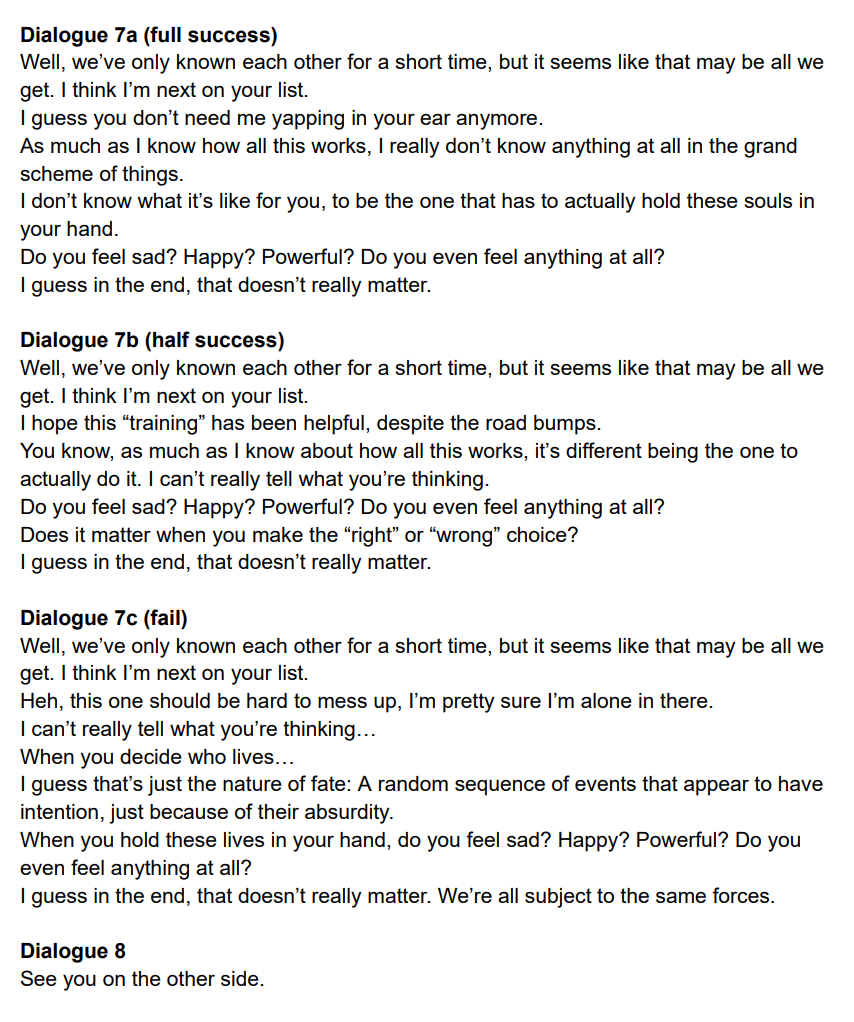

In the final experience development phase, we created a narrative experience in which players become Death and release people’s souls by crushing their hearts, building to an emotional moment where they must take the life of the companion who has accompanied them on their journey. We zoomed in on the heartbeat and crushing haptic patterns to discover what context (animation, sound, and VFX) is needed to support the delivery of this haptic moment. We also tried to convey different final moments before death through distinct heartbeat patterns (weak, arrhythmic, steady, or intensifying), which reveal who is destined to die.
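To make the four heartbeat patterns concrete, here is a minimal sketch of how they could be described as pulse schedules. It is not our implementation and does not use the bHaptics SDK or Designer format; the function name `heartbeat_pattern`, the intervals, and the intensity values are all assumptions chosen for illustration.

```python
import random
from typing import List, Tuple

Pulse = Tuple[float, float]  # (start time in seconds, intensity from 0.0 to 1.0)


def heartbeat_pattern(kind: str, beats: int = 8, seed: int = 0) -> List[Pulse]:
    """Return a hypothetical pulse schedule for one heartbeat pattern.

    All timing and intensity numbers are illustrative assumptions,
    not values measured or tuned in the project.
    """
    rng = random.Random(seed)  # seeded so the arrhythmic pattern is repeatable
    pulses: List[Pulse] = []
    t = 0.0
    for i in range(beats):
        progress = i / max(beats - 1, 1)  # 0.0 at the first beat, 1.0 at the last
        if kind == "weak":
            interval, intensity = 1.2, 0.25  # slow and faint
        elif kind == "steady":
            interval, intensity = 0.8, 0.6  # even rhythm, moderate strength
        elif kind == "arrhythmic":
            interval = rng.uniform(0.4, 1.4)  # irregular gaps between beats
            intensity = rng.uniform(0.3, 0.8)  # irregular strength
        elif kind == "intensifying":
            interval = 1.0 - 0.5 * progress  # beats get closer together
            intensity = 0.3 + 0.7 * progress  # and stronger
        else:
            raise ValueError(f"unknown pattern: {kind}")
        pulses.append((round(t, 3), round(intensity, 3)))
        t += interval
    return pulses
```

A schedule like `heartbeat_pattern("intensifying")` could then be handed to whatever playback layer drives the glove actuators, with audio and visual cues synchronized to the same timestamps.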

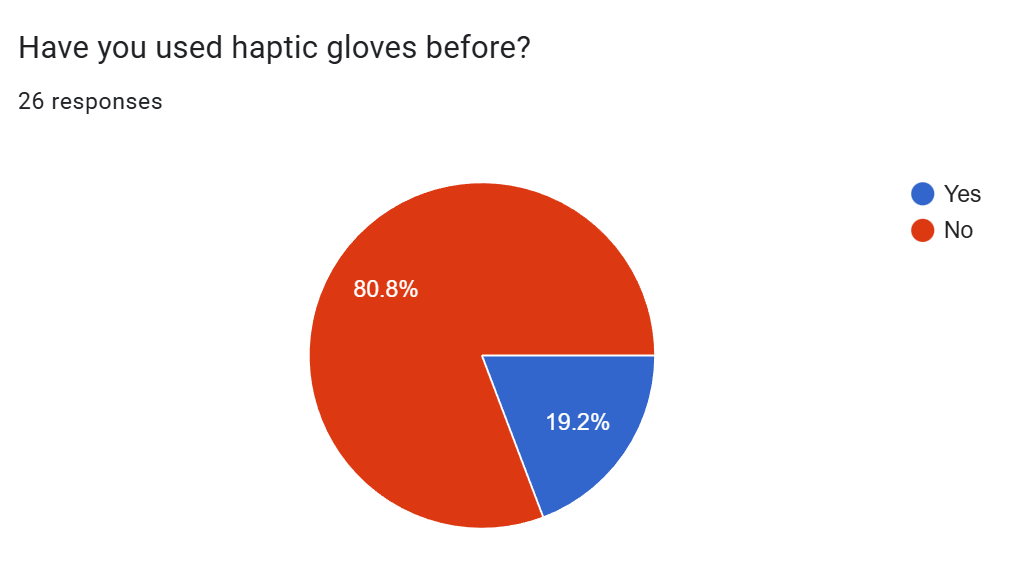

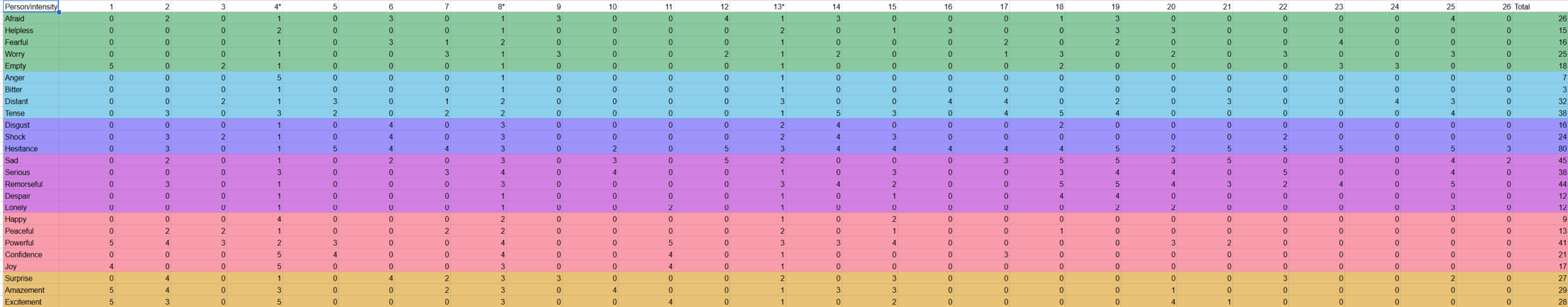

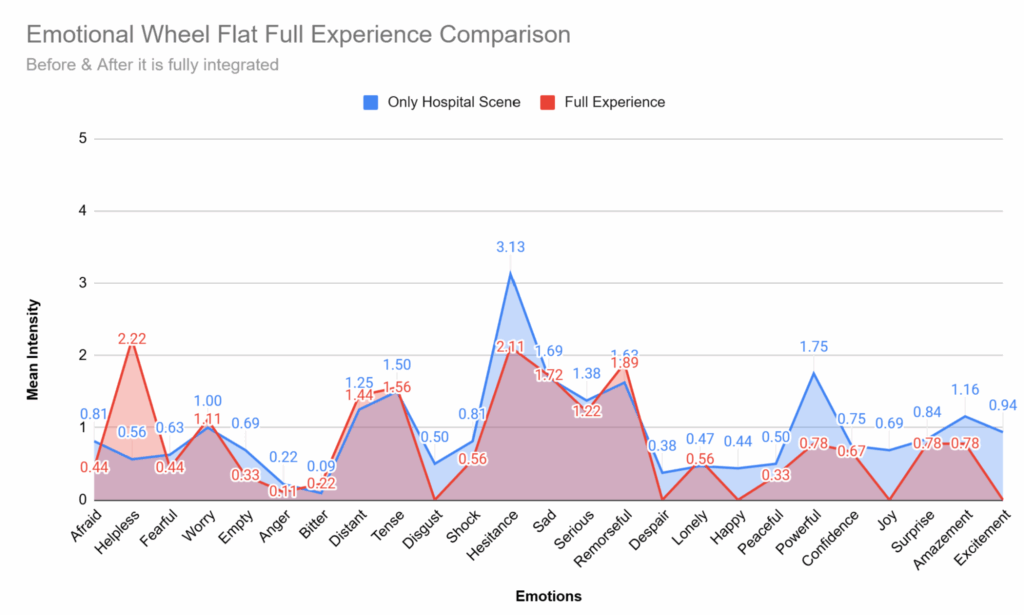

During testing, we used the PANAS scale along with the emotion wheel to test whether this haptic moment (crushing a heart with different heartbeat patterns), in the context of the entire narrative experience, produced our intended emotions of remorse and sadness. The attached comparison contrasts playing only one scene with playing the entire experience.
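For readers unfamiliar with PANAS, the sketch below shows how a single questionnaire is typically scored: 20 adjectives are each rated from 1 to 5, and the ten positive and ten negative items are summed separately. The function name `score_panas` and the dictionary input format are assumptions for illustration, not the tooling we used.

```python
from typing import Dict, Tuple

# The ten positive-affect and ten negative-affect items of the standard PANAS.
POSITIVE_ITEMS = (
    "interested", "excited", "strong", "enthusiastic", "proud",
    "alert", "inspired", "determined", "attentive", "active",
)
NEGATIVE_ITEMS = (
    "distressed", "upset", "guilty", "scared", "hostile",
    "irritable", "ashamed", "nervous", "jittery", "afraid",
)


def score_panas(ratings: Dict[str, int]) -> Tuple[int, int]:
    """Return (positive_affect, negative_affect), each between 10 and 50.

    `ratings` maps each item name to a 1-5 rating from one participant.
    """
    for item in POSITIVE_ITEMS + NEGATIVE_ITEMS:
        value = ratings.get(item)
        if value is None or not 1 <= value <= 5:
            raise ValueError(f"missing or out-of-range rating for {item!r}")
    positive = sum(ratings[item] for item in POSITIVE_ITEMS)
    negative = sum(ratings[item] for item in NEGATIVE_ITEMS)
    return positive, negative
```

Scoring each participant once per condition (single scene and full experience) gives two pairs of totals that can be compared directly.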

The experience received highly positive feedback and is being submitted to conferences post-semester. We also created comprehensive documentation on haptic experience design to serve as a reference for future designers.

Team members

- Winnie Tsai – Producer, UX Research, Prototyper

- Michael Wong – Sound Designer, Narrative Design, Rigging Artist

- Jack Chou – Gameplay Programmer, Game Designer

- Jing Chung – Gameplay Programmer, System Architecture, Technical Artist

- Alex Hall – Environment Art, Narrative Design

- Yufei Chen – 3D Character Art, 2D Art

What went well

Comprehensive Bottom-Up Exploration and Foundational Contribution

Our commitment to the bottom-up approach defined the project’s success. We started with the smallest possible unit (individual haptic parameters) and built upwards (adding context, then narrative structure). This process not only led to our final experience but also yielded significant foundational deliverables:

- We created a library of haptic patterns and an analysis of their potential.

- We found that our greatest design insight was that the link between haptics and emotion lies in leveraging each individual’s sense memories.

- We compiled all our prototypes into a Haptics Playground, serving as a valuable resource and reference for future designers and ETC projects working with these gloves.

Sustained and Iterative Playtesting Schedule

Our discipline regarding playtesting was essential to achieving a playable, polished game by the end of the semester. We participated in every available ETC playtest opportunity, with weeks 1, 2, and 10 as the only exceptions. This rapid, constant iteration allowed us to:

- Understand how players perceive haptics regardless of their prior gaming or VR experience.

- Immediately incorporate user feedback into the game design, programming, and art assets.

- Rapidly refine the complex interaction moment (the heart crushing) and calibrate the supporting audio/visual contexts.

Successful Applied Case Study

While the cognitive and emotional connection to haptics is already a researched area, our project successfully served as a case study in applying these principles to an emotionally rich VR game context. We defined a pipeline for using haptics as the primary vehicle for accumulating emotional weight, validating the hypothesis that carefully designed haptic moments, repeated within a narrative structure, can deliver a complex and moving emotional climax.

Achieving Complex Emotional Nuance

We successfully delivered on the ambitious goal of evoking complex emotions (remorse, sadness, bittersweet acceptance) during the climax through finely designed heart haptic moments supported by audio and visuals. Furthermore, we demonstrated effective emotional storytelling through art direction: the frozen diorama style, initially chosen for technical expediency, inadvertently created a feeling of distance that reinforced the narrative themes of Death.

What could have been better

Interdisciplinary Communication and Critical Asset Dependency

The biggest challenge we faced was the breakdown of interdisciplinary communication and differing expectations between roles, which led to a severe bottleneck specifically around the delivery of 3D models for the dioramic scenes.

- Early Phase Misalignment: In the exploration phase, we initially struggled with role definition. Programmers expected artists to provide conceptual direction for haptic prototypes, while artists expected programmers to define the technical requirements and constraints first. We eventually solved this by establishing a unified team vision before delegating tasks, an adjustment that cost us early time.

- Late Phase Drag and Dependency: In the second half of the semester, the production schedule stalled due to asset dependency. While programming (gameplay logic and systems), narrative design, and sound design were largely completed or on schedule, the team faced a substantial delay waiting for many 3D model assets, because individual life events affected the character and environment art pipeline. This was a serious problem because these models were essential for building the static dioramic scenes that frame the interaction. We were not proactive enough in identifying this critical bottleneck and reallocating resources, which resulted in:

  - Slower testing and iteration cycles in the second half.

  - A final experience where the 3D models were not as polished as desired, as the rest of the team had to scramble late in the process to assist, instead of the original artists having the necessary time for refinement.

Sacrificed Agency for Narrative Flow

Due to time constraints resulting from the art asset bottleneck and difficulties in naturally conveying the companion’s emotional connection within the limited scene count, we compromised on player agency. We had to rely on direct instructions instead of subtle indirect control for certain player actions. As a result, players reported a lack of agency and a disrupted narrative flow, which prevented the emotional climax from reaching its full potential.

Conclusion

This semester’s project reinforced the importance of establishing clear guidelines and research foundations for creating meaningful experiences. Starting from the ground level with haptic exploration, we gained deep domain knowledge and discovered the fundamental truth that haptics require context. This insight prompted us to investigate the minimal viable context needed, working from the most basic, pure starting point.

By building incrementally upon this core foundation without losing sight of our primary focus, we successfully maintained design coherence throughout iteration, which was a critical factor in completing our final experience. Equally important was effective team communication. When everyone shares a common goal and stays on the same page, each team member can leverage their expertise most efficiently, ultimately elevating the final product.

Next step plan

The primary goal of the next steps is to address the critical feedback regarding player agency and the emotional connection with the companion, which were constrained by the semester’s timeline and asset dependency issues. This plan involves expanding the experience and formalizing the project’s documentation and external presentation.

Experience Expansion and Redesign

This phase focuses on addressing the narrative and design flaws identified in the final build.

- Expand Scene Count to Five (Original Plan): Increase the number of scenarios from three to five. This will provide the necessary space to build the player-companion relationship incrementally and allow the climax to feel more earned.

- Strengthen Companion Relationship: Integrate new narrative moments and subtle dialogue throughout the expanded scenes to develop the companion’s personality, goals, and relationship with the player prior to the emotional climax.

- Improve Player Agency and Tutorial: Redesign the initial tutorial scene to rely on indirect control and environmental cues rather than direct, instructional dialogue. The player should learn the mechanics (heartbeat perception and crushing) through subtle discovery, thereby increasing their feeling of agency and narrative immersion.

- Asset Polish and Integration: Dedicate focused time to complete and polish the missing or rushed 3D models that were the primary bottleneck in the initial build. Full polish of these assets is required before the final five-scene experience can be fully integrated and tested.

Post-Semester Submission and Presentation

This phase leverages the current state of the project for professional exposure.

- Conference Submission: Formally submit the current 10-minute VR experience, In The End, along with the comprehensive TACIT Documentation, to relevant conferences (e.g., SONA, SIGGRAPH, Laval Virtual) and academic venues focused on interactive media and haptics.

- ETC Documentation Finalization: Ensure the Haptics Playground environment and the technical/design documentation are fully compiled and deposited as a resource for future ETC student teams.

SONA Festival Submission

Speaking of festivals, in the last week we submitted our experience, which we now call In The End, to SONA, an immersive film festival.

Synopsis

In The End is an immersive VR narrative structured around haptic interaction as its primary storytelling language. The participant inhabits the role of Death, guided through a sequence of still, diorama-like scenes depicting the final moments of someone’s life, culminating in the loss of a trusted companion.

Using haptic gloves, participants feel distinct heartbeat patterns (weak, arrhythmic, steady, or intensifying) that reveal who is destined to die. Dialogue and audiovisual context frame each scene, while touch carries emotional meaning. The act of releasing a soul is felt as resistance or surrender, followed by lingering residual sensations that communicate consequence.

The experience invites reflection on presence, responsibility, and what it means to feel the end of a life—one heartbeat at a time.

Poster