Welcome back! This week, we had our Quarters walkarounds, where faculty provided feedback to help us refine our approach to interactions and toolkit usability. A follow-up discussion with Brenda Harger gave us deeper insight into narrative design and engagement strategies in CAVERN’s environment. On the technical side, we made a major breakthrough in stereoscopic rendering, but Vive Tracker integration remained highly unstable. Finally, we planned toolkit 1.0 for next week’s visit to South Fayette.

Refining How We Communicate Our Project During Quarters

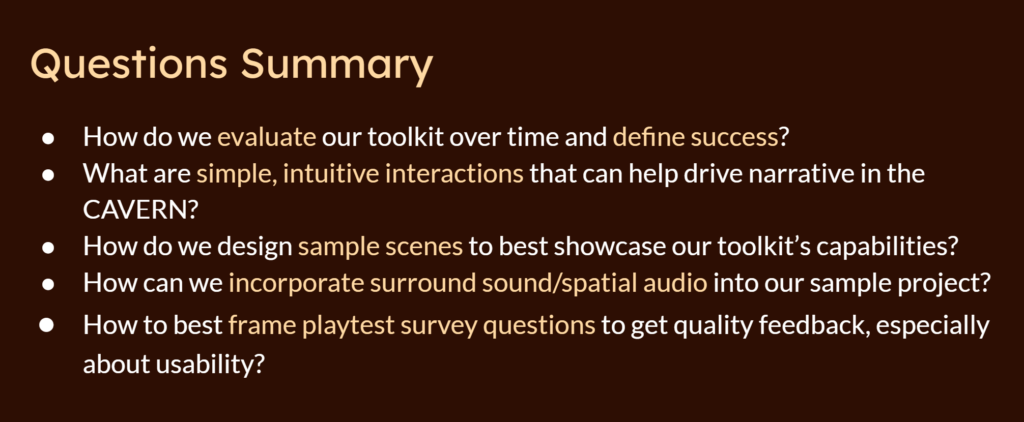

This week, we had our first major round of faculty feedback through Quarters walkarounds. During these sessions, faculty rotated between project teams, offering guidance and helping us evaluate our initial direction. This was also the first time we formally presented our project since last semester’s pitch, which meant reassessing how we communicate our goals.

We discovered that faculty had differing expectations for our toolkit. Some envisioned a fully no-code, drag-and-drop system, while we had always planned for a toolkit that still requires coding for non-CAVERN-specific interactions. This raised an important question: How do we define accessibility in our toolkit? Our approach assumes that by designing for high school students as a less technical user base, we will also enable ETC graduate students, regardless of coding experience, to create impactful experiences in CAVERN.

Another key realization was that the term “novice” can mean many different things—a user could be new to programming, game development, or CAVERN itself. Faculty feedback helped us recognize that we need to clearly define our target audience and ensure that our documentation and onboarding process supports different levels of experience.

Exploring Non-Tech Multiplayer Interaction Techniques with Brenda

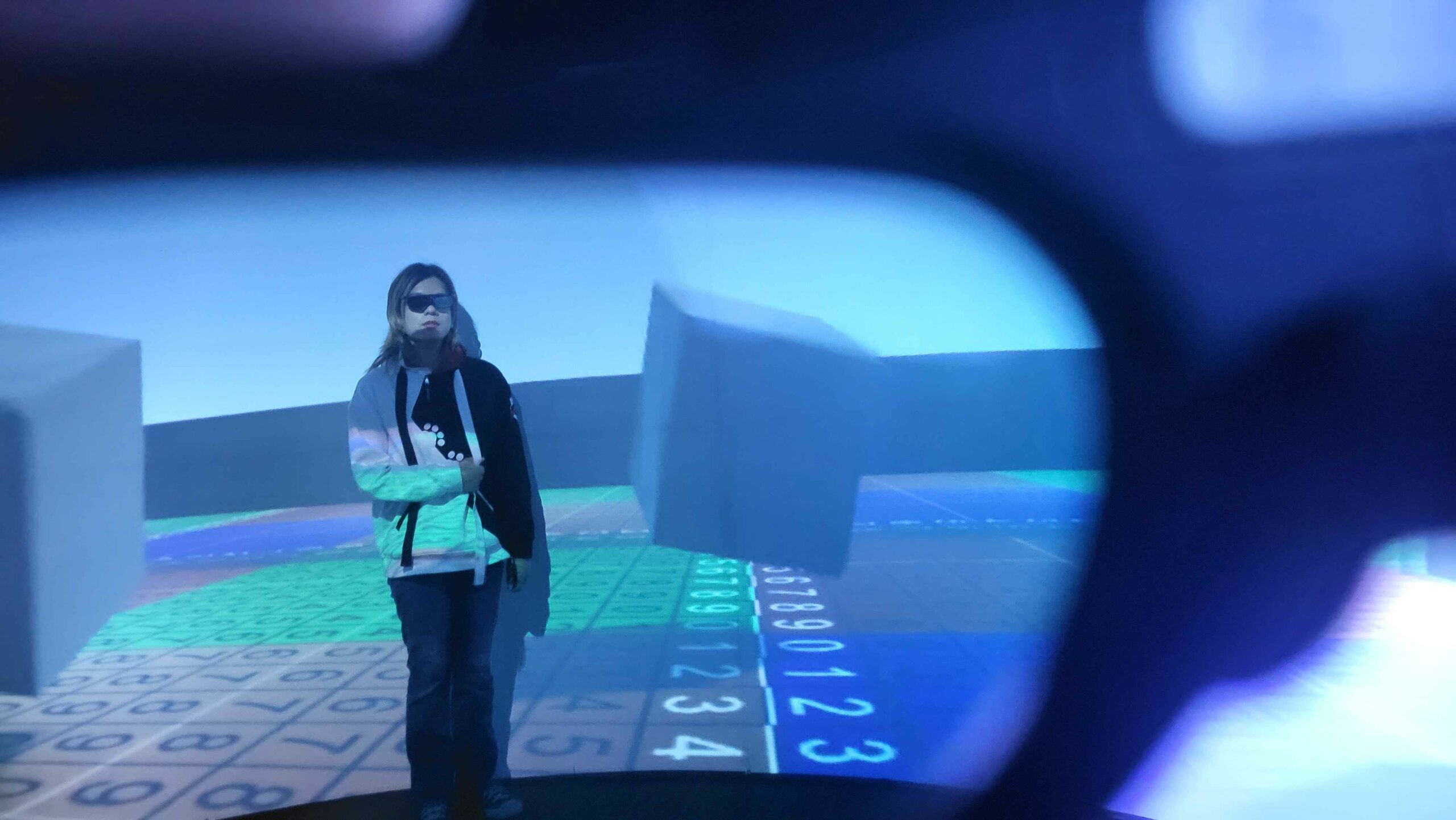

After Quarters, we met in CAVERN with Brenda Harger, the ETC professor who teaches Improvisational Acting, to explore in person how users engage with the space and how interaction design could be made more intuitive.

Just as interactive storytelling games for children, like Going on a Bear Hunt, are fun and engaging without any technology or props, Brenda encouraged us to use the wide, 20-foot play area that CAVERN provides to create multiplayer social experiences. Clapping, stomping, and following movements are simple interactions that go beyond the digital screen or complex controls, yet are perfect for the space.

In addition, CAVERN’s curved wall creates opportunities for moments of surprise: objects can appear from behind or wrap around the players’ periphery, and a sound cue can subtly guide attention without requiring direct instructions. Minimizing explicit verbal guidance and letting players discover mechanics naturally can make interactions feel more immersive and intuitive.

Sometimes, simple environmental cues and physical actions outside of tech solutions can be just as compelling as complex mechanics. This conversation helped us rethink how to blend physical actions with digital interactions to create a seamless, intuitive experience inside CAVERN.

Camera 1.0 – Achieving Stereoscopic Rendering (Somewhat)

With the success of last week’s monoscopic camera, this week was the time to start bringing the world into 3D by exploring stereoscopic rendering.

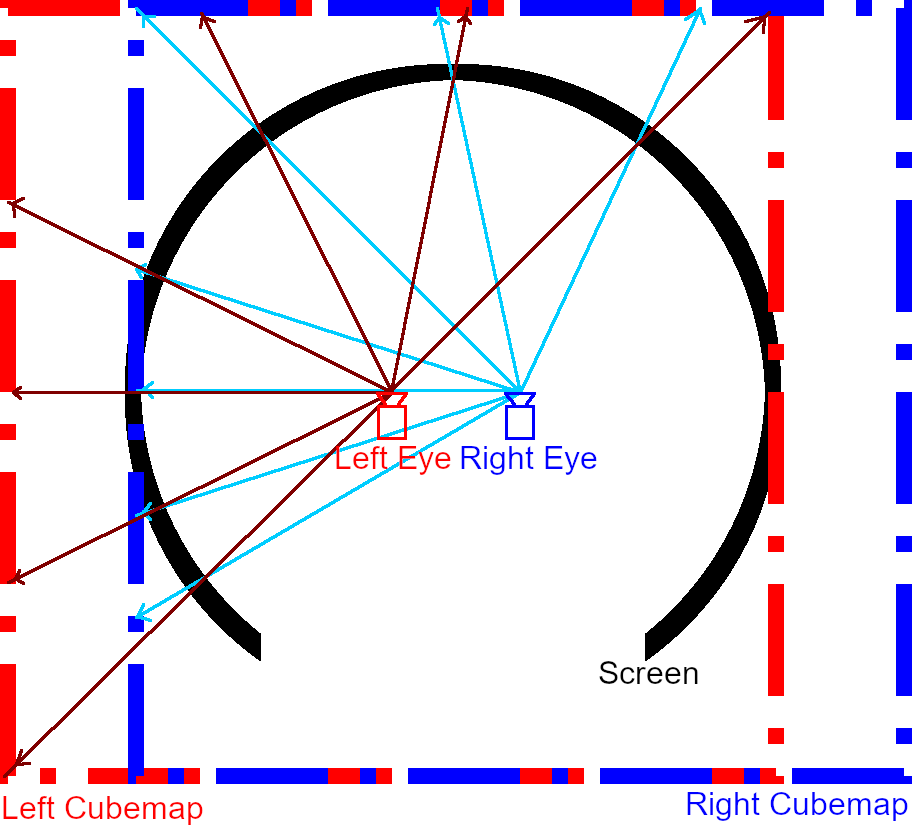

Stereoscopic rendering allows us to achieve the “popping out” effect we see when watching a 3D movie. To produce a stereoscopic view on a flat screen, we render the scene twice, once per eye with a slight horizontal offset between the two, and overlay the images on top of each other. When the player puts on 3D glasses, the glasses filter the images so that each eye sees only its own image, and the brain combines the two to perceive depth.
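
To make the “render twice” step concrete, here is a minimal Python sketch of how the two eye cameras could be placed: each is shifted half the eye separation to the left or right of the head position along the head’s right axis. The `Vec3` type, the `eye_positions` helper, the assumed 63 mm separation, and the assumption that `right` is a unit vector are all illustrative; this is not our actual Unity camera code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def scale(self, k: float) -> "Vec3":
        return Vec3(self.x * k, self.y * k, self.z * k)


def eye_positions(head: Vec3, right: Vec3, eye_separation: float) -> tuple[Vec3, Vec3]:
    """Return (left_eye, right_eye) camera positions.

    Assumes `right` is a unit vector pointing to the viewer's right; each eye
    sits half the eye separation away from the head centre along that axis.
    """
    half = eye_separation / 2.0
    left_eye = head + right.scale(-half)
    right_eye = head + right.scale(half)
    return left_eye, right_eye


# Hypothetical example: head 1.6 m above the floor, facing +z, right = +x.
left, right = eye_positions(Vec3(0.0, 1.6, 0.0), Vec3(1.0, 0.0, 0.0), 0.063)
print(left, right)
```

Each eye’s camera then renders the scene from its own position; everything else about the two renders stays identical.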

The offset between the two renders is the interpupillary distance (IPD), the distance between a person’s eyes; on average, adult humans have an IPD of about 63 mm. In CAVERN, the outputs for the two eyes are stacked vertically in the output render buffer, and specialized software overlays them when projecting onto the screen.
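
The vertically stacked layout can be illustrated with a small Python sketch: one output buffer of double height is split into a top half and a bottom half, one per eye. Which eye goes on top, the pixel-origin convention (y measured from the top), the hypothetical 1920 x 2160 buffer size, and the helper names `eye_viewports` and `stack_eyes` are all assumptions for illustration, not CAVERN’s documented format.

```python
Image = list[list[int]]  # rows of pixel values; a stand-in for a real render texture


def eye_viewports(width: int, height: int,
                  left_on_top: bool = True) -> dict[str, tuple[int, int, int, int]]:
    """Split a width x height output buffer into two vertically stacked eye viewports.

    Returns {"left": (x, y, w, h), "right": (x, y, w, h)} in pixels, with y
    measured from the top of the buffer. Which eye goes on top is an assumption.
    """
    if height % 2 != 0:
        raise ValueError("buffer height must be even to split it into two eyes")
    half = height // 2
    top = (0, 0, width, half)
    bottom = (0, half, width, half)
    if left_on_top:
        return {"left": top, "right": bottom}
    return {"left": bottom, "right": top}


def stack_eyes(left: Image, right: Image, left_on_top: bool = True) -> Image:
    """Combine two equally sized per-eye images into one top/bottom buffer."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("both eye images must have the same dimensions")
    top, bottom = (left, right) if left_on_top else (right, left)
    return [row[:] for row in top] + [row[:] for row in bottom]


# Tiny 2x2 "images": 1 marks left-eye pixels, 2 marks right-eye pixels.
frame = stack_eyes([[1, 1], [1, 1]], [[2, 2], [2, 2]])
print(eye_viewports(1920, 2160))  # hypothetical buffer size
print(frame)
```

The overlay software then only needs to know which half belongs to which eye; the renderer never has to blend the two images itself.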

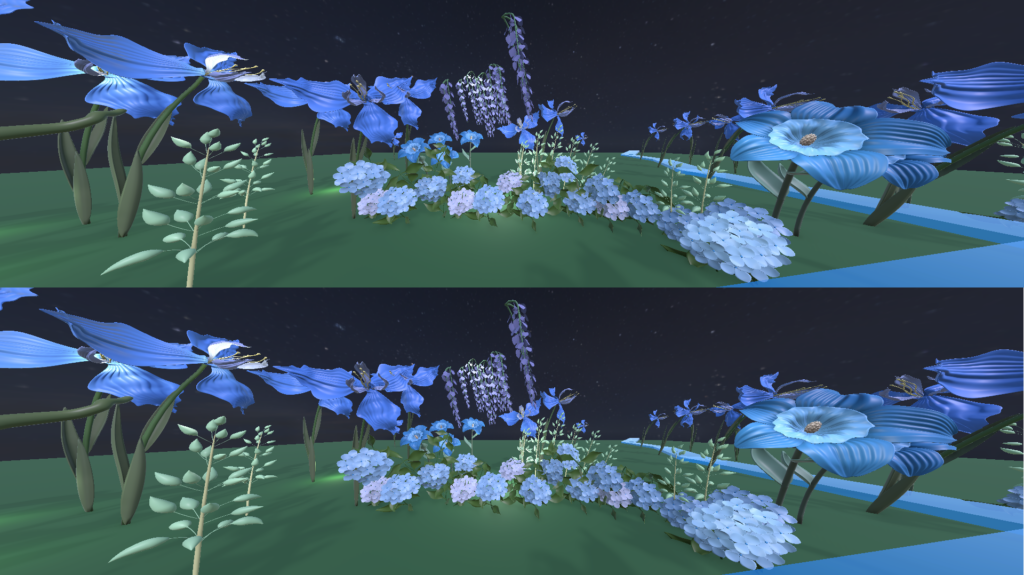

We can also approximate the effect using multiple cubemaps for stereoscopic rendering.

Finally, at 11 pm on Friday, we completed stereoscopic camera 1.0 and tested it with two users new to CAVERN. It garnered a great response: both of them could see a cube floating in mid-air, outside the screen!

However, we’ve noticed that as the screen angle (relative to the direction the viewer is facing) approaches 90°, the stereoscopic effect weakens, because the effective separation between the eyes, as seen along that part of the screen, approaches zero. Beyond 90°, the left eye’s view ends up to the right of the right eye’s view, so the perceived depth of objects is reversed. So while the solution generally works, it’s not perfect yet. To quote our exceptionally hardworking Terri, who has already done this much in a week: “Oh well, I know what to work on next week then!”
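
The falloff follows from a little geometry. The Python sketch below assumes a viewer standing at the centre of a cylindrical screen, facing 0°, with both eye cameras offset only along the head’s right axis; these are simplifications for illustration, and `effective_eye_separation` is a hypothetical helper rather than part of our camera. Projecting the eye baseline onto the screen’s horizontal direction at angle θ gives a signed separation of IPD · cos θ, which shrinks to zero at 90° and turns negative beyond it, matching the depth reversal we observed.

```python
import math


def effective_eye_separation(ipd: float, screen_angle_deg: float) -> float:
    """Signed eye separation as seen along a segment of a cylindrical screen.

    Assumes the viewer stands at the centre of the cylinder, faces 0 degrees,
    and both eye cameras are offset only along the head's right axis.
    screen_angle_deg is the angle from the viewer's forward direction to the
    screen segment (90 = directly to the side). A negative result means the
    left and right eye views have swapped sides, so depth appears inverted.
    """
    # The eye baseline is ipd * right = ipd * (1, 0, 0); the screen's horizontal
    # tangent at this angle is (cos a, 0, -sin a), so their dot product is ipd * cos(a).
    return ipd * math.cos(math.radians(screen_angle_deg))


for angle in (0, 45, 90, 135):
    mm = effective_eye_separation(0.063, angle) * 1000
    print(f"{angle:>3} deg: {mm:+.1f} mm")
```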

Debugging Vive Trackers

On the other hand, Vive Tracker integration ran into major obstacles. Although last week we successfully integrated the trackers into Unity via the unity-openvr-tracking package, this week unknown bugs caused repeated crashes of the Unity editor, and even of the entire computer upon exiting play mode.

On initial inspection, we traced the crash to an asynchronous OpenVR system function that was still being called even after play mode had exited. We tried setting breakpoints and commenting out different lines of code, but all in vain.
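
We never pinned down the root cause, so the following is only a generic illustration of this class of bug, not OpenVR’s or Unity’s API: if a background callback can outlive the session that owns its resource, it may touch released state after shutdown. A common guard, sketched here in Python with a hypothetical `BackgroundPoller` class, is to signal the worker to stop and wait for it to finish before releasing anything it uses.

```python
import threading
import time


class BackgroundPoller:
    """Hypothetical stand-in for an asynchronous tracking callback.

    The worker calls poll_fn repeatedly until stop() is called. stop() sets a
    flag and then joins the thread, so once it returns no further poll_fn
    calls can happen and the underlying resource is safe to release.
    """

    def __init__(self, poll_fn, interval_s: float = 0.005):
        self._poll_fn = poll_fn
        self._interval_s = interval_s
        self._stop_event = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self) -> None:
        while not self._stop_event.is_set():
            self._poll_fn()
            # wait() returns early if stop() is called, so shutdown is prompt.
            self._stop_event.wait(self._interval_s)

    def start(self) -> None:
        self._thread.start()

    def stop(self) -> None:
        self._stop_event.set()
        self._thread.join()  # block until the last in-flight poll has returned


# Usage: release the (hypothetical) tracking resource only after stop() returns.
resource = {"open": True, "polls": 0, "polls_after_close": 0}


def poll() -> None:
    if resource["open"]:
        resource["polls"] += 1
    else:
        resource["polls_after_close"] += 1


poller = BackgroundPoller(poll)
poller.start()
time.sleep(0.05)          # let it poll for a moment
poller.stop()
resource["open"] = False  # safe: the worker thread has already exited
time.sleep(0.05)
print(resource)
```

The ordering is the whole point: stop, wait, then release. Releasing first and stopping afterwards leaves a window where a late callback runs against a closed resource.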

Miraculously, on Friday, just as we were debating whether to include the buggy version in next week’s demo at South Fayette, and had pushed a temporary version to the main branch on GitHub, it suddenly started working as intended! We will keep investigating the problem, but for now, a working version is available!

Other Updates

Beyond the core technical breakthroughs and design discussions, we also made progress in other areas:

- Sound Sample Scene: Winnie built a test environment for spatial audio, including 2D background music and a spatialized sound effect circling the camera, which will be integrated into our initial sample scene.

- Art Development Continues: Mia and Ling continued working on finalizing models for our sample scene, ensuring they are optimized for CAVERN’s projection system. The environment is slowly and steadily taking shape.

- Planning for South Fayette Visit: Our producers scheduled the first visit to South Fayette, and we started outlining what we want to showcase and how to structure our interactions for student engagement.
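
As an illustration of the math behind a sound effect circling the camera (not Winnie’s actual implementation), the Python sketch below moves a source around a horizontal circle and reports the direction a spatializer would pan it toward. The 3 m radius, the 8-second loop, the listener at the origin facing +z, and the helper names `circling_position` and `azimuth_deg` are all assumptions.

```python
import math


def circling_position(t: float, radius: float = 3.0, period_s: float = 8.0,
                      center: tuple[float, float, float] = (0.0, 0.0, 0.0)
                      ) -> tuple[float, float, float]:
    """Position of a sound source orbiting `center` in the horizontal (x-z) plane.

    One full revolution takes period_s seconds; the height (y) stays fixed.
    """
    angle = 2.0 * math.pi * (t / period_s)
    cx, cy, cz = center
    return (cx + radius * math.cos(angle), cy, cz + radius * math.sin(angle))


def azimuth_deg(source: tuple[float, float, float],
                listener: tuple[float, float, float] = (0.0, 0.0, 0.0)) -> float:
    """Horizontal angle of the source as heard by a listener facing +z.

    0 = straight ahead, +90 = to the right (+x), -90 = to the left.
    """
    dx = source[0] - listener[0]
    dz = source[2] - listener[2]
    return math.degrees(math.atan2(dx, dz))


for t in (0.0, 2.0, 4.0, 6.0):
    pos = circling_position(t)
    print(f"t={t:.0f}s pos=({pos[0]:+.2f}, {pos[1]:.2f}, {pos[2]:+.2f}) "
          f"azimuth={azimuth_deg(pos):+.0f} deg")
```

Because the distance to the listener stays constant on the circle, only the direction changes, which makes it a clean test of whether spatialization is working.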

Next Steps for Week 4

Since we are visiting South Fayette next week, our main priority will be integrating our freshly built camera, our initial input solution, and the sample scene’s art and sound assets into the toolkit package.

Week 3 was all about refining our approach, tackling major technical challenges, and rethinking how users engage with CAVERN. With our first real playtest approaching, Week 4 will be a critical milestone in seeing how our work translates into actual user interactions.

Stay tuned for Week 4 updates!