This week is CAVERN Jam 2.0! Jam 1.0 went so well that even more people signed up this time, resulting in 13 jammers and 8 worlds! At the same time, Terri and Winnie continued integrating the Orbbec Femto Bolt into the toolkit, and it was finally released into the package at noon on Friday, just in time to create a final jam world for the CAVERN showcase! We also continued to playtest and research the CAVERN space by introducing and showcasing many CAVERN worlds to all the guests who visited throughout the week. (A Disney Imagineer came!)

Jam 2.0: Reactive Worlds

During Jam 1.0, we focused on testing toolkit onboarding time and basic interaction through Vive Trackers. This time, our main focus was to see how well our documentation and range of features supported a wide variety of creative uses for a special space like the CAVERN.

In addition, through many playtesting sessions since the start of the semester, we realized that people wanted to see a world that guests can freely interact with and influence. (“I want to be able to touch this flower, and the water underneath it will ripple.”) Therefore, our final jam theme was “Reactive Worlds” with interaction input (Vive Tracker encouraged but not mandated).

Before the event started, we made sure to add all new features and design considerations to the user documentation. We added the 3 types of UI (and the ways to think about CAVERN as a space), design practices for surround audio, and helper tools such as gizmos and debug keys. We also replaced the old Vive Tracker setup instructions and removed the mandatory step of installing OpenXR through a tar file.

Jam Showcase!

* A small hiccup: our original plan was to have the showcase on Wednesday after 1.5 days of development, but a burst water pipe in the ETC building delayed the whole timeline. In the end, after countless reschedulings, we had the showcase on Friday afternoon, right before an official ETC outing to watch a Pirates game.

Now, let us introduce the wonderful participants and their worlds of CAVERN Jam 2.0! This time, 13 participants formed 8 teams. Out of the 13 people, 2 were faculty, 4 were artists, and the rest were programmers and technical artists.

Mike and Bryan: Multiplayer Puzzle Game

Mike and Bryan are faculty! Mike was our project consultant, and Bryan was the research engineer in the ETC IT department. Both of them have worked with the previous and current CAVERN teams many times, and they were especially interested in trying out our documentation. In the end, they created a collaborative puzzle that plays surround sound! 3 players each hold a Vive Tracker controlling a colorful sphere. Observing the other spheres in the world, players physically move around and communicate with each other to find the right mix of colors. Upon success, a tune plays from each sphere, showcasing surround sound in the space.

Grace: Orbbec James Bond Cinematic Experience

Grace is the programmer of the other CAVERN team, Anamnesis, a project using Orbbec sensors to create an interactive live action film for the CAVERN. Grace also joined Jam 1.0, and this time, she created a James Bond experience. As our version of Orbbec was not yet integrated into the toolkit, and the Anamnesis team had worked with a paid asset for Femto Bolts throughout the semester, Grace used that asset as she was most familiar with it. In this experience, the Femto Bolts tracked her body, and when she walked to a certain place, the next animation would play. With the immersion created by the roundness of the space, along with cleverly designed visual attention direction and music, this was a really cinematic experience that somehow also felt like a performance!

Jing and Jose: Head-Tracked Space Exploration Game

Jing, who joined the previous jam as well, and Jose, who is the cinematographer in Team Anamnesis, collaborated and made a space exploration game. With a UI guiding players on where they are in the vast space, players control a Vive Tracker to get closer to a destination point on the map UI. One important thing to note was that, as we did not clearly explain the usage of head tracking in the documentation, they decided to move the entire world when moving the character in the world. This was the problem that HyCave faced last semester, which resulted in large CPU consumption. Fortunately, Jing and Jose’s world did not cause any lag, and we learned our lesson: the documentation needs a head tracking tutorial.
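To illustrate why moving the whole world can get expensive, here is a minimal Python sketch (not Unity code, and not the toolkit's head tracking API). It compares shifting every object against shifting a single viewer position. All function names and sample values are hypothetical; the point is only that both approaches give the same relative positions, while one of them touches every object each frame.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def move_world(objects: List[Vec3], step: Vec3) -> List[Vec3]:
    """Shift every object opposite to the player's step: one update per object."""
    return [(x - step[0], y - step[1], z - step[2]) for x, y, z in objects]

def move_viewer(viewer: Vec3, step: Vec3) -> Vec3:
    """Shift only the viewer: one update no matter how many objects exist."""
    return (viewer[0] + step[0], viewer[1] + step[1], viewer[2] + step[2])

def relative(obj: Vec3, viewer: Vec3) -> Vec3:
    """Position of an object as seen from the viewer."""
    return (obj[0] - viewer[0], obj[1] - viewer[1], obj[2] - viewer[2])
```

With a handful of objects the difference is negligible, which may be why this world ran smoothly; with thousands of objects the per-frame cost of `move_world` grows linearly.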

Yuhuai: Slenderman Horror Game

Yuhuai is a multi-talented person: a technical artist, programmer, and sound designer. He created a horror game with Slenderman. A Vive Tracker attached to a plastic stick serves as a flashlight within the game. If you successfully shine the light where Slenderman is standing, he will not continue toward you. If you fail too many times, he will jump at you for an extremely scary jump scare (which, due to the stereoscopic nature of the space, hugely startled even people at the edge of the CAVERN)!!

Mia and Jinyi: Screen Space UI and VFX Experimentation

Jinyi is a programmer who also created a Unity tool package in a previous semester, and Mia is Spelunx’s beloved 3D and VFX artist. They created a world testing the screen space UI, where you can select ETC faculty Chris Klug’s photo on screen, drag it to a TV, and hear a sound recording of him speaking. In addition to testing the UI feature, they also discovered that small particles like snowflakes work exceptionally well in the CAVERN, giving an immersive feel without intruding on guests’ physical space.

Skye and Enn: Doppler Effect Performing Arts

Skye and Enn are both artists. Skye has an additional CS degree, so he was the programmer of this team. This is an extremely cool project playing with the Doppler effect in the CAVERN. Wearing a Vive Tracker hat, as you get closer to a flower petal, your speed within the world also increases. The background music, playing from the second repeated petal in the flower, then exhibits the Doppler effect, where the perceived pitch shifts because the listener is moving relative to the sound source. In addition, this space is so artistic and fun (playing to the music: ) that people simply start dancing and making different movements just to play with the changes in sound. This eventually became a performing art piece!
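The pitch change in this world can be sketched with the textbook Doppler formula for a stationary source and a moving listener. This is a Python illustration only, not the team's Unity implementation; the function names, the 343 m/s speed of sound, and the sample speeds are assumptions.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed value)

def doppler_pitch_ratio(speed_toward_source: float,
                        speed_of_sound: float = SPEED_OF_SOUND) -> float:
    """Ratio of heard frequency to emitted frequency for a stationary source.

    speed_toward_source is positive when the listener approaches the source
    and negative when the listener moves away from it.
    """
    if speed_toward_source <= -speed_of_sound:
        raise ValueError("listener recedes at or above the speed of sound")
    return (speed_of_sound + speed_toward_source) / speed_of_sound

def heard_frequency(emitted_hz: float, speed_toward_source: float) -> float:
    """Frequency the moving listener perceives."""
    return emitted_hz * doppler_pitch_ratio(speed_toward_source)
```

Because the in-world speed grows as you approach a petal, the ratio keeps rising on approach and drops below 1 as soon as you back away, which is what makes dancing back and forth audible.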

Josh: Ported Beat Saber to CAVERN!

This time, our programmer Josh decided to port Beat Saber into the CAVERN. While processing the beat map was challenging in such a short amount of time, the main challenge was actually creating physical props that the Vive Trackers could be attached to and that would map to the game world while preserving the feel of moving in the physical world, so that the two worlds merge seamlessly. We had very cheap light sabers, and after countless tests, Josh attached the Vive Trackers to the base of each light saber and, in Unity, created offset box colliders to interact with the cubes flying at you, so that slashing in the real world results in correct slashing in the virtual one!
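The offset collider idea can be sketched as plain math: the tracker sits at the base of the saber, so the collider center is the tracker position plus a local offset rotated by the tracker's orientation. The Python below is an illustration under assumptions, not Josh's Unity code; the helper names, the choice of the local +Y axis as the blade direction, and the 0.5 m offset are all hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]

def rotation_about_z(degrees: float) -> Mat3:
    """3x3 rotation matrix for a counterclockwise turn about the Z axis."""
    a = math.radians(degrees)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rotate(m: Mat3, v: Vec3) -> Vec3:
    """Apply a rotation matrix to a vector."""
    return tuple(sum(m[r][k] * v[k] for k in range(3)) for r in range(3))

def collider_center(tracker_pos: Vec3, tracker_rot: Mat3,
                    local_offset: Vec3) -> Vec3:
    """World-space collider center: tracker position plus the rotated offset."""
    off = rotate(tracker_rot, local_offset)
    return tuple(p + o for p, o in zip(tracker_pos, off))

# Assumed setup: blade points along the tracker's local +Y,
# collider centered 0.5 m up the blade.
center = collider_center((1.0, 1.0, 0.0), rotation_about_z(90.0), (0.0, 0.5, 0.0))
```

In a game engine the same effect usually comes from parenting a collider to the tracked object with a local offset, so the engine performs this transform each frame.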

Winnie and Terri: Barbie 12 Dancing Princesses Orbbec

Orbbec was still being integrated when Jam 2.0 started. However, by Thursday night, core Orbbec support was almost complete. Therefore, Winnie worked on a simple gold spike to test the workflow. Inspired by the opening of Barbie in the 12 Dancing Princesses, where several silhouettes follow the same movement, the gold spike was meant to be simple. Except it was not that simple! It turned out that, since the cameras were tilted, looking down at the space at around 45 degrees, each camera also had to be carefully calibrated to a certain rotation (usually 180 degrees minus the angle it looks down at), and fine-tuning at the CAVERN was necessary.
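As a rough illustration of this calibration, the Python sketch below encodes the rule of thumb from this post (180 minus the downward tilt) and shows why an uncorrected tilt skews the data: a point straight ahead of a tilted camera is actually below it in the room. The function names and the axis convention (camera looks along +Z, +Y is up, tilt is a rotation about X) are assumptions, not the toolkit's API, and the real value still needs fine-tuning on site.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def calibration_rotation_deg(tilt_down_deg: float) -> float:
    """Starting rotation value per the rule of thumb: 180 minus the tilt."""
    return 180.0 - tilt_down_deg

def level_point(camera_point: Vec3, tilt_down_deg: float) -> Vec3:
    """Re-express a camera-space point in a level frame at the camera position.

    Assumes the camera looks along its own +Z and is pitched down about X,
    so its forward axis maps to (0, -sin(tilt), cos(tilt)) in the level frame.
    """
    a = math.radians(tilt_down_deg)
    x, y, z = camera_point
    return (x,
            y * math.cos(a) - z * math.sin(a),
            y * math.sin(a) + z * math.cos(a))
```

For a camera tilted down 45 degrees, `calibration_rotation_deg(45.0)` gives 135.0 as the starting value, and a point 2 m straight ahead of the lens ends up about 1.41 m forward and 1.41 m below the camera in the level frame.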

All in all, the showcase was a huge success, drawing more than 30 people in and out, and everyone had a joyous time celebrating each other’s creations!

Orbbec Release

Friday was our feature freeze deadline, set early in case more refinements were needed before the semester ended. So instead of supporting a full suite of interactions for Orbbec, the way Vive Trackers have building blocks, we decided to keep the Orbbec integration simple.

As of now, the CAVERN toolkit supports the following features for Orbbec Femto Bolts:

- one-click setup (instead of manually installing many dll files scattered across the Internet)

- support for a single camera at a time (multi-camera support would require streamlining and merging data streams from 3 different cameras, which was out of scope for our remaining time)

- helper skeleton visuals that show tracking in real time and can be turned on and off

- support for changing the orientation of the physical camera (the middle camera in the ETC CAVERN was installed upside down by accident, and changing it would break previous projects, yet we would like to support correctly installed setups elsewhere)

A working Orbbec prefab setup within a scene requires:

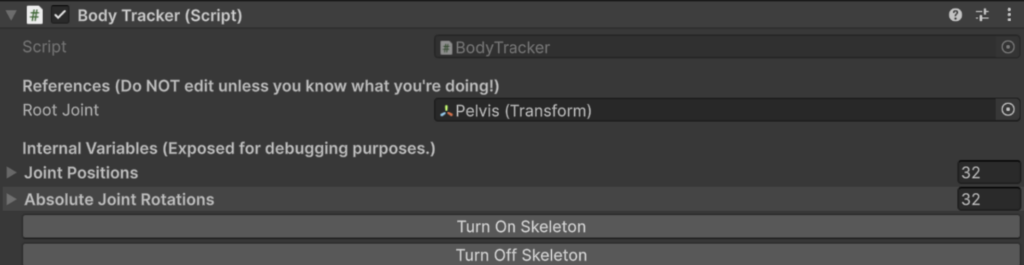

- BodyTracker: represents a physical sensor within the space. There are usually 3 sensors in current CAVERN setups, but we currently support only 1. This is where you turn skeleton rendering on or off.

- BodyTrackermanager: the manager that accepts and processes data. There should be only 1 in a scene. This is where you register the Femto Bolt devices. It will automatically detect the device if it is not already set up.

- BodyTrackerAvatar: the 3D model you would like your movements to drive. It has to be properly rigged according to the supported joints and bones. You can place as many avatars as you like, and they will all update according to the 1 supported sensor. If an avatar is placed at the same position as the BodyTracker, the model will render at the same place as the skeleton. Experimenting with the avatar's offset from the BodyTracker is how you find the correct avatar placement.