Overview

- 3D Models

- Tech Demo

- Challenges

- Next Week’s Goals

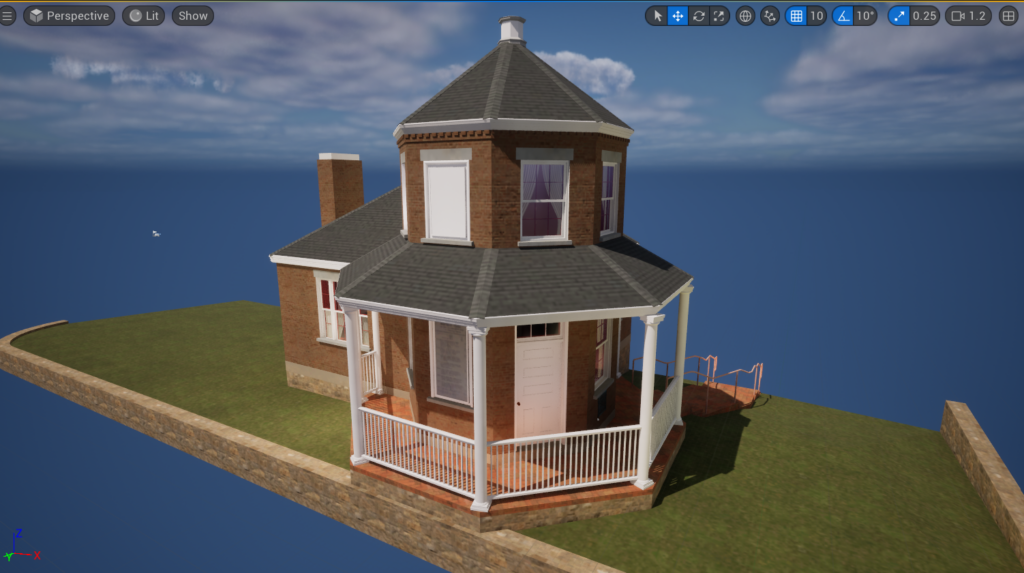

3D Models

This week, we gained access to many of the 3D models that we will use in our project. These include the scan of Searights Tollhouse from the Portals Project, as well as a Wild West-themed asset bundle with human characters, horses, wagons, and buildings from the era of the National Road.

Our artists will focus on arranging a layout of these buildings, as well as rigging and animating the models for our app design. They will also create realistic textures for the road at different stages of history, UI and other 2D art for in-game interactions, and visual effects to enhance the experience.

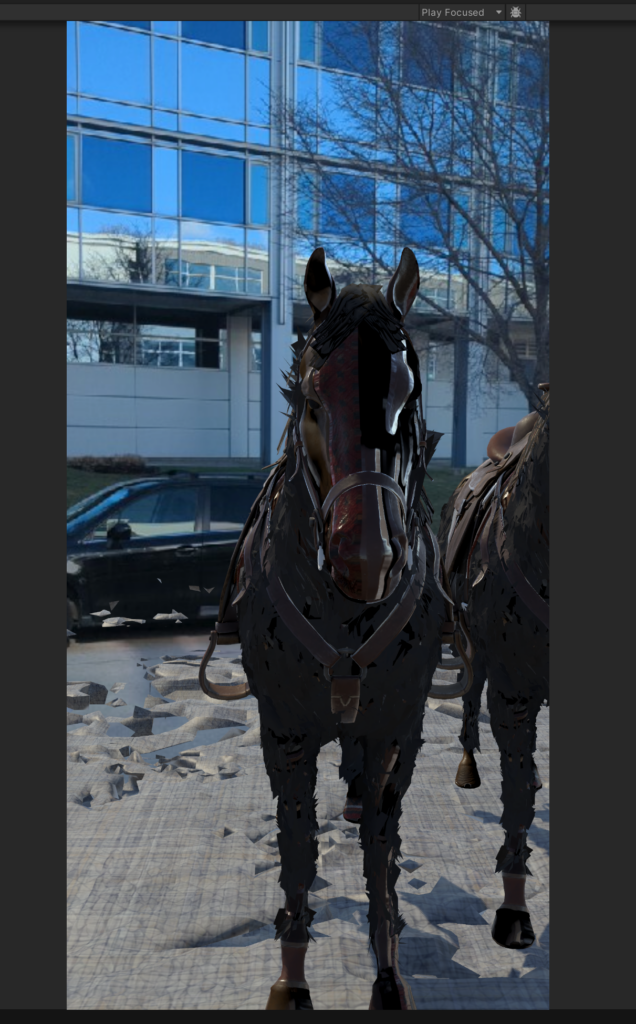

Tech Demo

With the new models ready to test, our programmers began building prototypes that spawn objects and move them around on the ground in an AR scene.

One challenge we needed to overcome was that most of the online tutorials and demos for setting up an AR scene with Niantic’s package show how to detect the ground and then have the user manually spawn objects (usually by throwing them into the scene). This approach does not work for our project, since we want more complex scenes that exist on their own without player input.

We were able to build a prototype that uses the location of the Niantic semantic layer meshes in the scene to place objects on the ground without the user needing to tap the screen or do anything else. This foundation will let us build more complex prefabs containing all of the objects that spawn on the ground around the player and that the player can interact with.
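The placement idea can be illustrated with a minimal, engine-agnostic sketch. It is not our prototype code and it does not use Niantic's API: it assumes the ground mesh is already available as a plain list of triangles, and every name in it (`ground_points`, `place_on_ground`, the 15-degree tilt limit) is hypothetical. It keeps only triangles that are close to horizontal, then snaps each desired spawn offset to the nearest ground point.

```python
import math

Vec3 = tuple[float, float, float]
Triangle = tuple[Vec3, Vec3, Vec3]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def ground_points(triangles: list[Triangle], max_tilt_deg: float = 15.0) -> list[Vec3]:
    """Return centroids of triangles that are roughly horizontal (y is up).

    The 15-degree default is an illustrative assumption, not a tuned value.
    """
    min_up = math.cos(math.radians(max_tilt_deg))
    points = []
    for a, b, c in triangles:
        normal = _cross(_sub(b, a), _sub(c, a))
        length = math.sqrt(sum(n * n for n in normal))
        if length == 0.0:
            continue  # skip degenerate triangles with zero area
        # abs() makes the check independent of the mesh winding order.
        if abs(normal[1]) / length >= min_up:
            points.append(tuple((a[i] + b[i] + c[i]) / 3.0 for i in range(3)))
    return points


def place_on_ground(offsets: list[tuple[float, float]], points: list[Vec3]) -> list[Vec3]:
    """Snap each desired (x, z) offset to the horizontally nearest ground point."""
    if not points:
        raise ValueError("no ground points detected yet")
    placed = []
    for x, z in offsets:
        placed.append(min(points, key=lambda p: (p[0] - x) ** 2 + (p[2] - z) ** 2))
    return placed
```

In a sketch like this, the offsets would come from the layout of a prefab (for example, where a wagon sits relative to the player), and placement would run once enough ground has been detected, with no tap from the user.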

Challenges

As mentioned above, a major challenge this week was turning what we learned from our tech exploration of the Niantic Spatial Platform into a demo that is useful for our project. The demo needed to place objects on the ground automatically using Niantic’s semantic layer data, without any user input, so that we can spawn more complex scenes and environments around the player.

Next Week’s Goals

- Field trip to Searights Tollhouse

- Meet with subject matter experts Carl Rosendahl (Distinguished Professor of Practice and the Director of ETC’s Silicon Valley campus, who has a lot of experience with VR and AR startup projects) and Chris Klug (ETC faculty, extensive narrative and tabletop design experience) to seek their advice

- Build prototypes for AR interactions that we designed and create a demo that can be tested at the IDeATe/ETC weekly Playtest Night on main campus

- Finalize in-progress team promo material including the team logo, half-sheet, poster, and team photo