Artist

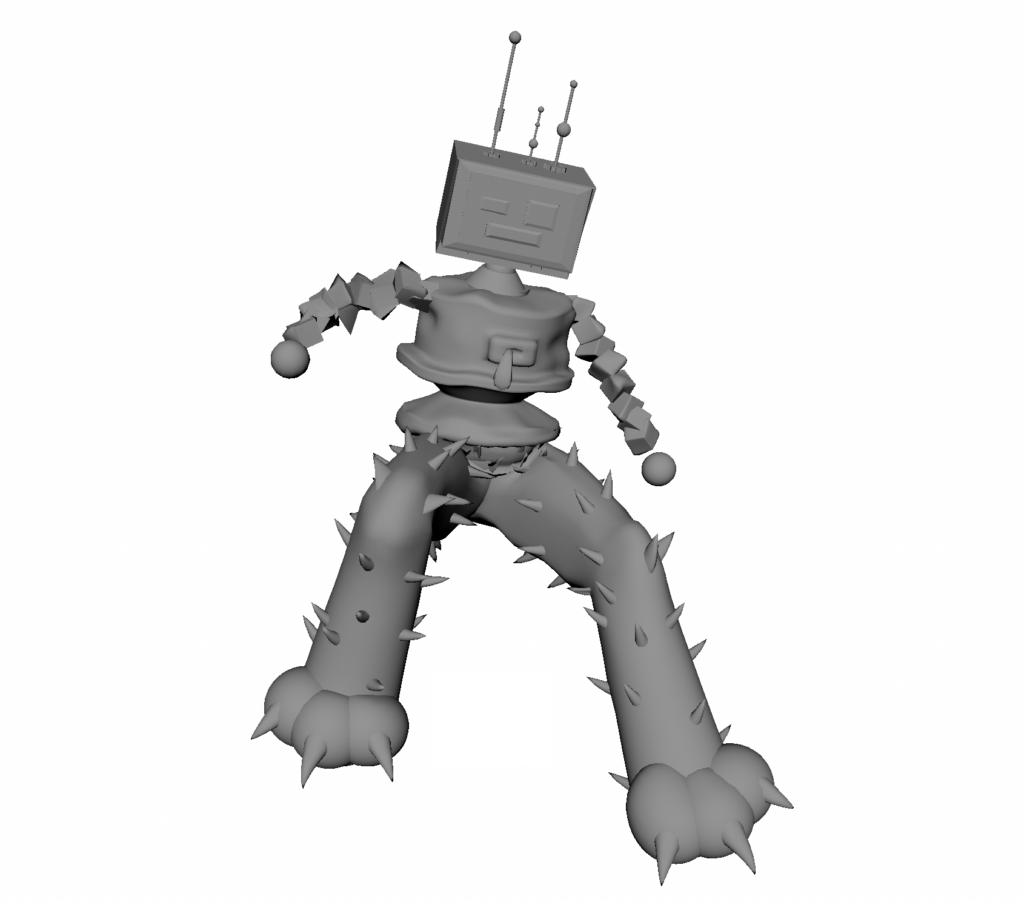

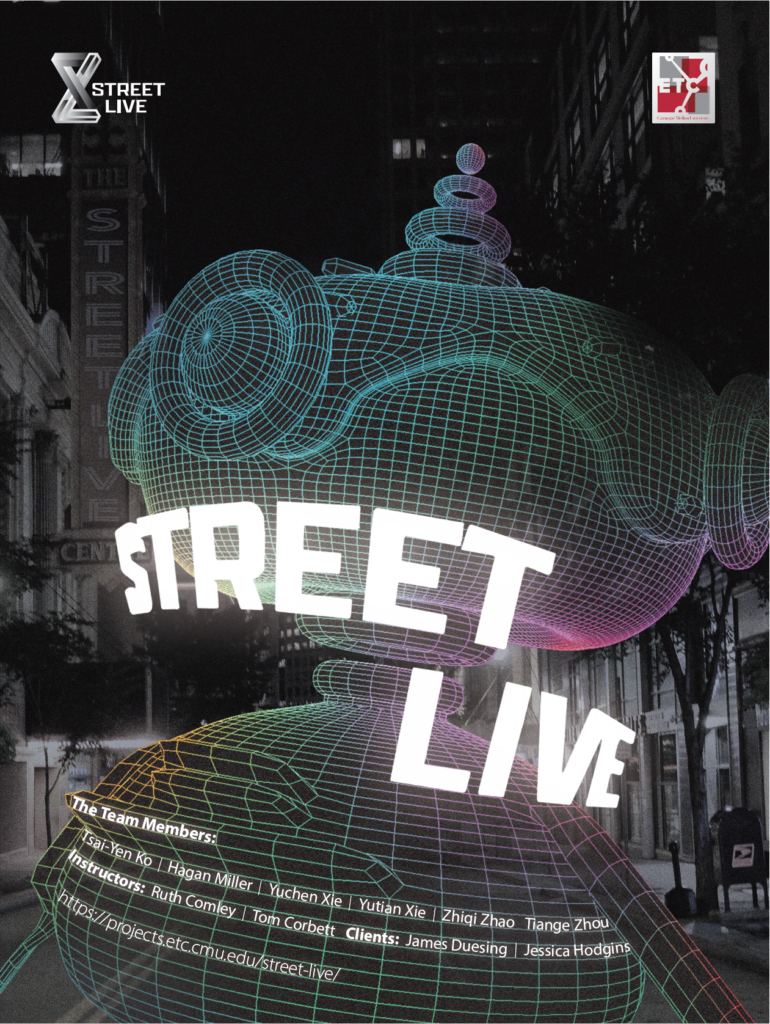

The Artists created more models for the mix and match character design. This week they also worked with the UI/UX Designers to decide how the storylines of the animations will play out within the application. The current plan is for the head of the mix and match character to determine the motivation and trajectory of the scene, while the torso and legs influence how that motivation is carried out. Hagan also got a simple form of animation retargeting working in Maya. Below is a playblast of part of the range-of-motion capture from our first visit to the motion capture studio on main campus. Ray worked on finishing the half-sheet and poster design, updated with the characters made by the artists.

New Creation 1

New Creation 2

Programmer

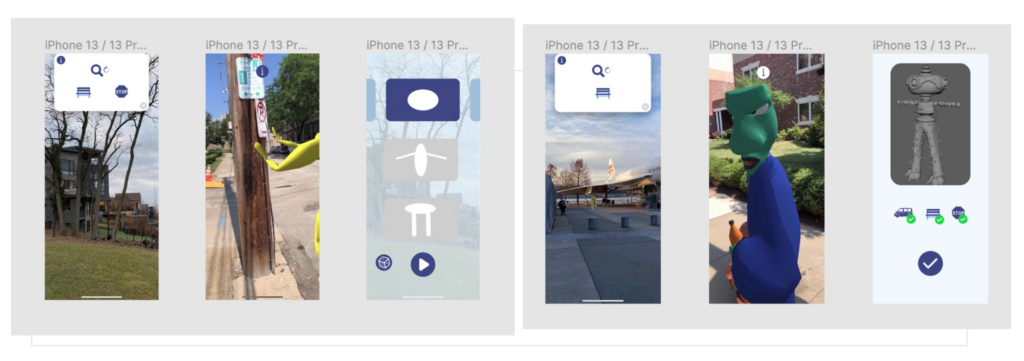

The Programmers proposed a solution to the frame rate issue in the form of a button: object detection is triggered only when the button is pressed, instead of running constantly. The programmers also implemented the cat animation in the Snob Bog application; the application uses the neural network to detect cars and then uses AR to detect the surface onto which the cat can jump. This is being refined to work with cars of various heights. The programmers also worked on training the new machine learning model to recognize new objects, such as water bottles. This work will continue in the upcoming weeks.
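The button-triggered approach described above can be illustrated with a minimal sketch. This is not the application's actual code: the class name `OnDemandDetector`, its methods, and the `detect` callable are all hypothetical stand-ins, and the real detector is assumed to be an expensive neural network call.

```python
from typing import Callable, List, Optional


class OnDemandDetector:
    """Runs a (hypothetical) detection function only after a button press."""

    def __init__(self, detect: Callable[[object], List[str]]) -> None:
        self._detect = detect      # assumed expensive call, e.g. a neural network
        self._pending = False      # True once the button has been pressed
        self.calls = 0             # how many times detection actually ran

    def on_button_pressed(self) -> None:
        # Request a single detection pass on the next frame.
        self._pending = True

    def on_frame(self, frame: object) -> Optional[List[str]]:
        # Most frames skip detection entirely, which keeps the frame rate up.
        if not self._pending:
            return None
        self._pending = False
        self.calls += 1
        return self._detect(frame)
```

In this sketch the per-frame cost is a single boolean check unless the user has asked for a detection, so the expensive call runs once per button press rather than once per frame.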

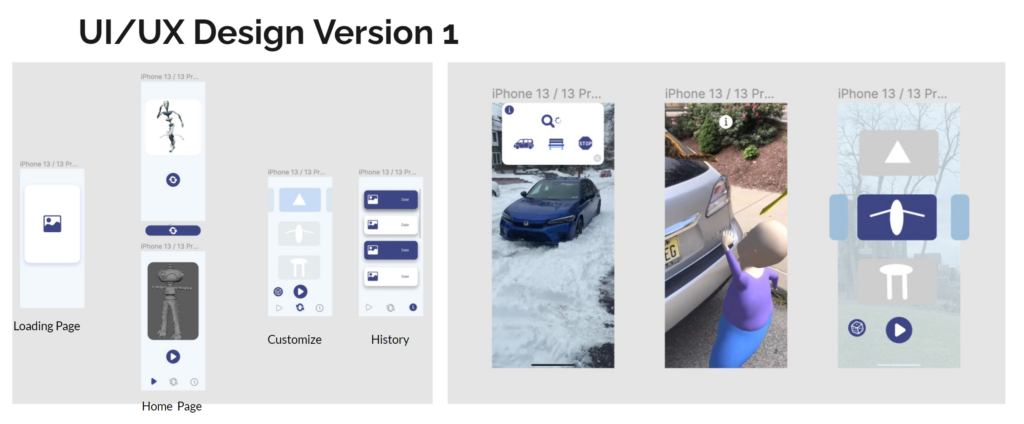

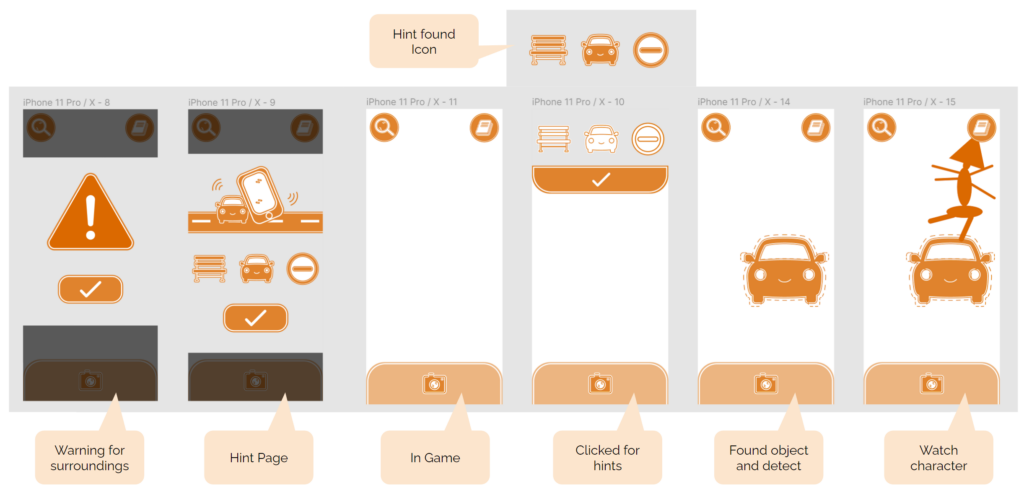

UI/UX Designer

The UI/UX Designers worked with the Artists to begin working out how the stories of the animations will be constructed, using the heads as the main motivator and the torso and legs as the influencers. The UI/UX Designers came up with two textless versions to propose to the client this week. After meeting with the client, however, they are now tasked with making a nearly textless user interface that, if possible, does not appear during the experience. User choices should also be made before the story experience begins, rather than being edited or changed during the walk.
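One way to picture this story structure is the rough sketch below, where the head selects the motivation and the torso and legs select how it is carried out. All part names and story fragments here are invented placeholders, not the team's actual characters or storylines, and the frozen dataclass is only an assumption about how "choices made before the walk" might be modeled.

```python
from dataclasses import dataclass

# Hypothetical lookup tables; the real parts and storylines are not specified.
MOTIVATIONS = {"cat": "chase a butterfly", "robot": "find a charging spot"}
TORSO_METHODS = {"cat": "sneakily", "robot": "methodically"}
LEG_METHODS = {"cat": "by leaping", "robot": "by rolling"}


@dataclass(frozen=True)  # frozen: the choice is fixed before the walk starts
class Character:
    head: str
    torso: str
    legs: str


def build_story(character: Character) -> str:
    """The head picks the motivation; torso and legs pick the method."""
    motivation = MOTIVATIONS[character.head]
    torso_method = TORSO_METHODS[character.torso]
    leg_method = LEG_METHODS[character.legs]
    return f"wants to {motivation}, {torso_method} and {leg_method}"
```

For example, `build_story(Character("cat", "robot", "robot"))` combines the cat head's motivation with the robot torso and legs' methods.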