Artists

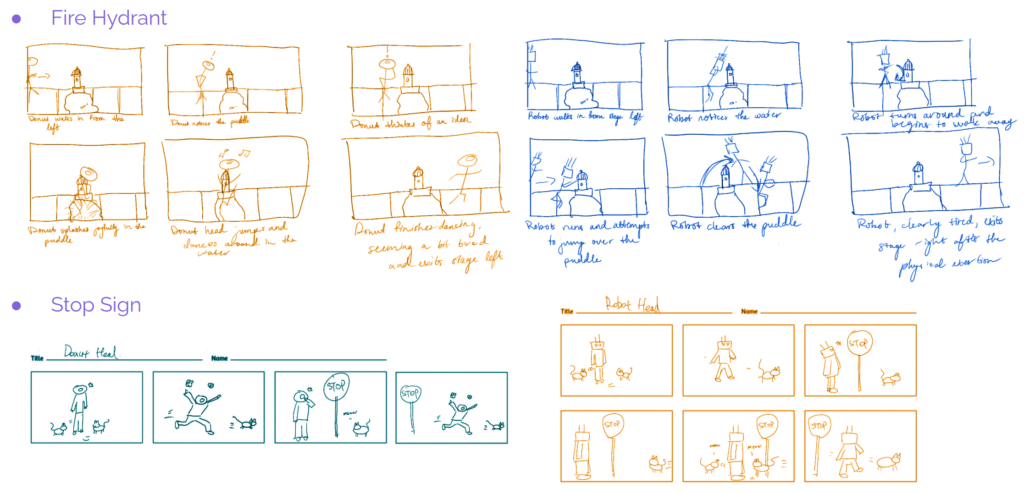

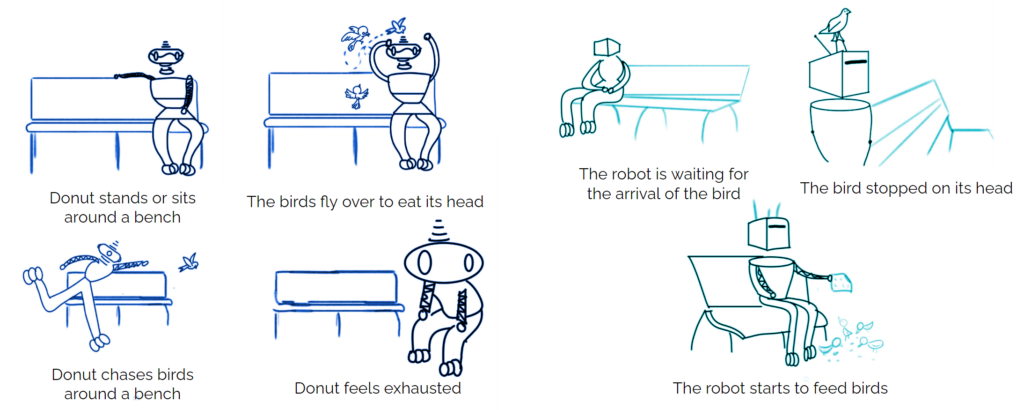

The Artists and the UI Designers worked together on the storylines for the customizable character. After meeting with the client this week, we decided we needed to shift our approach to the customizable character and the scenes in which it appears. The client is less concerned with the overarching story and more concerned with the customizable character and its scenes serving as a proof of concept. They can then use this as a reference after the semester ends, when they expand the application into the community realm, where people will be able to add their own custom content. Above are some of the initial storyboards that we came up with.

We have decided to use the bench as the triggering object for the customizable character scenes. This keeps a sense of continuity and indirectly teaches the user that certain objects trigger certain types of characters. For now, we plan to create one storyline that uses the bench as the triggering object. We also plan a standalone scene that is not tied to any particular triggering object and makes sense regardless of which object triggers it, which further supports the proof-of-concept approach. Outside of this, all roles have been putting time into the 1/2’s presentations that are due on the 27th.

Programmers

Our Programmers have been working on a short demo for the upcoming 1/2’s presentations. Cleo trained an object detection model on a custom dataset. However, she encountered an error when attempting to import this model into Unity. This problem likely occurred because some of the model's features are not yet fully supported by Unity. Cleo is currently working on a solution. Robin integrated Cleo’s previous work on neural network models into our current project, which now uses YOLOv3-tiny instead of Inception-V2 as its object detection model. Robin is also importing our current assets into the project.

UI Designers

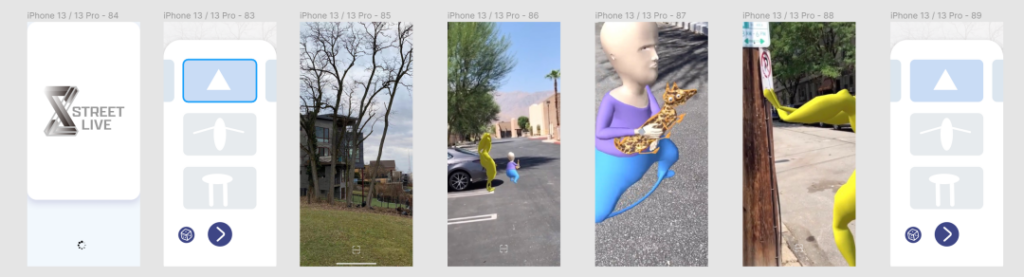

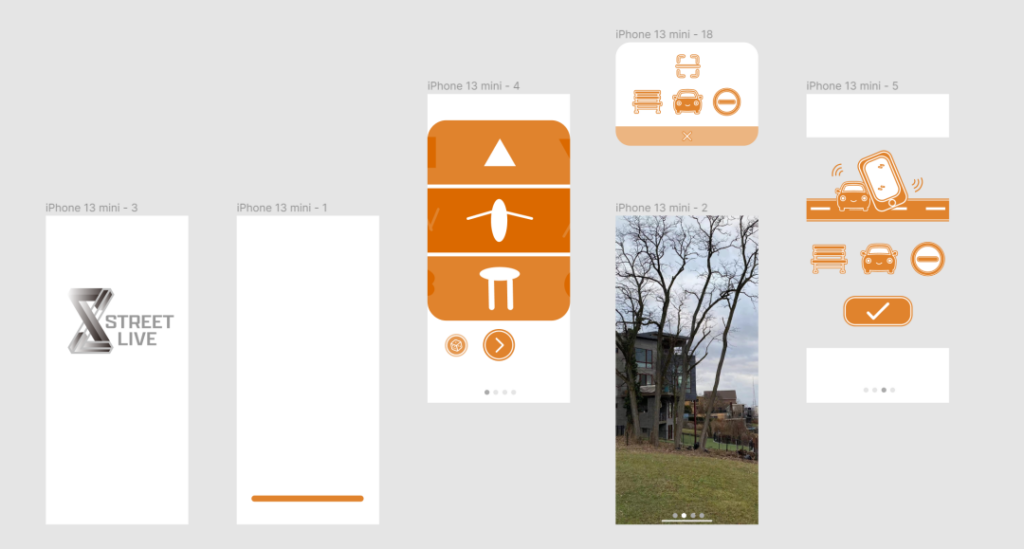

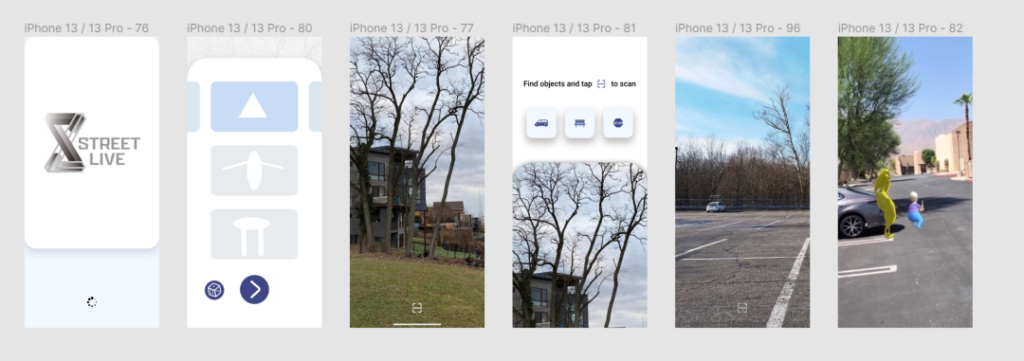

Our UI Designers focused on balancing an invisible UI with clear, intuitive instructions. They created four different wireframes and prototypes for playtesting. These playtests were conducted in Figma rather than in the current app, and we plan to run playtests using the actual app in the near future. These tests can be seen above.