ART FOR PROJECTION INTERACTION 1

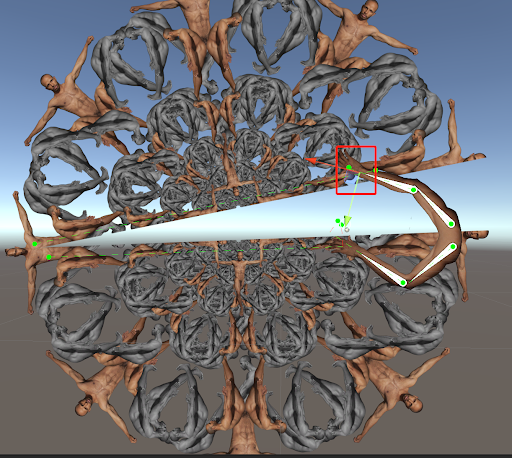

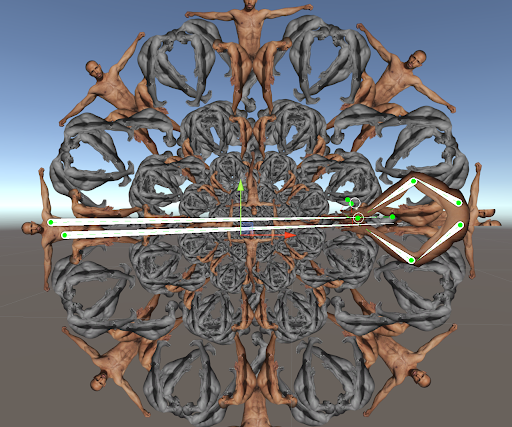

We started experimenting with different styles of animating and rigging the previous Learning Tree prototype to best suit the style of our client’s artworks. To make the guests’ feeling of controlling and affecting the artwork last longer, we decided to rig all the men on the tree as well. That way, when guests keep moving their arms after the tree has been generated, the men on the tree still move and react to the guests spontaneously.

PROJECTION INTERACTION 2

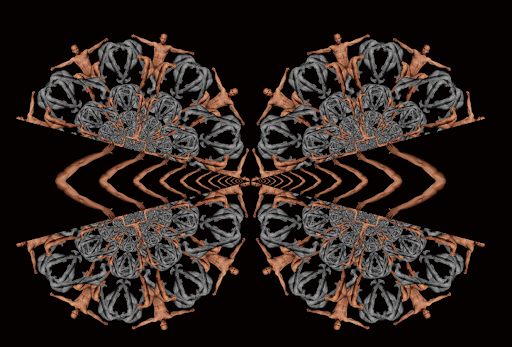

Moving forward in the direction of interactive projection mapping, the team continued exploring interactions based on our client Renee Cox’s artworks. Last week we worked on an artwork called Learning Tree. This week we explored another artwork, Pac-Man a Savoir, 2022, and started developing interactions based on it.

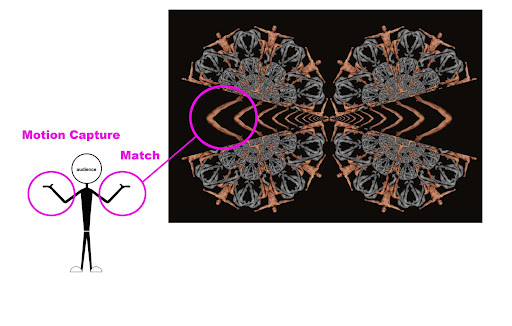

For the Pac-Man image, the inspiration is also obvious, so we decided to use the Kinect here as well. Guests control the opening and closing of the Pac-Man: we detect their arms and match them with the arms in the middle of the two semicircles.

TECH FOR PROJECTION INTERACTION 2

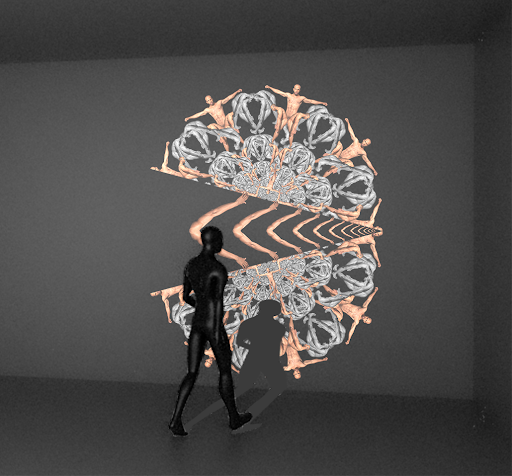

In the Pac-Man a Savoir interaction, guests control the arms in the image by moving their own arms.

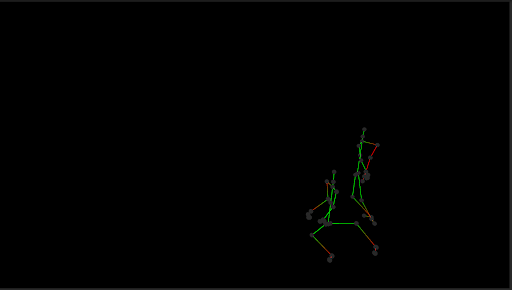

We achieve this effect in Unity by rigging IK joint points and a skeleton onto the 2D image. What we actually control are the red points in the picture, which in turn control the opening and closing of the Pac-Man. Our code detects the hand joint points from the Kinect skeleton and then moves each red point according to the change in the hand's position.
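The mapping from a tracked hand to a red control point can be sketched roughly as follows. This is a minimal, language-neutral illustration in Python rather than our Unity code; the names `Vec2`, `hand_to_target`, and `smooth`, and the parameters `arm_length`, `rest`, `reach`, and `alpha`, are all hypothetical and chosen only for this example.

```python
from dataclasses import dataclass


@dataclass
class Vec2:
    x: float
    y: float


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the range [low, high]."""
    return max(low, min(high, value))


def hand_to_target(hand: Vec2, shoulder: Vec2, arm_length: float,
                   rest: Vec2, reach: float) -> Vec2:
    """Map a hand position to a control-point position in the 2D image.

    Assumption: the hand offset is measured from the shoulder and divided
    by the arm length, so guests of different sizes get a similar range.
    """
    nx = clamp((hand.x - shoulder.x) / arm_length, -1.0, 1.0)
    ny = clamp((hand.y - shoulder.y) / arm_length, -1.0, 1.0)
    # 'rest' is the control point's neutral position, 'reach' its travel.
    return Vec2(rest.x + nx * reach, rest.y + ny * reach)


def smooth(previous: Vec2, current: Vec2, alpha: float = 0.3) -> Vec2:
    """Exponential smoothing to damp frame-to-frame jitter in the joints."""
    return Vec2(previous.x + alpha * (current.x - previous.x),
                previous.y + alpha * (current.y - previous.y))
```

Each frame, the smoothed result would be assigned to the control point's position; the smoothing step is an optional addition that trades a little latency for steadier motion.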

TECH CHALLENGES

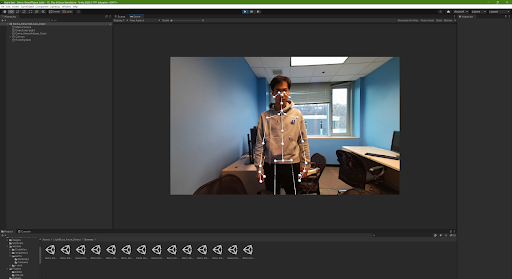

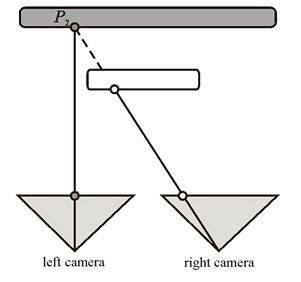

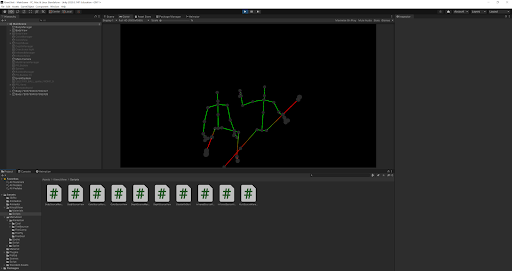

The Kinect captures video or images and detects certain joint points on each person (such as the shoulders, neck, and head), from which we can build a skeleton in the Unity scene.

As development went on, however, we ran into several technical problems.

1. Kinect 2 produces detection errors when two people stand very close to each other or when it cannot see parts of a body; as the images show, the skeletons become a mess. We found that the Azure Kinect largely avoids this problem because it predicts where your bones should be when parts of your body are not visible, or when bodies overlap or are too close together.

2. Tracking performance: tracking is less reliable in dark environments and with dark clothing or skin. When the main controller turns around, the sensor can temporarily lose track of their shoulders and hips.

3. Unity does not support multiple Kinect 2 devices.
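One common way to soften brief tracking dropouts, such as the lost shoulders and hips described above, is to hold a joint's last reliable position for a few frames. The sketch below illustrates that idea only; it is not what we implemented, and `JointHold`, `max_hold_frames`, and the `tracked` flag are hypothetical names standing in for whatever tracking state the sensor SDK reports.

```python
from typing import Dict, Optional, Tuple

Point = Tuple[float, float, float]


class JointHold:
    """Keeps the last reliable position of each joint for a limited time."""

    def __init__(self, max_hold_frames: int = 15):
        self.max_hold_frames = max_hold_frames
        self._last: Dict[str, Point] = {}
        self._age: Dict[str, int] = {}

    def update(self, name: str, position: Optional[Point],
               tracked: bool) -> Optional[Point]:
        """Return a usable position for the joint, or None if it is stale."""
        if tracked and position is not None:
            # Reliable sample: remember it and reset the dropout counter.
            self._last[name] = position
            self._age[name] = 0
            return position
        if name in self._last and self._age[name] < self.max_hold_frames:
            # Dropout: reuse the last good position for a few frames.
            self._age[name] += 1
            return self._last[name]
        # Never seen, or lost for too long: report the joint as missing.
        return None
```

Holding for too many frames makes the artwork feel frozen, so the limit would need tuning against the frame rate.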

These issues led us to explore other technology, and we came across the Azure Kinect, which seemed more appropriate for our use.

KINECT 2 VS AZURE KINECT

- The main feature of the Azure Kinect is its ToF (time-of-flight) sensor, a depth sensor that captures 3D data. This reduces failures in pitch-black scenes and with people wearing black outfits, since tracking does not rely on the visible image.

- Compared to Kinect 2, its body tracking, which we use from Unity, is more accurate and detects more joints. When you are facing sideways or are partially blocked by other people or objects, the Azure Kinect can better estimate the blocked parts of your body. It also has a built-in synchronisation feature that lets us sync multiple Kinect devices together, as discussed below.

- Other features include a built-in IMU and an adjustable field of view, which help with synchronizing and allow more versatility.

AZURE KINECT ADVANTAGES

1. Synchronise 2-3 Azure Kinect devices to increase the space that the cameras can cover.

2. The ToF depth sensors scan the environment as a 3D point cloud that covers every audience member within the space.

3. The built-in IMUs calculate the position of each device in relation to the others and synchronise the data gathered by all the devices.

4. Identify the main audience member interacting with the art piece and use the body-tracking API to detect their skeleton, which triggers the interaction on screen.
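Step 4 leaves open how the main audience member is chosen. One possible rule, sketched below as an assumption rather than our final design, is to pick the tracked body closest to the sensor and to keep the current guest unless someone else is clearly closer. `TrackedBody`, `pick_main_guest`, `max_distance`, and `switch_margin` are hypothetical names and values.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TrackedBody:
    body_id: int
    x: float  # metres left/right of the sensor
    z: float  # metres away from the sensor


def pick_main_guest(bodies: List[TrackedBody],
                    current_id: Optional[int] = None,
                    max_distance: float = 4.0,
                    switch_margin: float = 0.3) -> Optional[int]:
    """Return the id of the body that should drive the interaction."""
    # Ignore bodies outside the assumed interaction zone.
    candidates = [b for b in bodies if 0.0 < b.z <= max_distance]
    if not candidates:
        return None
    closest = min(candidates, key=lambda b: b.z)
    current = next((b for b in candidates if b.body_id == current_id), None)
    # Hysteresis: keep the current guest unless another is clearly closer,
    # so control does not flicker between two people at similar distances.
    if current is not None and current.z - closest.z <= switch_margin:
        return current.body_id
    return closest.body_id
```

The skeleton of the returned body would then be the only one fed into the on-screen interaction.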

We ended this week by meeting our client Renee Cox in person for the first time and discussing in more detail what she hopes for from the project.
