Production and Playtesting

This week marked the end of our final major asset sprint. We’ve also been collecting feedback from our instructors and faculty consultants to help us iterate on our existing environment. Over the next few weeks, we’ll be focusing heavily on playtesting. We have a few opportunities lined up to get feedback from people outside of the ETC, but we’ll also start doing more testing within the team as we rapidly iterate on our assets.

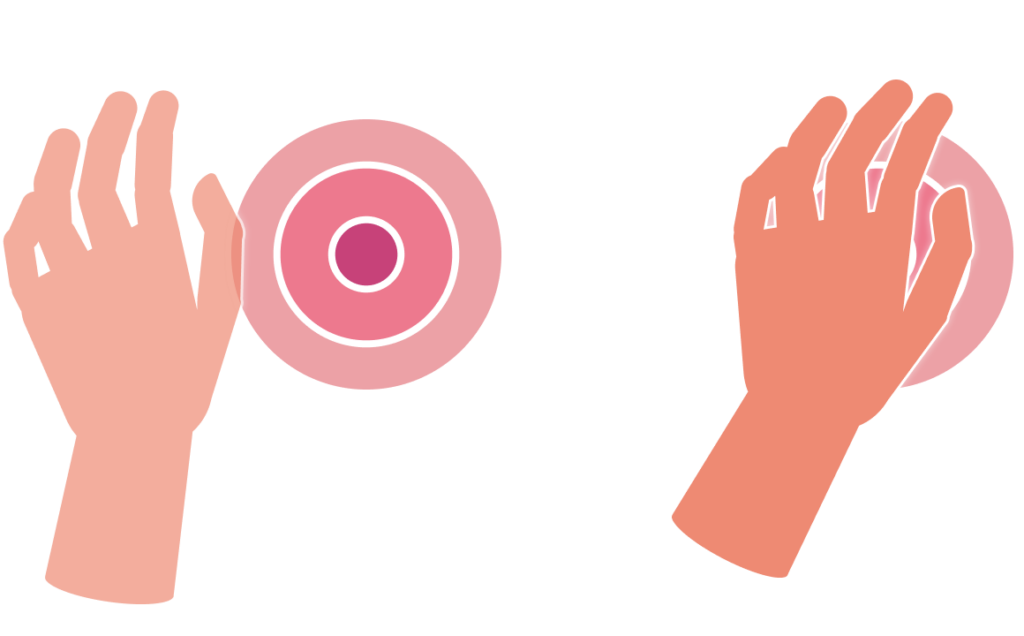

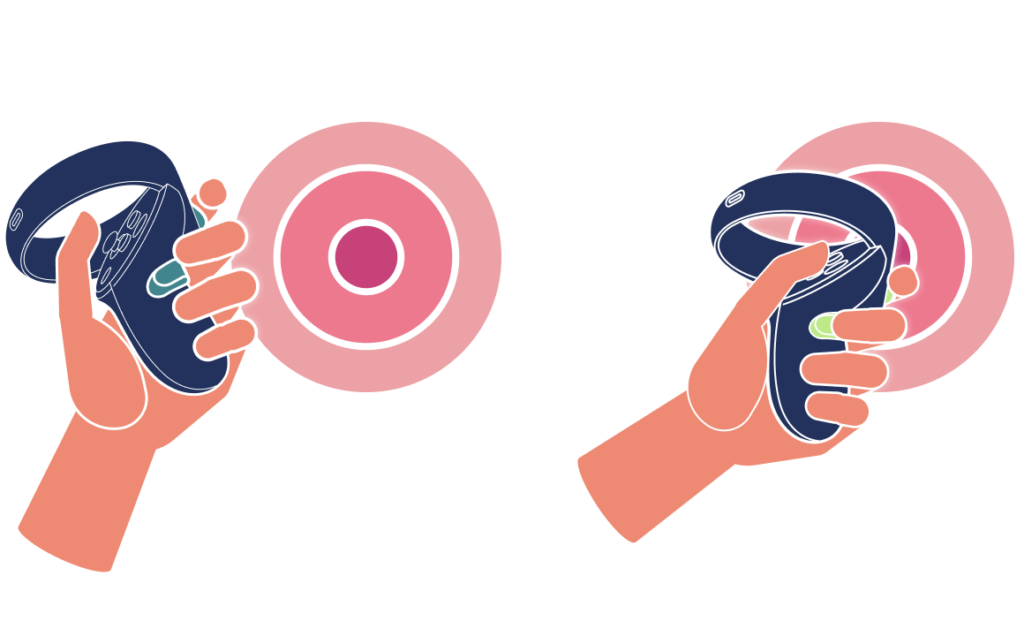

UI/UX

This week Constanza focused on UI changes, covering both how the meditator interacts with the environment and how they receive instructions. While going through the script, Constanza and Gillian picked out spots in the first two sections of the meditation that would benefit from pictorial instructions in addition to the audio instructions. Constanza then created several 2D assets to be integrated into the experience.

Environment Building

This week Lauren continued iterating on environmental features such as the dragonfly and the replacement flower for the hogweed. They also added new shaders like the swirling particles shown below. Faris began implementing these changes in the environment and worked on a global shader that we might use to playtest how obvious our biofeedback can be.

Biometrics and Sensor Integration

Zibo built a basic UI for the desktop app that acts as the connection between the Hexoskin and our meditation environment. It displays the Respiration Rate (RR), whether the meditator is inhaling or exhaling (Respiration Event), and the total length of the last breath the meditator took (Respiration Interval). Currently we have two methods of communicating the biofeedback in the environment. The first uses the Respiration Rate to calculate the Respiration Rate Variability and turns that into a “relaxation value” between 0 and 1 that maps onto the movement of an object. The second displays the Respiration Interval. We’re using these two methods because they represent gradual changes in the meditator’s breathing with minimal latency. Our next step is to look at incorporating the Respiration Event as part of our biofeedback. Faris and Constanza are also working to make our biofeedback display look more natural as the team works towards finding a balance between subtle and obvious displays of biofeedback.
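To make the first method more concrete, here is a minimal Python sketch of one way a stream of Respiration Rate samples could be turned into a relaxation value between 0 and 1. It is an illustration only, not the team's actual code: the class name, the rolling window, the use of standard deviation as the variability measure, the `max_variability` cap, and the choice that steadier breathing means "more relaxed" are all assumptions.

```python
from collections import deque
from statistics import pstdev


class RelaxationEstimator:
    """Hypothetical helper: maps respiration-rate samples to a value in [0, 1]."""

    def __init__(self, window_size=10, max_variability=4.0):
        # Both defaults are illustrative guesses, not tuned values.
        self.samples = deque(maxlen=window_size)  # keeps only the newest samples
        self.max_variability = max_variability

    def update(self, respiration_rate):
        """Add one sample (breaths per minute) and return the relaxation value."""
        self.samples.append(respiration_rate)
        if len(self.samples) < 2:
            return 0.0  # not enough data to measure variability yet

        # Assumption: variability = standard deviation over the rolling window.
        variability = pstdev(self.samples)
        normalized = min(variability / self.max_variability, 1.0)

        # Assumption: steadier breathing (low variability) reads as more relaxed.
        return 1.0 - normalized
```

The returned value could then drive something like the speed or height of an object in the environment, with the window size controlling how gradually the display responds.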

Zibo also set up remote desktop access to our Mac Mini so that testing with the Hexoskin is now available to more of the team. We were previously having trouble connecting the Hexoskin to non-Mac computers over Bluetooth, so this was a valuable step in setting up for playtesting next week.

Sound

After making lots of script edits last week, Gillian and Faris re-recorded the script. The app now has all of the updated dialogue and is beginning to get a soundscape. Programmatically, certain sound files trigger different events during the meditation, so it was a challenge to put the sound files together so that they flow naturally while still being broken up enough to trigger the right events. This will require some fine-tuning as we do more internal playtests.
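The trade-off described above can be pictured with a small Python sketch in which the narration is split into clips and some clips carry an event that fires when they start. This is only an illustration under assumed names: `Cue`, `run_cues`, the clip file names, and the event names are hypothetical and do not come from the app.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Cue:
    clip: str                    # hypothetical audio file name
    event: Optional[str] = None  # event fired when this clip starts, if any


def run_cues(cues: List[Cue],
             handlers: Dict[str, Callable[[], None]],
             play: Callable[[str], None]) -> List[str]:
    """Play clips in order, firing each clip's event just before it plays."""
    fired = []
    for cue in cues:
        if cue.event is not None:
            handlers[cue.event]()  # e.g. show a pictorial instruction
            fired.append(cue.event)
        play(cue.clip)             # stand-in for the real audio playback call
    return fired
```

Splitting the script into more clips gives more points where an event can fire, but every extra boundary is another place where the audio can sound stitched together, which is the balance the fine-tuning is aiming for.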