This week we began production on a few prototypes, which are detailed below. We are aiming to complete 13 prototypes by 1/2s, which would put us roughly halfway through all the prototypes we would like to complete by the end of the semester.

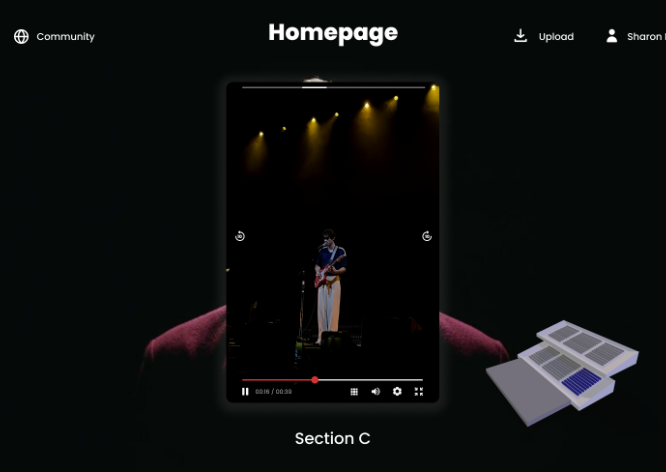

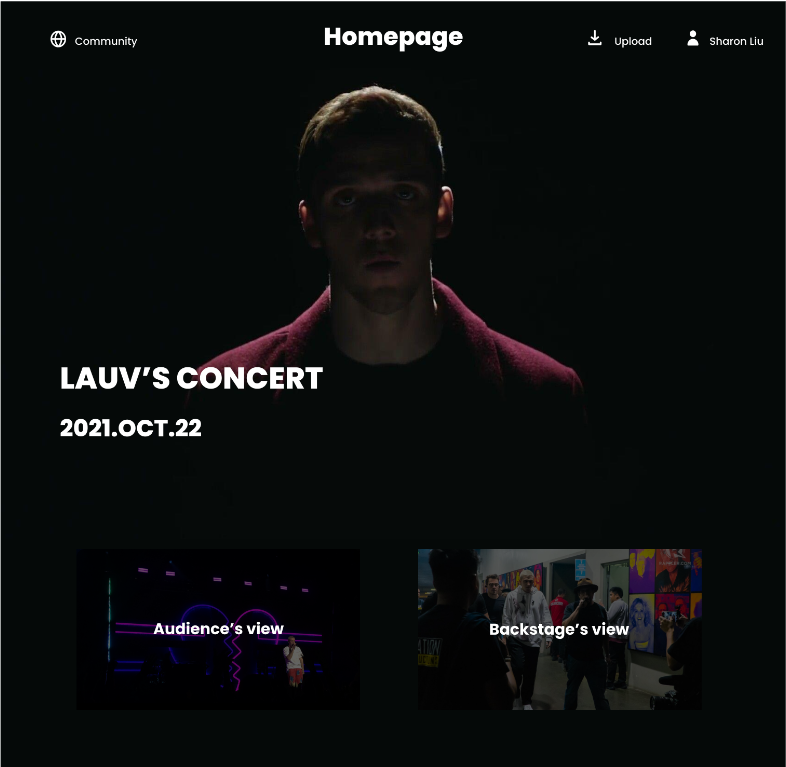

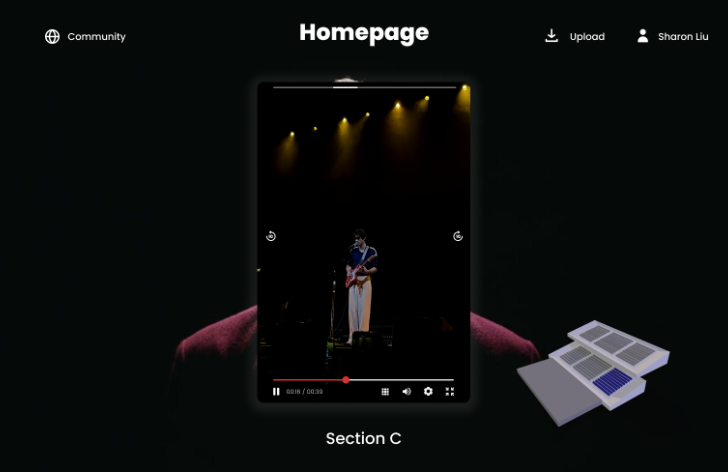

Theatre Map:

The Theatre Map allows users to interact with a 3D model of a theatre and click between different viewpoints.

Why?

- Possible bad seats (obscured view of the performance)

- Could offer a viewpoint that is not normally accessible (e.g., from backstage or the tech department)

Takeaways:

- We would like to develop this concept further by capturing actual footage of a theatrical performance and using it to playtest the usability of SwipeVideo in that space.

- We will possibly be going to Ruth Comely’s theatre to capture a performance, with additional cameras set up by tech and backstage.

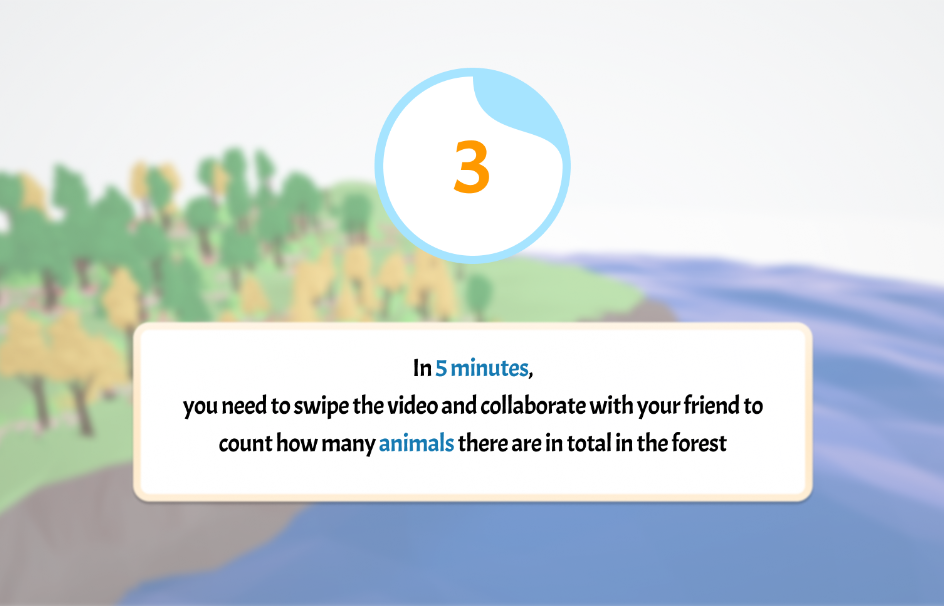

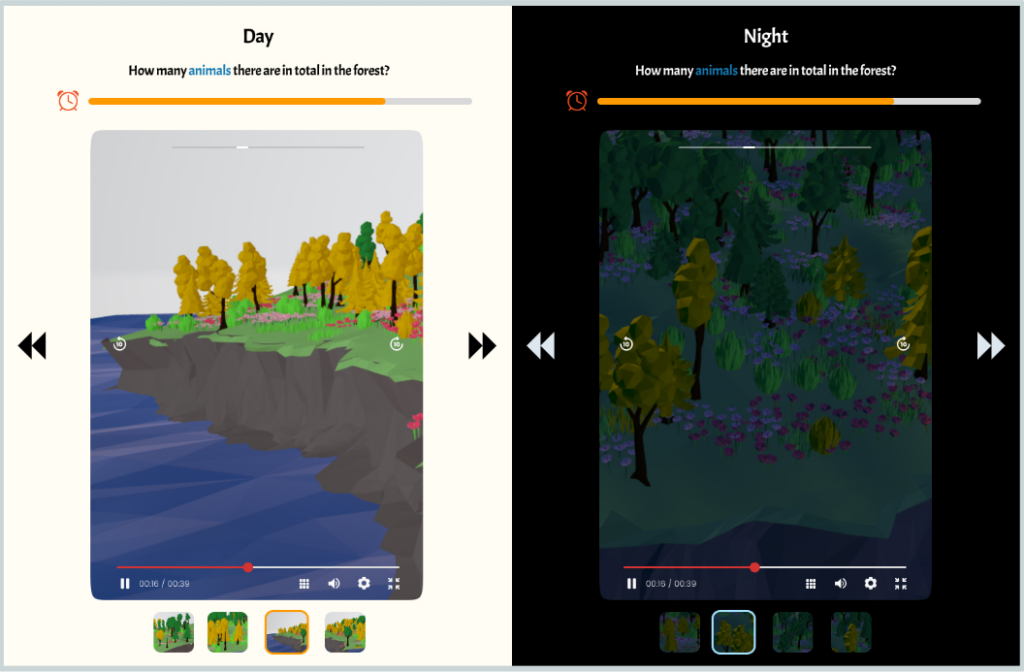

Educational Game:

The Educational Game was a proof of concept to see if SwipeVideo could be utilized in this way. We designed a concept for an educational game intended to help train third-graders’ 3D-thinking skills. As a cooperative game, two children would work together to answer questions such as: How many animals are in the forest?

Why?

- Designing a concept for this age range allowed us to think about how SwipeVideo could be used for gaming in a non-complex way.

Takeaways:

- The game concept could be adapted by asking players more difficult questions, so the target demographic could vary if developed further.

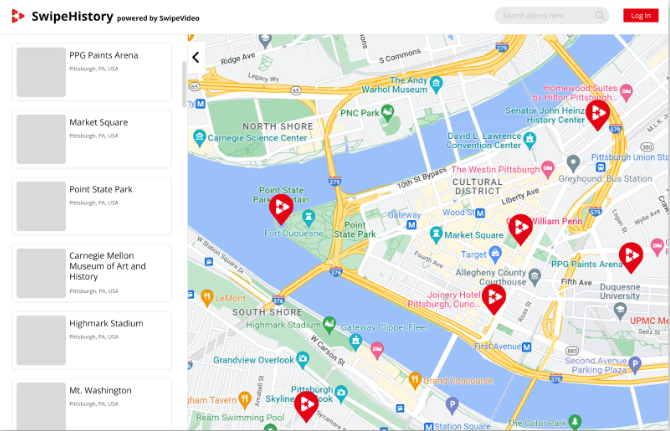

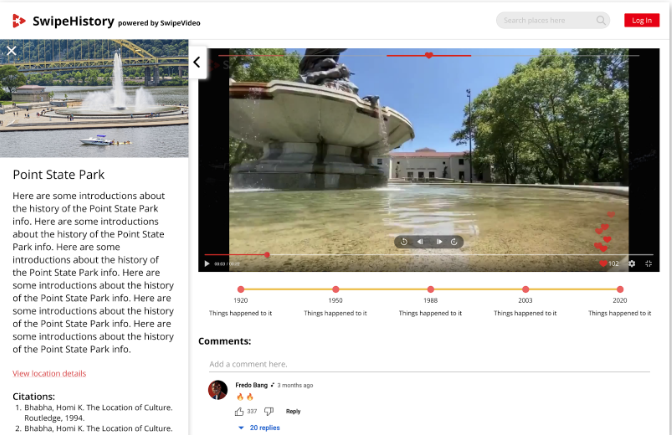

Swipe History:

SwipeHistory is a web portal that allows users to click tags on a city map to view specific historical landmarks with SwipeVideo. Users would be able to swipe between modern and archival footage, in effect swiping through history.

Why?

- We were interested in exploring an interactive way to engage with historical landmarks and history, specifically by blending modern and archival footage.

- Could be a way to bring SwipeVideo to both public and educational spaces.

Takeaways:

- We’d like to develop this further by exploring more of Pittsburgh history and capturing landmarks, as well as sourcing archival footage.

- The archival and modern footage would be more impactful if taken from the same angle, so that the change over time would be clearer.

- Could be applied to family archival projects as well. Could blend home videos and other footage to create a digital heirloom.

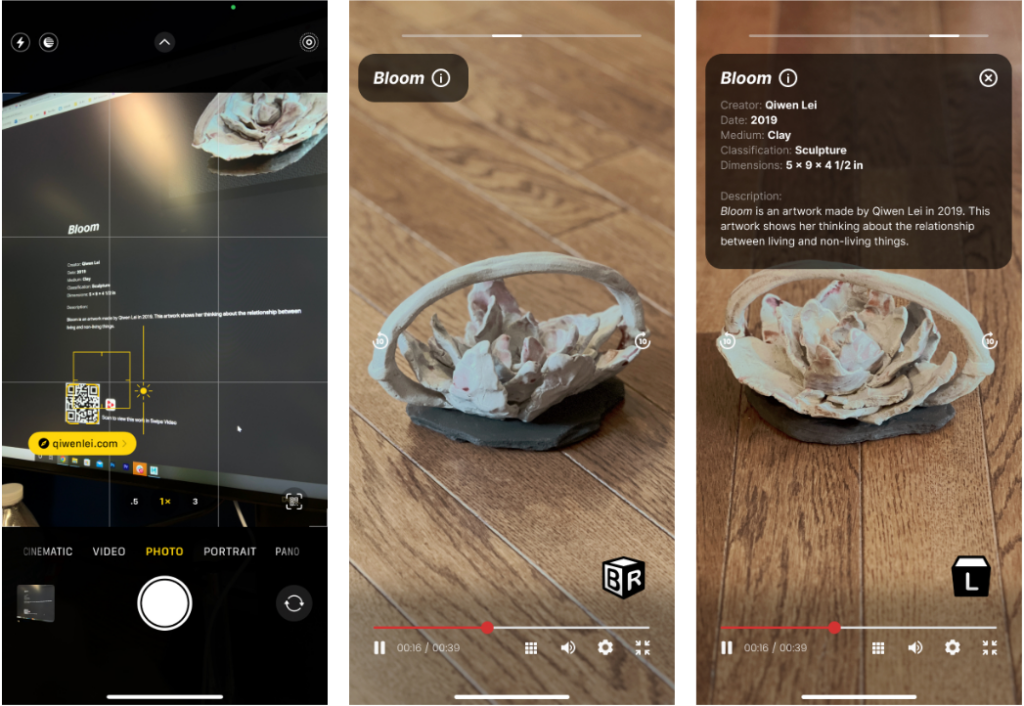

Further Work on Depth Camera:

This week we also developed the Depth Camera project further. This would enable us to create a mesh from biometric information using the SwipeVideo technology.

Why?

- Could offer a more affordable way to create motion-capture meshes.

Algorithm that I am using to generate edges:

- sample points on the depth photo in a grid pattern

- generate an idx for each point

- generate an edge for every pair of neighboring points on the grid

- map the idx of each point to its 3D coordinate

- remove unwanted points, along with any edges that contain them

- project the points into 3D space

- generate the edges according to the 3D positions of their end points
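The steps above could be sketched roughly as follows. This is only an illustration, not the project's actual code: it assumes the depth photo is already available as a NumPy array of distances in metres (which, as noted in the takeaways, is not yet the case), and the function name `depth_to_edges`, the focal lengths `fx` and `fy`, the centred principal point, the `step` size, and the `max_depth` cutoff are all hypothetical. The last step is interpreted here as building each edge as a pair of 3D end points.

```python
import numpy as np

def depth_to_edges(depth, step=4, fx=500.0, fy=500.0, max_depth=3.0):
    """Return (points, edges) for a depth image assumed to be in metres.

    fx, fy and the centred principal point are placeholder intrinsics,
    not values measured from any real camera.
    """
    h, w = depth.shape
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0

    # Sample pixels on a regular grid.
    rows = np.arange(0, h, step)
    cols = np.arange(0, w, step)

    # Give every sample an idx, laid out like the grid.
    idx = np.arange(rows.size * cols.size).reshape(rows.size, cols.size)

    # One edge per pair of horizontally or vertically neighboring samples.
    horizontal = np.stack([idx[:, :-1].ravel(), idx[:, 1:].ravel()], axis=1)
    vertical = np.stack([idx[:-1, :].ravel(), idx[1:, :].ravel()], axis=1)
    edges = np.concatenate([horizontal, vertical])

    # Map each idx to a 3D coordinate with a simple pinhole projection.
    v, u = np.meshgrid(rows, cols, indexing="ij")
    z = depth[v, u].astype(float)
    points = np.stack([(u - cx) * z / fx, (v - cy) * z / fy, z], axis=-1)
    points = points.reshape(-1, 3)  # row order matches idx

    # Remove unwanted points (no reading, or too far away) and their edges.
    keep = (points[:, 2] > 0) & (points[:, 2] <= max_depth)
    edges = edges[keep[edges].all(axis=1)]

    # Re-number the surviving points so the edges index the filtered array.
    new_idx = np.cumsum(keep) - 1
    return points[keep], new_idx[edges]


# Tiny example: a flat 4x4 depth map with one missing reading.
depth = np.full((4, 4), 1.0)
depth[0, 0] = 0.0
points, edges = depth_to_edges(depth, step=1)
segments = points[edges]  # each edge as a pair of 3D end points
```

Keeping the edges as index pairs until the very end means unwanted points can be dropped with a single boolean mask, and the 3D line segments are only built once the final point set is known.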

Takeaways:

- I still have not been able to figure out the relationship between the colors in the depth photo and real-world distance, so I am not yet able to stitch different photos together (this could be solved with iOS programming, which would give me access to more of the photo's parameters)

- The camera itself introduces distortion when items get close to the depth camera

- The depth photo becomes less accurate for objects of certain colors. For example, black objects are usually read as points that are very far away, while white objects are read as a little closer than they really are.